BCA 3RD SEM COA NOTES 2023-24

UNIT 1ST

Classification of Computers

The computer systems can be classified on the following basis:

1. On the basis of size.

2. On the basis of functionality.

3. On the basis of data handling.


Classification on the basis of size

1. Super computers : Supercomputers are the highest-performing systems. A supercomputer is a computer with a high level of performance compared to a general-purpose computer. The performance of a supercomputer is measured in FLOPS (floating-point operations per second) instead of MIPS (million instructions per second). All of the world’s fastest 500 supercomputers run Linux-based operating systems. Additional research is being conducted in China, the US, the EU, Taiwan and Japan to build even faster and more technologically advanced supercomputers. Supercomputers play an important role in computation and are used for intensive tasks in various fields, including quantum mechanics, weather forecasting, climate research, oil and gas exploration, molecular modeling, and physical simulations. Throughout their history, supercomputers have also been essential in the field of cryptanalysis.

Eg: PARAM, Jaguar, Roadrunner.

2. Mainframe computers : These are commonly called “big iron”. They are usually used by big organisations for bulk data processing such as statistics, census data processing and transaction processing, and are widely used as servers because they have a higher processing capability than most other classes of computers. Most mainframe architectures were established in the 1960s; research and development have continued over the years, and the mainframes of today are far better than the earlier ones in size, capacity and efficiency.

Eg: IBM z Series, System z9 and System z10 servers.

3. Mini computers : These computers came into the market in the mid-1960s and were sold at a much lower price than mainframes. They were designed for control, instrumentation, human interaction, and communication switching, as distinct from calculation and record keeping. As they evolved, they later became popular for more general use.

The term “minicomputer” was coined in the 1960s to describe the smaller computers that became possible with the use of transistors and core memory technologies, minimal instruction sets and less expensive peripherals such as the ubiquitous Teletype Model 33 ASR. They usually took up one or a few 19-inch rack cabinets, compared with the large mainframes that could fill a room.

Eg: PDP-8, PDP-11, VAX.

4. Micro computers : A microcomputer is a small, relatively inexpensive computer with a microprocessor as its CPU. It includes a microprocessor, memory, and minimal I/O circuitry mounted on a single printed circuit board. Its predecessors, mainframes and minicomputers, were comparatively much larger, harder to maintain and more expensive. Those earlier machines formed the foundation for the present-day microcomputers and smart gadgets that we use in day-to-day life.

Eg: Tablets, Smartwatches.

Classification on the basis of functionality

1. Servers : Servers are dedicated computers set up to offer services to clients. They are named after the type of service they offer. Eg: security server, database server.

2. Workstation : These are computers designed primarily to be used by a single user at a time, although they typically run multi-user operating systems. They are the ones we use for our day-to-day personal or commercial work.

3. Information Appliances : These are portable devices designed to perform a limited set of tasks such as basic calculations, playing multimedia and browsing the internet. They are generally referred to as mobile devices. They have very limited memory and flexibility and generally run on an “as-is” basis.

4. Embedded computers : These are computing devices used inside other machines to serve a limited set of requirements. They follow instructions from non-volatile memory and do not need to be rebooted or reset. The processing units used in such devices are built for those basic requirements only and differ from the ones used in personal computers, better known as workstations.

Classification on the basis of data handling

1. Analog : An analog computer is a form of computer that uses continuously changeable aspects of physical phenomena, such as electrical, mechanical, or hydraulic quantities, to model the problem being solved. Anything that varies continuously with respect to time can be called analog, just as an analog clock measures time by the distance traveled by its hands around the circular dial.

2. Digital : A computer that performs calculations and logical operations with quantities represented as digits, usually in the binary number system of “0” and “1”. It is capable of solving problems by processing information expressed in discrete form. By manipulating combinations of binary digits, it can perform mathematical calculations, organize and analyze data, control industrial and other processes, and simulate dynamic systems such as global weather patterns.

3. Hybrid : A computer that processes both analog and digital data. A hybrid computer is a digital computer that accepts analog signals, converts them to digital and processes them in digital form.

Introduction of ALU

Representing and storing numbers were the basic operations of early computers. The real breakthrough came when computation, that is, manipulating numbers by adding, multiplying and so on, came into the picture. These operations are handled by the computer’s arithmetic logic unit (ALU). The ALU is the mathematical brain of a computer. The first complete ALU on a single chip was the 74181, a 7400-series TTL integrated circuit from Texas Instruments that was released in 1970.

The ALU is a digital circuit that provides arithmetic and logic operations. It is the fundamental building block of the central processing unit of a computer. A modern CPU has a very powerful ALU that is complex in design. In addition to the ALU, a modern CPU contains a control unit and a set of registers. Most operations are performed by one or more ALUs, which load data from input registers. Registers are a small amount of storage available to the CPU, and they can be accessed very fast. The control unit tells the ALU what operation to perform on the available data. After the calculation or manipulation, the ALU stores the output in an output register.
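The register-in, operate, register-out flow described above can be sketched in a few lines of Python. This is only an illustrative model, not a description of any real ALU: the operation names, the register names `R_IN1`, `R_IN2`, `R_OUT` and the 8-bit width are assumptions made for the example.

```python
def alu(op, a, b=0, width=8):
    """Apply one ALU operation to the input-register values a and b."""
    mask = (1 << width) - 1  # keep the result inside the register width
    operations = {
        "ADD": lambda: a + b,
        "SUB": lambda: a - b,
        "AND": lambda: a & b,
        "OR": lambda: a | b,
        "XOR": lambda: a ^ b,
        "NOT": lambda: ~a,
    }
    return operations[op]() & mask


# Hypothetical register file: two input registers and one output register.
registers = {"R_IN1": 12, "R_IN2": 10, "R_OUT": 0}

# The "control unit" chooses the operation; the ALU writes the output register.
registers["R_OUT"] = alu("ADD", registers["R_IN1"], registers["R_IN2"])
print(registers["R_OUT"])  # 22
```

Masking the result to the register width models the fact that a real register holds a fixed number of bits, so for example `alu("SUB", 3, 5)` wraps around to 254 in 8 bits.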

Introduction of Control Unit and its Design


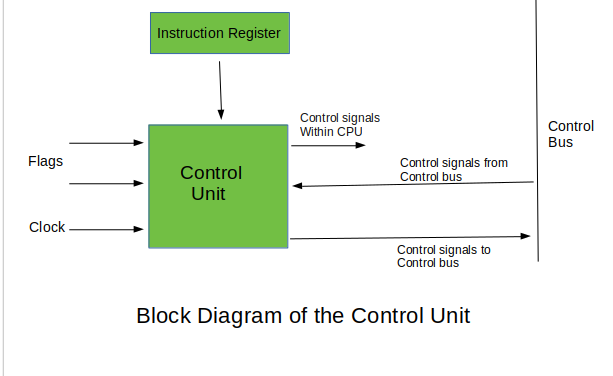

The Control Unit is the part of the computer’s central processing unit (CPU) that directs the operation of the processor. It was included as part of the Von Neumann Architecture by John von Neumann. It is the responsibility of the Control Unit to tell the computer’s memory, arithmetic/logic unit and input and output devices how to respond to the instructions that have been sent to the processor. It fetches the instructions of a program from the main memory into the processor’s instruction register and, based on this register’s contents, generates control signals that supervise the execution of these instructions.

A control unit works by receiving input information, which it converts into control signals that are then sent to the rest of the processor. The processor then tells the attached hardware what operations to perform. The functions that a control unit performs depend on the type of CPU, because the architecture of the CPU varies from manufacturer to manufacturer. Examples of devices that require a CU are:


Central Processing Units (CPUs)

Graphics Processing Units (GPUs)

Functions of the Control Unit –

It coordinates the sequence of data movements into, out of, and between a processor’s many sub-units.

It interprets instructions.

It controls data flow inside the processor.

It receives external instructions or commands, which it converts into a sequence of control signals.

It controls many execution units (e.g. ALU, data buffers and registers) contained within a CPU.

It also handles multiple tasks, such as fetching, decoding, execution handling and storing results.

Types of Control Unit –

There are two types of control units: Hardwired control unit and Microprogrammable control unit.

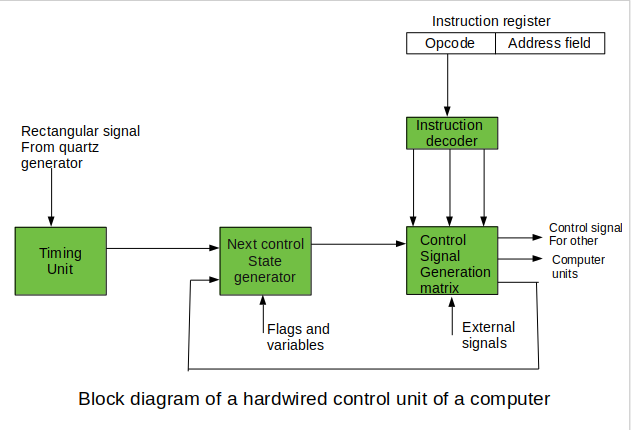

Hardwired Control Unit –

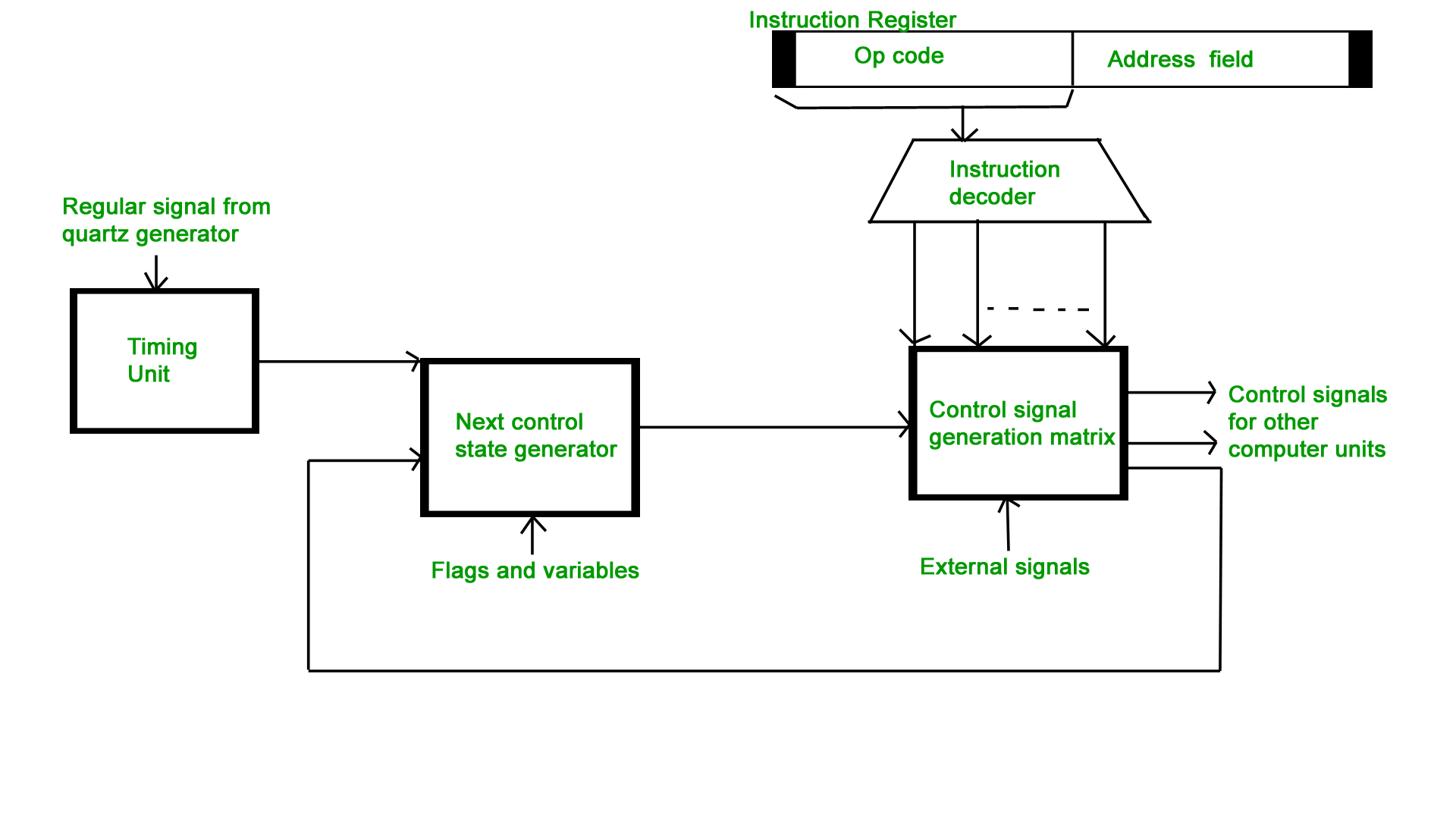

In the hardwired control unit, the control signals needed for instruction execution are generated by specially designed hardware logic circuits, in which the signal generation method cannot be modified without a physical change to the circuit structure. The operation code of an instruction contains the basic data for control signal generation. The operation code is decoded in the instruction decoder, which consists of a set of decoders that decode different fields of the instruction opcode.

As a result, a few output lines going out from the instruction decoder obtain active signal values. These output lines are connected to the inputs of the matrix that generates control signals for the executive units of the computer. This matrix implements logical combinations of the decoded signals from the instruction opcode with the outputs from the matrix that generates signals representing consecutive control unit states, and with signals coming from outside the processor, e.g. interrupt signals. The matrices are built in a similar way to programmable logic arrays.

Control signals for an instruction execution have to be generated not at a single point in time but during the entire time interval that corresponds to the instruction execution cycle. Following the structure of this cycle, a suitable sequence of internal states is organized in the control unit.

A number of signals generated by the control signal generator matrix are sent back to the inputs of the next control state generator matrix. This matrix combines these signals with the timing signals, which are generated by the timing unit based on the rectangular pulses usually supplied by a quartz generator. When a new instruction arrives at the control unit, the control unit is in the initial state of new instruction fetching. Instruction decoding allows the control unit to enter the first state relating to the execution of the new instruction, which lasts as long as the timing signals and other input signals, such as flags and state information of the computer, remain unaltered. A change of any of these signals triggers a change of the control unit state.

This causes a new respective input to be generated for the control signal generator matrix. When an external signal appears (e.g. an interrupt), the control unit enters the next control state, which is the state concerned with the reaction to this external signal (e.g. interrupt processing). The values of flags and state variables of the computer are used to select suitable states for the instruction execution cycle.

The last states in the cycle are control states that commence fetching the next instruction of the program: sending the program counter content to the main memory address buffer register and then reading the instruction word into the instruction register of the computer. When the ongoing instruction is the stop instruction that ends program execution, the control unit enters an operating system state, in which it waits for the next user directive.

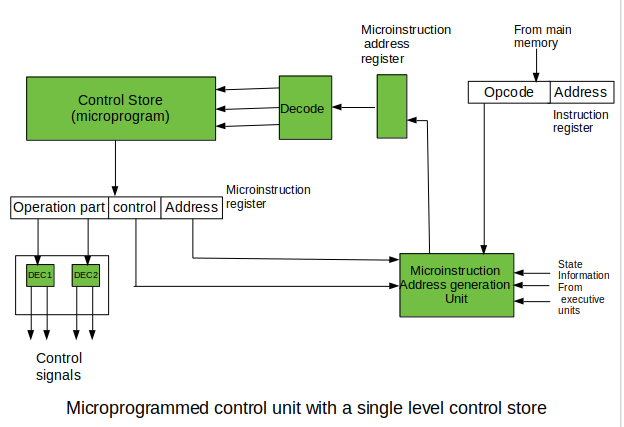

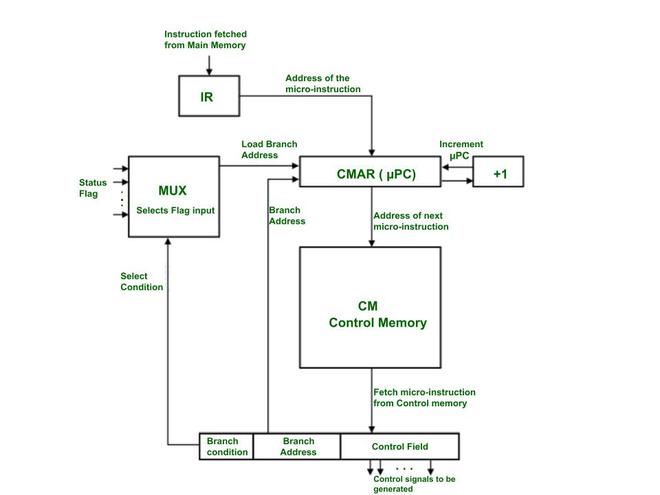

Microprogrammable control unit –

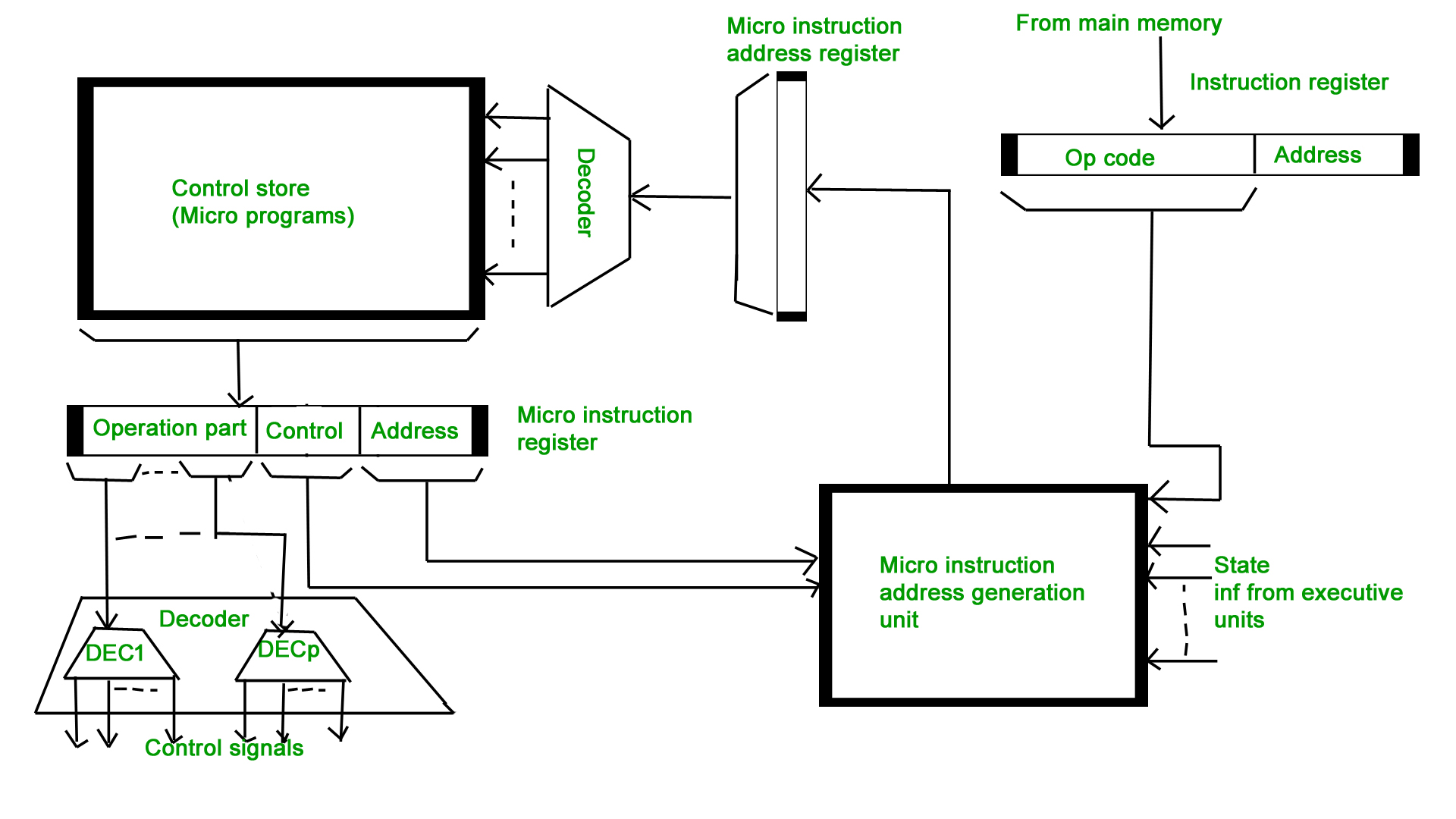

The fundamental difference between these unit structures and the structure of the hardwired control unit is the existence of the control store, which is used for storing words containing the encoded control signals needed for instruction execution.

In microprogrammed control units, subsequent instruction words are fetched into the instruction register in the normal way. However, the operation code of each instruction is not directly decoded to enable immediate control signal generation; instead, it supplies the initial address of a microprogram contained in the control store.

With a single-level control store:

In this, the instruction opcode from the instruction register is sent to the control store address register. Based on this address, the first microinstruction of the microprogram that interprets the execution of this instruction is read into the microinstruction register. The operation part of this microinstruction contains encoded control signals, normally as a few bit fields. These fields are decoded in a set of microinstruction field decoders. The microinstruction also contains the address of the next microinstruction of the given instruction’s microprogram and a control field used to control the activities of the microinstruction address generator.

The last-mentioned field decides the addressing mode (addressing operation) to be applied to the address embedded in the ongoing microinstruction. In microinstructions with a conditional addressing mode, this address is refined by using the processor condition flags that represent the status of computations in the current program. The last microinstruction in the microprogram of the given instruction is the microinstruction that fetches the next instruction from the main memory to the instruction register.

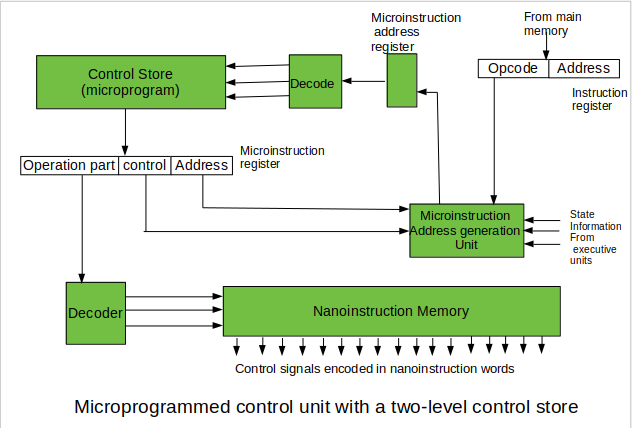

With a two-level control store:

In a control unit with a two-level control store, a nano-instruction memory is included besides the control memory for microinstructions. In such a control unit, microinstructions do not contain encoded control signals. Instead, the operation part of a microinstruction contains the address of a word in the nano-instruction memory, and that word contains the encoded control signals. The nano-instruction memory contains all combinations of control signals that appear in the microprograms that interpret the complete instruction set of a given computer, each written once in the form of a nano-instruction.

In this way, unnecessary storing of the same operation parts of microinstructions is avoided. The microinstruction word can be much shorter than with the single-level control store. This gives a much smaller size in bits of the microinstruction memory and, as a result, a much smaller size of the entire control memory. The microinstruction memory contains the control for the selection of consecutive microinstructions, while the control signals themselves are generated on the basis of nano-instructions. In nano-instructions, control signals are frequently encoded using the 1 bit per signal method, which eliminates decoding.
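A minimal sketch of the two-level lookup may make the idea concrete. Everything in it is hypothetical: the signal names, the memory contents and the `run_microprogram` helper are invented for illustration and do not describe any particular processor. Each microinstruction holds only a short nano-memory address plus the address of the next microinstruction, while the wide control words (1 bit per signal) are stored once in the nano-instruction memory.

```python
# Hypothetical control signals, most significant bit first.
SIGNALS = ["PC_OUT", "MAR_IN", "MEM_READ", "IR_IN", "PC_INC"]

# Nano-instruction memory: every distinct control word is stored only once,
# encoded with one bit per control signal (no decoding needed).
nano_memory = [
    0b11000,  # PC_OUT, MAR_IN
    0b00110,  # MEM_READ, IR_IN
    0b00001,  # PC_INC
]

# Microinstruction memory: (address in nano memory, next microinstruction).
micro_memory = [
    (0, 1),
    (1, 2),
    (2, 3),
    (0, 4),      # reuses nano word 0 instead of storing it again
    (1, None),   # None marks the end of this microprogram
]


def run_microprogram(start):
    """Return the list of active control signals for each microinstruction."""
    trace = []
    address = start
    while address is not None:
        nano_address, next_address = micro_memory[address]
        word = nano_memory[nano_address]
        active = [
            name
            for position, name in enumerate(SIGNALS)
            if word & (1 << (len(SIGNALS) - 1 - position))
        ]
        trace.append(active)
        address = next_address
    return trace


for step in run_microprogram(0):
    print(step)
```

Five microinstructions share three nano words here, which is the storage saving the text describes; the cost is an extra memory lookup per microinstruction.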

Von Neumann Architecture

Von Neumann architecture was first published by John von Neumann in 1945.

His computer architecture design consists of a Control Unit, Arithmetic and Logic Unit (ALU), Memory Unit, Registers and Inputs/Outputs.

Von Neumann architecture is based on the stored-program computer concept, where instruction data and program data are stored in the same memory. This design is still used in most computers produced today.

Central Processing Unit (CPU)

The Central Processing Unit (CPU) is the electronic circuit responsible for executing the instructions of a computer program.

It is sometimes referred to as the microprocessor or processor.

The CPU contains the ALU, CU and a variety of registers.

Registers

Registers are high speed storage areas in the CPU. All data must be stored in a register before it can be processed.

| Register | Full name | Purpose |
|---|---|---|
| MAR | Memory Address Register | Holds the memory location of data that needs to be accessed |
| MDR | Memory Data Register | Holds data that is being transferred to or from memory |
| AC | Accumulator | Where intermediate arithmetic and logic results are stored |
| PC | Program Counter | Contains the address of the next instruction to be executed |
| CIR | Current Instruction Register | Contains the current instruction during processing |

Arithmetic and Logic Unit (ALU)

The ALU allows arithmetic (add, subtract etc) and logic (AND, OR, NOT etc) operations to be carried out.

Control Unit (CU)

The control unit controls the operation of the computer’s ALU, memory and input/output devices, telling them how to respond to the program instructions it has just read and interpreted from the memory unit.

The control unit also provides the timing and control signals required by other computer components.

Buses

Buses are the means by which data is transmitted from one part of a computer to another, connecting all major internal components to the CPU and memory.

A standard CPU system bus is comprised of a control bus, data bus and address bus.

| Bus | Purpose |
|---|---|
| Address Bus | Carries the addresses of data (but not the data) between the processor and memory |
| Data Bus | Carries data between the processor, the memory unit and the input/output devices |
| Control Bus | Carries control signals/commands from the CPU (and status signals from other devices) in order to control and coordinate all the activities within the computer |

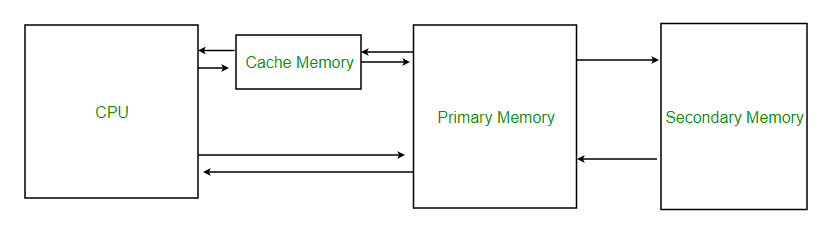

Memory Unit

The memory unit consists of RAM, sometimes referred to as primary or main memory. Unlike a hard drive (secondary memory), this memory is fast and also directly accessible by the CPU.

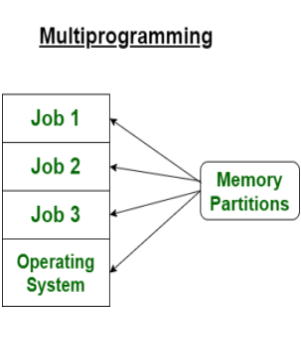

RAM is split into partitions. Each partition consists of an address and its contents (both in binary form).

The address will uniquely identify every location in the memory.

Loading data from permanent memory (hard drive), into the faster and directly accessible temporary memory (RAM), allows the CPU to operate much quicker.
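To tie the registers and the memory unit together, here is a small sketch of a stored-program machine in Python. The four opcodes (`LOAD`, `ADD`, `STORE`, `HALT`) and the memory layout are assumptions made up for this example; the point is only that instructions and data live in the same memory and that every access goes through MAR and MDR.

```python
def run(memory):
    """Run a tiny stored program; instructions and data share one memory."""
    pc = 0  # Program Counter: address of the next instruction
    ac = 0  # Accumulator: holds intermediate results
    while True:
        # Fetch
        mar = pc           # MAR holds the address to be accessed
        mdr = memory[mar]  # MDR receives the word read from memory
        cir = mdr          # CIR holds the instruction being executed
        pc += 1
        # Decode and execute
        opcode, operand = cir
        if opcode == "LOAD":
            mar = operand
            mdr = memory[mar]
            ac = mdr
        elif opcode == "ADD":
            mar = operand
            mdr = memory[mar]
            ac = ac + mdr
        elif opcode == "STORE":
            mar = operand
            mdr = ac
            memory[mar] = mdr
        elif opcode == "HALT":
            return memory


# Addresses 0-3 hold instructions, addresses 4-6 hold data.
memory = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", None),
    7,
    35,
    0,
]
run(memory)
print(memory[6])  # 42
```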

Introduction of Floating Point Representation

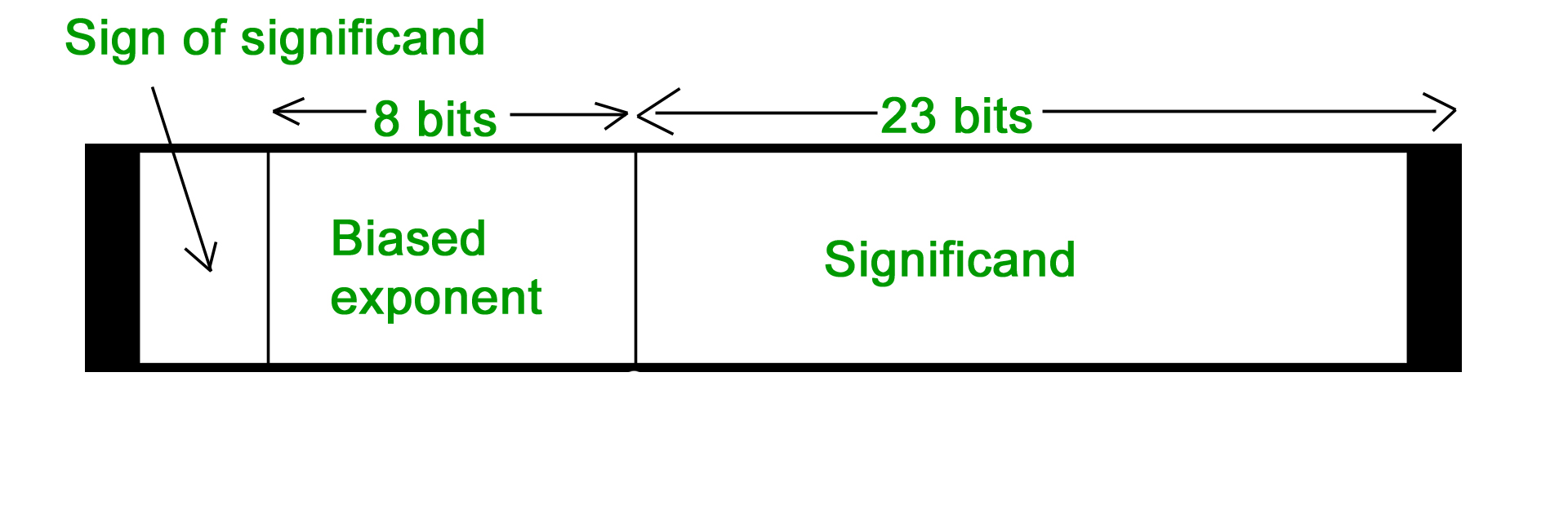

1. To convert the floating point into decimal, we have 3 elements in a 32-bit floating point representation:

i) Sign

ii) Exponent

iii) Mantissa


Sign bit is the first bit of the binary representation. ‘1’ implies negative number and ‘0’ implies positive number.

Example: 11000001110100000000000000000000 is a negative number, because its first bit is 1.

Exponent is decided by the next 8 bits of the binary representation. 127 is the bias for the 32-bit floating point representation. It is determined by 2^(k-1) - 1, where ‘k’ is the number of bits in the exponent field.

There are 3 exponent bits in 8-bit representation and 8 exponent bits in 32-bit representation.

Thus

bias = 3 for 8 bit conversion (2^(3-1) - 1 = 4 - 1 = 3)

bias = 127 for 32 bit conversion (2^(8-1) - 1 = 128 - 1 = 127)

Example: 01000001110100000000000000000000

Exponent bits: 10000011 = 131 (decimal)

131 - 127 = 4

Hence the exponent of 2 will be 4, i.e. 2^4 = 16.

Mantissa is calculated from the remaining 23 bits of the binary representation. It consists of an implicit leading ‘1’ and a fractional part, which is determined by weighting the mantissa bits by 1/2, 1/4, 1/8 and so on.

Example:

01000001110100000000000000000000

The mantissa bits are 10100000000000000000000, so the fractional part of the mantissa is given by:

1*(1/2) + 0*(1/4) + 1*(1/8) + 0*(1/16) + ... = 0.625

Thus the mantissa will be 1 + 0.625 = 1.625

The decimal number is hence given as: Sign * Exponent * Mantissa = (-1)^0 * 16 * 1.625 = 26
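The three decoding steps can be checked with a short Python sketch. The function name `decode_float32` is made up for this example, and it handles only normalized numbers (it ignores zero, denormals, infinities and NaN). The standard `struct` module is used as an independent cross-check.

```python
import struct


def decode_float32(bits):
    """Decode a 32-character bit string (normalized numbers only)."""
    sign = int(bits[0])                    # 1 bit
    exponent = int(bits[1:9], 2) - 127     # 8 bits, minus the bias
    fraction = sum(                        # 23 bits weighted 1/2, 1/4, ...
        int(bit) * 2 ** -(position + 1)
        for position, bit in enumerate(bits[9:])
    )
    return (-1) ** sign * 2 ** exponent * (1 + fraction)


bits = "01000001110100000000000000000000"
print(decode_float32(bits))  # 26.0

# Cross-check against the machine's own interpretation of the same 32 bits.
check = struct.unpack(">f", int(bits, 2).to_bytes(4, "big"))[0]
print(check)  # 26.0
```

Flipping the first bit to 1 gives the negative example used earlier, which decodes to -26.0.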

2. To convert the decimal into floating point, we have 3 elements in a 32-bit floating point representation:

i) Sign (MSB)

ii) Exponent (8 bits after MSB)

iii) Mantissa (Remaining 23 bits)

Sign bit is the first bit of the binary representation. ‘1’ implies negative number and ‘0’ implies positive number.

Example: To convert -17 into 32-bit floating point representation:

Sign bit = 1

Exponent is decided by the largest power of two, 2^n, that is smaller than or equal to the number. For 17, 16 is the nearest such 2^n. Hence the exponent of 2 will be 4, since 2^4 = 16. 127 is the bias for the 32-bit floating point representation. It is determined by 2^(k-1) - 1, where ‘k’ is the number of bits in the exponent field.

Thus bias = 127 for 32 bit (2^(8-1) - 1 = 128 - 1 = 127).

Now, 127 + 4 = 131, i.e. 10000011 in binary representation.

Mantissa: 17 in binary = 10001.

Move the binary point so that there is only one bit to its left, and adjust the exponent of 2 so that the value does not change. This is normalizing the number: 1.0001 x 2^4. Now take the fractional part and represent it as 23 bits by appending zeros.

00010000000000000000000
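Putting the sign, exponent and mantissa together gives the full 32-bit pattern. The sketch below follows the same steps for small nonzero integers only; `encode_float32` is a hypothetical helper written for this example, not a general converter (it does not handle fractions, zero or rounding). The result is compared with what Python's `struct` module produces.

```python
import struct


def encode_float32(value):
    """Encode a small nonzero integer as a 32-bit floating point bit string."""
    sign = "1" if value < 0 else "0"
    binary = bin(abs(int(value)))[2:]      # 17 -> '10001'
    exponent = len(binary) - 1             # binary point moves 4 places
    biased = format(exponent + 127, "08b") # 131 -> '10000011'
    mantissa = binary[1:].ljust(23, "0")   # drop leading 1, pad with zeros
    return sign + biased + mantissa


encoded = encode_float32(-17)
print(encoded[0], encoded[1:9], encoded[9:])
# 1 10000011 00010000000000000000000

# Cross-check against the machine representation of -17.0.
reference = format(int.from_bytes(struct.pack(">f", -17.0), "big"), "032b")
print(encoded == reference)  # True
```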

Hardware Implementation

The hardware implementation of logic microoperations requires that logic gates be inserted for each bit or pair of bits in the registers to perform the required logic function. Although there are 16 logic microoperations, most computers use only four, namely AND, OR, XOR (exclusive-OR), and complement, from which all others can be derived.

Logic circuit: Figure 4-10 shows one stage of a circuit that generates the four basic logic microoperations. It consists of four gates and a multiplexer. Each of the four logic operations is generated through a gate that performs the required logic. The outputs of the gates are applied to the data inputs of the multiplexer. The two selection inputs S1 and S0 choose one of the data inputs of the multiplexer and direct its value to the output. The diagram shows one typical stage with subscript i. For a logic circuit with n bits, the diagram must be repeated n times for i = 0, 1, 2, ... , n - 1. The selection variables are applied to all stages. The function table in Fig. 4-10(b) lists the logic microoperations obtained for each combination of the selection variables.
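The gate-plus-multiplexer stage can be modelled in Python as follows. Since the function table of Fig. 4-10(b) is not reproduced in these notes, the selection encoding used here (00 = AND, 01 = OR, 10 = XOR, 11 = complement of A) is an assumption, and the function names are invented for the example.

```python
def logic_stage(s1, s0, a, b):
    """One stage i: four gate outputs feed a 4-to-1 multiplexer."""
    gate_outputs = [
        a & b,  # AND gate
        a | b,  # OR gate
        a ^ b,  # XOR gate
        a ^ 1,  # inverter: complement of A (B is ignored)
    ]
    return gate_outputs[(s1 << 1) | s0]  # S1 S0 select one data input


def logic_unit(s1, s0, a_bits, b_bits):
    """Repeat the stage for every bit; the selection lines are shared."""
    return [logic_stage(s1, s0, a, b) for a, b in zip(a_bits, b_bits)]


A = [1, 1, 0, 0]
B = [1, 0, 1, 0]
print(logic_unit(0, 0, A, B))  # [1, 0, 0, 0]  AND
print(logic_unit(0, 1, A, B))  # [1, 1, 1, 0]  OR
print(logic_unit(1, 0, A, B))  # [0, 1, 1, 0]  XOR
print(logic_unit(1, 1, A, B))  # [0, 0, 1, 1]  complement of A
```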

Booth’s Algorithm

Booth’s algorithm gives a procedure for multiplying binary integers in signed 2’s complement representation in an efficient way, i.e., with fewer additions/subtractions. It operates on the fact that strings of 0’s in the multiplier require no addition but just shifting, and a string of 1’s in the multiplier from bit weight 2^k to weight 2^m can be treated as 2^(k+1) - 2^m.

As in all multiplication schemes, Booth’s algorithm requires examination of the multiplier bits and shifting of the partial product. Prior to the shifting, the multiplicand may be added to the partial product, subtracted from the partial product, or left unchanged according to the following rules:


The multiplicand is subtracted from the partial product upon encountering the first least significant 1 in a string of 1’s in the multiplier

The multiplicand is added to the partial product upon encountering the first 0 (provided that there was a previous ‘1’) in a string of 0’s in the multiplier.

The partial product does not change when the multiplier bit is identical to the previous multiplier bit.

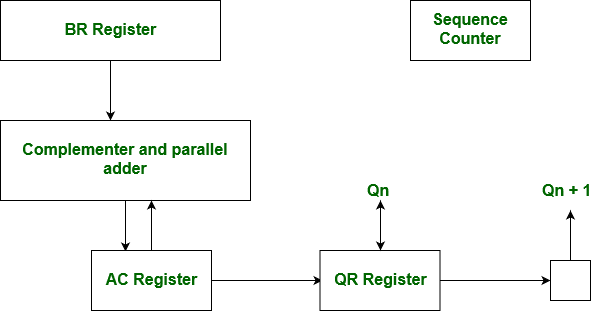

Hardware Implementation of Booth’s Algorithm – The hardware implementation of Booth’s algorithm requires the register configuration shown in the figure below.

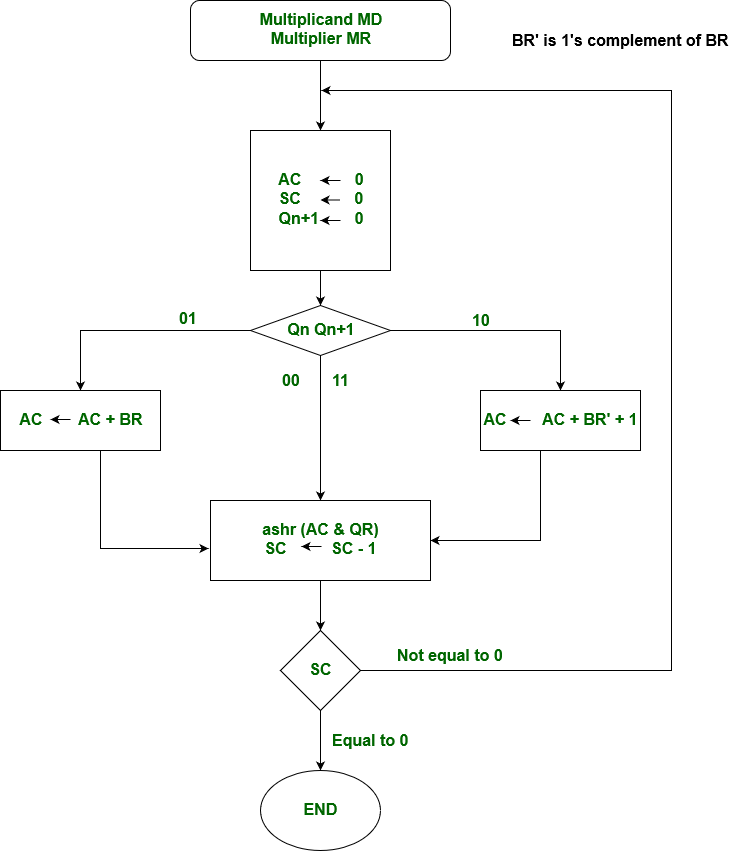

Booth’s Algorithm Flowchart –

We name the registers A, B and Q as AC, BR and QR respectively. Qn designates the least significant bit of the multiplier in the register QR. An extra flip-flop Qn+1 is appended to QR to facilitate a double inspection of the multiplier. The flowchart for Booth’s algorithm is shown below.

AC and the appended bit Qn+1 are initially cleared to 0, and the sequence counter SC is set to a number n equal to the number of bits in the multiplier. The two bits of the multiplier in Qn and Qn+1 are inspected. If the two bits are equal to 10, it means that the first 1 in a string of 1’s has been encountered. This requires subtraction of the multiplicand from the partial product in AC. If the two bits are equal to 01, it means that the first 0 in a string of 0’s has been encountered. This requires the addition of the multiplicand to the partial product in AC.

When the two bits are equal, the partial product does not change. An overflow cannot occur because the addition and subtraction of the multiplicand follow each other. As a consequence, the two numbers that are added always have opposite signs, a condition that excludes an overflow. The next step is to shift right the partial product and the multiplier (including Qn+1). This is an arithmetic shift right (ashr) operation, which shifts AC and QR to the right and leaves the sign bit in AC unchanged. The sequence counter is decremented and the computational loop is repeated n times.

Example – A numerical example of Booth’s algorithm is shown below for n = 4. It shows the step-by-step multiplication of -5 and -7. Here MD is the multiplicand (held in BR) and MR is the multiplier (held in QR).

MD = -5 = 1011, MD' + 1 = 0101 (the 2’s complement of MD)

MR = -7 = 1001

The explanation of the first step is as follows:

Initially AC = 0000, MR = 1001, Qn+1 = 0, SC = 4

Qn Qn+1 = 10, so we do AC + (MD)' + 1, which gives AC = 0101

On right shifting AC and MR, we get AC = 0010, MR = 1100 and Qn+1 = 1
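The whole loop can be traced with a short Python sketch that keeps AC, QR and the extra bit Qn+1 as n-bit values. The function name `booth_multiply` and the variable names are chosen for this example; the sketch assumes both operands fit in n-bit 2’s complement and that the multiplicand is not the most negative n-bit value.

```python
def booth_multiply(multiplicand, multiplier, n):
    """Multiply two n-bit 2's complement integers with Booth's algorithm."""
    mask = (1 << n) - 1
    br = multiplicand & mask       # BR holds the multiplicand
    ac = 0                         # AC holds the partial product
    qr = multiplier & mask         # QR holds the multiplier
    q_extra = 0                    # the appended flip-flop Qn+1
    for _ in range(n):             # SC counts n iterations
        pair = (qr & 1, q_extra)   # inspect Qn and Qn+1
        if pair == (1, 0):         # first 1 in a string of 1's: subtract
            ac = (ac - br) & mask
        elif pair == (0, 1):       # first 0 after a string of 1's: add
            ac = (ac + br) & mask
        # Arithmetic shift right of AC, QR and Qn+1 as one unit.
        q_extra = qr & 1
        qr = (qr >> 1) | ((ac & 1) << (n - 1))
        ac = (ac >> 1) | (ac & (1 << (n - 1)))  # sign bit of AC is kept
    product = (ac << n) | qr       # 2n-bit result in AC:QR
    if product & (1 << (2 * n - 1)):
        product -= 1 << (2 * n)    # interpret as signed 2's complement
    return product


print(booth_multiply(-5, -7, 4))  # 35
print(booth_multiply(3, -4, 4))   # -12
```

After the first iteration for -5 and -7 the registers hold AC = 0010, QR = 1100 and Qn+1 = 1, matching the first step of the numerical example, and the final AC:QR value is 0010 0011 = 35.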

Accumulator:

The accumulator is a register that is part of the arithmetic and logic unit (ALU); in an 8-bit microprocessor such as the Intel 8085 it is an 8-bit register (it can store 8 bits of data). After an arithmetic or logical operation is performed, the result is stored in the accumulator. The accumulator is also referred to as register A.

RISC and CISC

Reduced Instruction Set Architecture (RISC) –

The main idea behind RISC is to make the hardware simpler by using an instruction set composed of a few basic steps for loading, evaluating, and storing operations. For example, a load command only loads data and a store command only stores data.

Complex Instruction Set Architecture (CISC) –

The main idea is that a single instruction does all the loading, evaluating, and storing operations. For example, a multiplication command loads the data, evaluates the product and stores the result, hence it is complex.

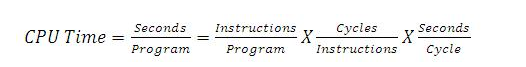

Both approaches try to increase the CPU performance

RISC: Reduce the cycles per instruction at the cost of the number of instructions per program.

CISC: The CISC approach attempts to minimize the number of instructions per program but at the cost of increase in number of cycles per instruction.

Earlier, when programming was done in assembly language, a need was felt to make each instruction do more work, because programming in assembly was tedious and error-prone; this is how the CISC architecture evolved. With the rise of high-level languages, dependency on assembly reduced and the RISC architecture prevailed.

Characteristic of RISC –

Simpler instruction, hence simple instruction decoding.

Instructions fit within one word.

Instruction takes a single clock cycle to get executed.

More general-purpose registers.

Simple Addressing Modes.

Fewer data types.

Pipelining can be achieved.

Characteristic of CISC –

Complex instruction, hence complex instruction decoding.

Instructions are larger than one-word size.

Instruction may take more than a single clock cycle to get executed.

Fewer general-purpose registers, as operations can be performed in memory itself.

Complex Addressing Modes.

More Data types.

Example – Suppose we have to add two 8-bit numbers:

CISC approach: There will be a single command or instruction for this, like ADD, which will perform the whole task.

RISC approach: Here the programmer first writes load commands to load the data into registers, then uses a suitable operation on the registers, and then stores the result in the desired location.

So the add operation is divided into parts, i.e. load, operate and store. Because of this, RISC programs are longer and require more memory to store, but the processor requires fewer transistors because its instructions are less complex.

Difference –

| RISC | CISC |
|---|---|
| Focus on software | Focus on hardware |
| Uses only hardwired control unit | Uses both hardwired and microprogrammed control unit |
| Transistors are used for more registers | Transistors are used for storing complex instructions |
| Fixed sized instructions | Variable sized instructions |
| Can perform only register-to-register arithmetic operations | Can perform REG to REG, REG to MEM or MEM to MEM operations |
| Requires more registers | Requires fewer registers |
| Code size is large | Code size is small |
| An instruction executes in a single clock cycle | An instruction takes more than one clock cycle |
| An instruction fits in one word | Instructions are larger than the size of one word |

Instruction Formats (Zero, One, Two and Three Address Instruction)

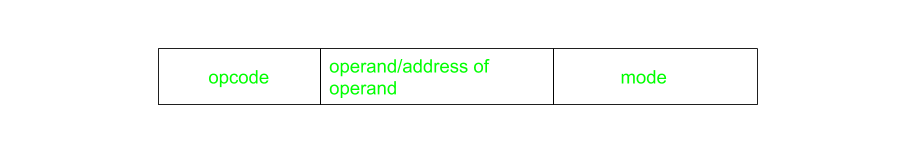

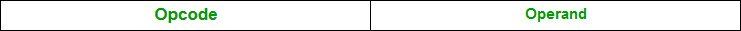

A computer performs a task based on the instructions provided. An instruction in a computer comprises groups of bits called fields. Since everything in a computer is represented in 0s and 1s, each field carries different information, and based on the fields the CPU decides what to perform. The most common fields are:

The operation field, which specifies the operation to be performed, like addition.

The address field, which contains the location of the operand, i.e., a register or memory location.

The mode field, which specifies how the operand is to be found.

Instruction is of variable length depending upon the number of addresses it contains. Generally, CPU organization is of three types based on the number of address fields:

Single Accumulator organization

General register organization

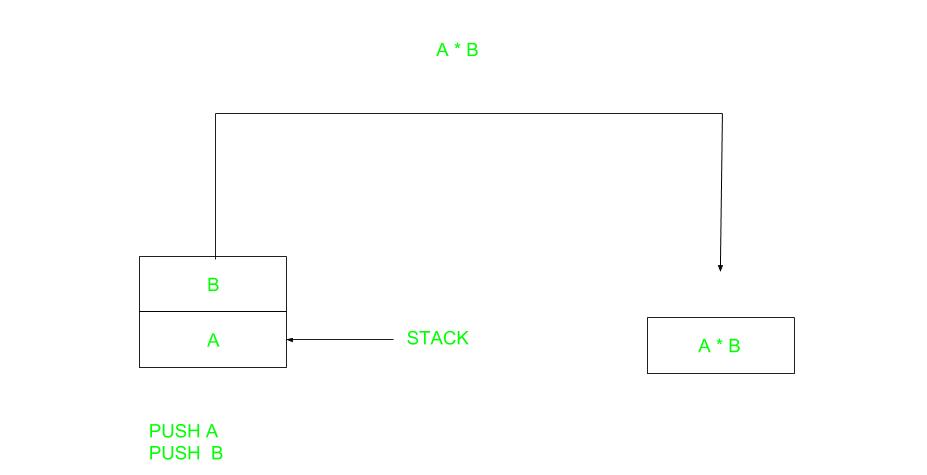

Stack organization

In the first organization, the operation is done involving a special register called the accumulator. In second on multiple registers are used for the computation purpose. In the third organization the work on stack basis operation due to which it does not contain any address field. Only a single organization doesn’t need to be applied, a blend of various organizations is mostly what we see generally.

Based on the number of address, instructions are classified as:

Note that we will use X = (A+B)*(C+D) expression to showcase the procedure.

1. Zero Address Instructions –

A stack-based computer does not use the address field in the instruction. To evaluate an expression, it is first converted to reverse Polish notation, i.e. postfix notation.

Expression: X = (A+B)*(C+D)

Postfix: X = AB+CD+*

TOP means top of stack

M[X] is any memory location

| Instruction | Operation |
|---|---|
| PUSH A | TOP = A |
| PUSH B | TOP = B |
| ADD | TOP = A+B |
| PUSH C | TOP = C |
| PUSH D | TOP = D |
| ADD | TOP = C+D |
| MUL | TOP = (C+D)*(A+B) |
| POP X | M[X] = TOP |
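The zero-address program can be run on a tiny stack machine written in Python. The interpreter `run_stack_program` and the sample values for A, B, C and D are assumptions made for illustration; only the instruction sequence comes from the listing above.

```python
def run_stack_program(program, memory):
    """Execute zero-address instructions; ADD and MUL use only the stack."""
    stack = []
    for instruction in program:
        op, *args = instruction.split()
        if op == "PUSH":
            stack.append(memory[args[0]])   # TOP = M[address]
        elif op == "POP":
            memory[args[0]] = stack.pop()   # M[address] = TOP
        elif op == "ADD":
            right, left = stack.pop(), stack.pop()
            stack.append(left + right)
        elif op == "MUL":
            right, left = stack.pop(), stack.pop()
            stack.append(left * right)
        else:
            raise ValueError(f"unknown instruction: {instruction}")
    return memory


memory = {"A": 1, "B": 2, "C": 3, "D": 4, "X": 0}  # sample values
program = ["PUSH A", "PUSH B", "ADD", "PUSH C", "PUSH D", "ADD", "MUL", "POP X"]
run_stack_program(program, memory)
print(memory["X"])  # 21, i.e. (1+2)*(3+4)
```

Note that only PUSH and POP carry an address; ADD and MUL name no operands at all, which is why these are called zero-address instructions.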

2. One Address Instructions –

This uses an implied ACCUMULATOR register for data manipulation. One operand is in the accumulator and the other is in a register or memory location. Implied means that the CPU already knows that one operand is in the accumulator, so there is no need to specify it.

Expression: X = (A+B)*(C+D)

AC is accumulator

M[] is any memory location

M[T] is temporary location

| Instruction | Operation |
|---|---|
| LOAD A | AC = M[A] |
| ADD B | AC = AC + M[B] |
| STORE T | M[T] = AC |
| LOAD C | AC = M[C] |
| ADD D | AC = AC + M[D] |
| MUL T | AC = AC * M[T] |
| STORE X | M[X] = AC |
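The same expression on an accumulator machine can be sketched as follows. The interpreter `run_accumulator_program` and the sample operand values are hypothetical; every instruction names exactly one memory address, and the accumulator is the implied second operand.

```python
def run_accumulator_program(program, memory):
    """Execute one-address instructions with an implied accumulator AC."""
    ac = 0
    for instruction in program:
        op, address = instruction.split()
        if op == "LOAD":
            ac = memory[address]
        elif op == "ADD":
            ac = ac + memory[address]
        elif op == "MUL":
            ac = ac * memory[address]
        elif op == "STORE":
            memory[address] = ac
        else:
            raise ValueError(f"unknown instruction: {instruction}")
    return memory


memory = {"A": 1, "B": 2, "C": 3, "D": 4, "T": 0, "X": 0}  # sample values
program = [
    "LOAD A", "ADD B", "STORE T",
    "LOAD C", "ADD D", "MUL T",
    "STORE X",
]
run_accumulator_program(program, memory)
print(memory["T"], memory["X"])  # 3 21
```

The temporary location T is needed because there is only one accumulator: A+B has to be parked in memory while C+D is computed.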

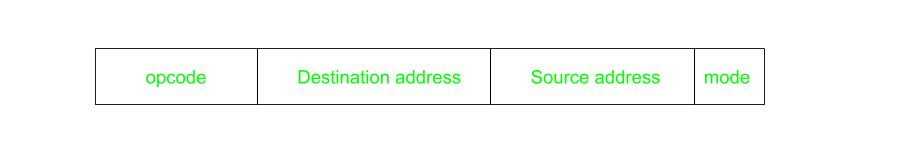

3.Two Address Instructions –

This is common in commercial computers. Here two addresses can be specified in the instruction. Unlike earlier in one address instruction, the result was stored in the accumulator, here the result can be stored at different locations rather than just accumulators, but require more number of bit to represent address.

Here destination address can also contain operand. Expression: X = (A+B)*(C+D) R1, R2 are registers M[] is any memory location

MOV R1, A R1 = M[A]

ADD R1, B R1 = R1 + M[B]

MOV R2, C R2 = M[C]

ADD R2, D R2 = R2 + M[D]

MUL R1, R2 R1 = R1 * R2

MOV X, R1 M[X] = R1

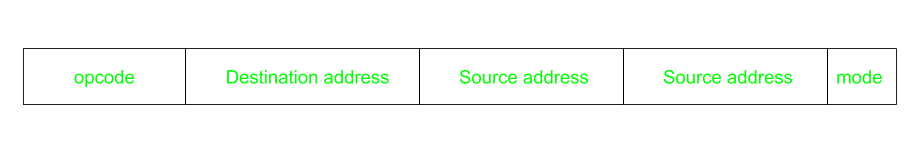

4. Three Address Instructions –

These have three address fields, each specifying a register or a memory location. Programs are much shorter, but the number of bits per instruction increases. These instructions make programs much easier to write, but that does not mean the programs run much faster: each instruction carries more information, yet each micro-operation (changing the content of a register, loading an address onto the address bus, etc.) is still performed in one cycle.

Expression: X = (A+B)*(C+D)

R1, R2 are registers

M[] is any memory location

ADD R1, A, B R1 = M[A] + M[B]

ADD R2, C, D R2 = M[C] + M[D]

MUL X, R1, R2 M[X] = R1 * R2

UNIT 2

Introduction to Parallel Computing

Before diving into Parallel Computing, let’s first look at how computer software was traditionally executed and why that approach fell short for modern workloads.

Computer software was written conventionally for serial computing. This meant that to solve a problem, an algorithm divides the problem into smaller instructions. These discrete instructions are then executed on the Central Processing Unit of a computer one by one. The next instruction starts only after the previous one has finished.

A real-life example of this would be people standing in a queue waiting for a movie ticket when there is only one cashier. The cashier gives tickets to the people one by one. The complexity of this situation increases when there are 2 queues and only one cashier.

So, in short, Serial Computing works as follows:

1. A problem statement is broken into discrete instructions.

2. The instructions are executed one by one.

3. Only one instruction is executed at any moment of time.

Look at point 3. This caused a huge problem in the computing industry, as only one instruction was executed at any moment of time. It was a huge waste of hardware resources, because only one part of the hardware was busy for a particular instruction at a given time. As problem statements grew heavier and bulkier, so did the time needed to execute them. Examples of processors from this period are the Pentium 3 and Pentium 4.

Now let’s come back to our real-life problem. We could definitely say that complexity will decrease when there are 2 queues and 2 cashiers giving tickets to 2 persons simultaneously. This is an example of Parallel Computing.

Parallel Computing :

It is the use of multiple processing elements simultaneously to solve a problem. The problem is broken down into instructions that are solved concurrently, with every resource assigned to the work running at the same time.

Advantages of Parallel Computing over Serial Computing are as follows:

It saves time and money as many resources working together will reduce the time and cut potential costs.

It can be impractical to solve larger problems on Serial Computing.

It can take advantage of non-local resources when the local resources are finite.

Serial Computing ‘wastes’ the potential computing power, whereas Parallel Computing makes better use of the hardware.

Types of Parallelism:

Bit-level parallelism –

It is the form of parallel computing that is based on increasing the processor’s word size. It reduces the number of instructions that the system must execute in order to perform a task on large-sized data.

Example: Consider a scenario where an 8-bit processor must compute the sum of two 16-bit integers. It must first sum up the 8 lower-order bits, then add the 8 higher-order bits, thus requiring two instructions to perform the operation. A 16-bit processor can perform the operation with just one instruction.
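The two-step addition described in the example can be sketched as follows. This is only an illustration: the function name `add16_with_8bit_alu` is hypothetical, and Python integers are masked to 8 bits to imitate an 8-bit ALU.

```python
# Sketch: adding two 16-bit integers with 8-bit additions only.

def add16_with_8bit_alu(x, y):
    """Return (x + y) mod 2**16 using two 8-bit additions and a carry."""
    # Step 1: add the 8 lower-order bits; bit 8 of the sum is the carry.
    low_sum = (x & 0xFF) + (y & 0xFF)
    carry = low_sum >> 8
    # Step 2: add the 8 higher-order bits plus the carry from step 1.
    high_sum = ((x >> 8) & 0xFF) + ((y >> 8) & 0xFF) + carry
    return ((high_sum & 0xFF) << 8) | (low_sum & 0xFF)

print(hex(add16_with_8bit_alu(0x12FF, 0x0001)))  # 0x1300
```

A 16-bit processor performs the same work in a single add instruction, which is the saving that bit-level parallelism refers to.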

Instruction-level parallelism –

Without instruction-level parallelism, a processor can issue at most one instruction per clock cycle. Instructions that do not depend on each other can be re-ordered and grouped so that they are executed concurrently without affecting the result of the program. This is called instruction-level parallelism.

Task Parallelism –

Task parallelism decomposes a task into subtasks and then allocates each subtask to a processor for execution. The processors execute the subtasks concurrently.

Data-level parallelism (DLP) –

Instructions from a single stream operate concurrently on several data items. It is limited by irregular data manipulation patterns and by memory bandwidth.

Why parallel computing?

The real world is dynamic in nature, i.e. many things happen at the same time in different places. The resulting data is far too large to manage serially.

Real-world data needs more dynamic simulation and modeling, and for achieving the same, parallel computing is the key.

Parallel computing provides concurrency and saves time and money.

Complex, large datasets and their management can be handled practically only with a parallel computing approach.

Ensures the effective utilization of the resources. The hardware is guaranteed to be used effectively whereas in serial computation only some part of the hardware was used and the rest rendered idle.

Also, it is impractical to implement real-time systems using serial computing.

Applications of Parallel Computing:

Databases and Data mining.

Real-time simulation of systems.

Science and Engineering.

Advanced graphics, augmented reality, and virtual reality.

Limitations of Parallel Computing:

It introduces issues such as communication and synchronization between multiple sub-tasks and processes, which are difficult to handle.

The algorithms must be designed in such a way that they can be handled in a parallel mechanism.

The algorithms or programs must have low coupling and high cohesion, but it is difficult to create such programs.

Only more technically skilled and expert programmers can code a parallelism-based program well.

Future of Parallel Computing: The computing landscape has undergone a great transition from serial computing to parallel computing. Tech giants such as Intel have already taken a step towards parallel computing by employing multicore processors. Parallel computation will change the way computers work in the future. With the world more connected than ever before, Parallel Computing plays an important role in helping us stay that way. With faster networks, distributed systems, and multi-processor computers, it becomes even more necessary.

Architecture of 8086

A Microprocessor is an Integrated Circuit with all the functions of a CPU. However, it cannot be used stand-alone since, unlike a microcontroller, it has no memory or peripherals.

8086 does not have a RAM or ROM inside it. However, it has internal registers for storing intermediate and final results and interfaces with memory located outside it through the System Bus.

The 8086 is a 16-bit integer processor in a 40-pin Dual Inline Package (DIP) IC.

The size of the internal registers (present within the chip) indicates how much information the processor can operate on at a time (in this case 16 bits) and how it moves data around internally within the chip, sometimes also referred to as the internal data bus.

8086 provides the programmer with 14 internal registers, each 16 bits or 2 Bytes wide.

Memory segmentation:

To increase execution speed and fetching speed, 8086 segments the memory.

Its 20-bit address bus can address 1 MB of memory, which is divided into 16 segments of 64 KB each.

At any given time, 8086 works with only four 64 KB segments within the whole 1 MB memory.

The internal architecture of Intel 8086 is divided into 2 units: the Bus Interface Unit (BIU) and the Execution Unit (EU). These are explained below.

1. The Bus Interface Unit (BIU):

It provides the interface of 8086 to external memory and I/O devices via the System Bus. It performs various machine cycles such as memory read, I/O read etc. to transfer data between memory and I/O devices.

BIU performs the following functions-

It generates the 20 bit physical address for memory access.

It fetches instructions from the memory.

It transfers data to and from the memory and I/O.

It maintains the 6-byte prefetch instruction queue (which supports pipelining).

BIU mainly contains the 4 Segment registers, the Instruction Pointer, a prefetch queue and an Address Generation Circuit.

Instruction Pointer (IP):

It is a 16 bit register. It holds the offset of the next instruction in the Code Segment.

IP is incremented after every instruction byte is fetched.

IP gets a new value whenever a branch instruction occurs.

CS is multiplied by 10H to give the 20 bit base address of the Code Segment.

Address of the next instruction is calculated as CS x 10H + IP.

Example: If CS = 4321H and IP = 1000H, then CS x 10H = 43210H, and 43210H + 1000H = 44210H.

This is the physical address of the instruction.
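The address calculation above can be checked with a short sketch. The function name `physical_address` is hypothetical; multiplying by 10H is written as a left shift by 4 bits, and the result is masked to 20 bits because the 8086 address bus has only 20 lines.

```python
# Sketch of the 8086 physical address calculation: segment x 10H + offset.

def physical_address(segment, offset):
    """Combine a 16-bit segment and a 16-bit offset into a 20-bit address."""
    # Shifting left by 4 bits is the same as multiplying by 10H (16).
    # The mask keeps the result within the 20 address lines.
    return ((segment << 4) + offset) & 0xFFFFF

print(hex(physical_address(0x4321, 0x1000)))  # 0x44210
```

The same function applies to any segment register and offset pair, not only CS and IP.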

Code Segment register:

CS holds the base address for the Code Segment. All programs are stored in the Code Segment and accessed via the IP.

Data Segment register:

DS holds the base address for the Data Segment.

Stack Segment register:

SS holds the base address for the Stack Segment.

Extra Segment register:

ES holds the base address for the Extra Segment.

Address Generation Circuit:

The BIU has a Physical Address Generation Circuit.

It generates the 20 bit physical address using Segment and Offset addresses using the formula:

Physical Address = Segment Address x 10H + Offset Address

6 Byte Pre-fetch Queue:

It is a 6 byte queue (FIFO).

Fetching the next instruction (by BIU from CS) while executing the current instruction is called pipelining.

The queue is flushed whenever a branch instruction occurs.

2. The Execution Unit (EU):

The main components of the EU are General purpose registers, the ALU, Special purpose registers, Instruction Register and Instruction Decoder and the Flag/Status Register.

Fetches instructions from the Queue in BIU, decodes and executes arithmetic and logic operations using the ALU.

Sends control signals for internal data transfer operations within the microprocessor.

Sends request signals to the BIU to access the external module.

It operates with respect to T-states (clock cycles) and not machine cycles.

8086 has four 16 bit general purpose registers: AX, BX, CX and DX. They store intermediate values during execution. Each of these has two 8 bit parts (higher and lower).

AX – This is the accumulator. It is of 16 bits and is divided into two 8-bit registers AH and AL to also perform 8-bit instructions.

It is generally used for arithmetical and logical instructions but in 8086 microprocessor it is not mandatory to have accumulator as the destination operand.

Example: ADD AX, AX (AX = AX + AX)

BX – This is the base register. It is of 16 bits and is divided into two 8-bit registers BH and BL to also perform 8-bit instructions.

It is used to store the offset (address) of a memory operand.

Example: MOV BL, [500] (BL = the byte stored at offset 500H)

CX – This is the counter register. It is of 16 bits and is divided into two 8-bit registers CH and CL to also perform 8-bit instructions.

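It is used as the counter in looping and in shift and rotate instructions.

Example: MOV CX, 0005 followed by a LOOP instruction (LOOP decrements CX and jumps back while CX is not zero)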

DX – This is the data register. It is of 16 bits and is divided into two 8-bit registers DH and DL to also perform 8-bit instructions.

It is used in multiplication and in input/output port addressing.

Example: MUL BX (DX:AX = AX * BX, with the upper 16 bits of the result in DX and the lower 16 bits in AX)
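As a rough illustration of how a 16-bit multiplication spreads its 32-bit result over DX and AX, consider the sketch below. The function name `mul16` and the sample operand values are assumptions for the example; it models only the unsigned MUL case.

```python
# Sketch: unsigned 16-bit x 16-bit multiply producing a 32-bit result in DX:AX.

def mul16(ax, bx):
    """Return (DX, AX) after an unsigned multiply of AX by BX."""
    product = (ax & 0xFFFF) * (bx & 0xFFFF)   # up to 32 bits wide
    dx = (product >> 16) & 0xFFFF             # upper 16 bits go to DX
    ax_out = product & 0xFFFF                 # lower 16 bits stay in AX
    return dx, ax_out

dx, ax = mul16(0x1234, 0x0100)
print(hex(dx), hex(ax))  # 0x12 0x3400
```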

SP – This is the stack pointer. It is of 16 bits.

It points to the topmost item of the stack. If the stack is empty the stack pointer will be (FFFE)H. Its offset address is relative to the stack segment.

BP – This is the base pointer. It is of 16 bits.

It is primarily used in accessing parameters passed via the stack. Its offset address is relative to the stack segment.

SI – This is the source index register. It is of 16 bits.

It is used in the pointer addressing of data and as a source in some string related operations. Its offset is relative to the data segment.

DI – This is the destination index register. It is of 16 bits.

It is used in the pointer addressing of data and as a destination in some string related operations. Its offset is relative to the extra segment.

Arithmetic Logic Unit (16 bit):

Performs 8 and 16 bit arithmetic and logic operations.

Special purpose registers (16-bit):

Stack Pointer:

Points to Stack top. Stack is in Stack Segment, used during instructions like PUSH, POP, CALL, RET etc.

Base Pointer:

BP can hold offset address of any location in the stack segment. It is used to access random locations of the stack.

Source Index:

It holds offset address in Data Segment during string operations.

Destination Index:

It holds offset address in Extra Segment during string operations.

Instruction Register and Instruction Decoder:

The EU fetches an opcode from the queue into the instruction register. The instruction decoder decodes it and sends the information to the control circuit for execution.

Flag/Status register (16 bits):

It has 9 flags that help change or recognize the state of the microprocessor.

6 Status flags:

carry flag(CF)

parity flag(PF)

auxiliary carry flag(AF)

zero flag(ZF)

sign flag(SF)

overflow flag(OF)

Status flags are updated after every arithmetic and logic operation.

3 Control flags:

trap flag(TF)

interrupt flag(IF)

direction flag(DF)

Some flags can be set or reset directly using instructions such as CLC and STC (carry flag), CLD and STD (direction flag), and CLI and STI (interrupt flag).

The Control flags are used to control certain operations.
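To make the six status flags concrete, here is a minimal sketch of how they could be derived for an 8-bit addition. The function name `add8_flags` is hypothetical, and the code models only the addition case, not the full behaviour of the 8086 for every instruction.

```python
# Sketch: status flags after an 8-bit addition.

def add8_flags(a, b):
    """Return (result, flags) for the 8-bit addition a + b."""
    a &= 0xFF
    b &= 0xFF
    total = a + b
    result = total & 0xFF
    flags = {
        "CF": int(total > 0xFF),                         # carry out of bit 7
        "PF": int(bin(result).count("1") % 2 == 0),      # even number of 1 bits
        "AF": int((a & 0x0F) + (b & 0x0F) > 0x0F),       # carry out of bit 3
        "ZF": int(result == 0),                          # result is zero
        "SF": result >> 7,                               # copy of the sign bit
        # Overflow: both operands have the same sign, the result has the other.
        "OF": int(((a ^ result) & (b ^ result) & 0x80) != 0),
    }
    return result, flags

result, flags = add8_flags(0x7F, 0x01)
print(hex(result), flags)
```

Adding 7FH and 01H gives 80H: there is no carry, but the sign bit changes, so SF and OF are set while CF stays clear.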

Processor Organization

Introduction to Parallelism


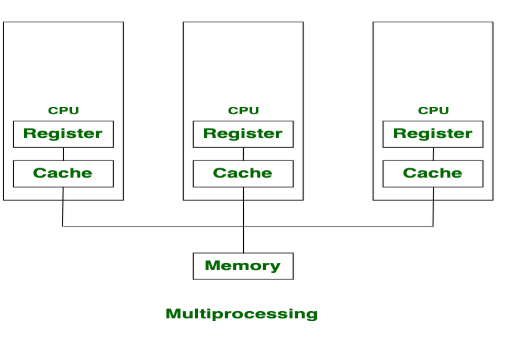


Computer Arithmetic

Sign Magnitude

Sign magnitude is a very simple representation of negative numbers. In sign magnitude the first bit is dedicated to represent the sign and hence it is called sign bit.

Sign bit ‘1’ represents negative sign.

Sign bit ‘0’ represents positive sign.

In sign magnitude representation of an n-bit number, the first bit represents the sign and the remaining n-1 bits represent the magnitude of the number.

For example,

+25 = 011001

Where 11001 = 25

And 0 for ‘+’

-25 = 111001

Where 11001 = 25

And 1 for ‘-‘.

Range of numbers represented by sign magnitude method = $-(2^{n-1}-1)$ to $+(2^{n-1}-1)$ (for an n-bit number)

But there is one problem in sign magnitude and that is we have two representations of 0

+0 = 000000

– 0 = 100000
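The sign magnitude encoding described above can be sketched as follows. The helper name `to_sign_magnitude` is hypothetical and the code is only meant to reproduce the examples in the notes.

```python
# Sketch: sign magnitude encoding of an integer in a fixed number of bits.

def to_sign_magnitude(value, bits):
    """Return the sign magnitude bit string of value using `bits` bits."""
    limit = (1 << (bits - 1)) - 1            # largest magnitude: 2^(n-1) - 1
    if abs(value) > limit:
        raise ValueError(f"{value} does not fit in {bits} bits")
    sign = "1" if value < 0 else "0"         # 1 = negative, 0 = positive
    magnitude = format(abs(value), f"0{bits - 1}b")
    return sign + magnitude

print(to_sign_magnitude(25, 6))   # 011001
print(to_sign_magnitude(-25, 6))  # 111001
```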

2’s complement method

To represent a negative number in this form, first we need to take the 1’s complement of the number represented in simple positive binary form and then add 1 to it.

For example:

$(8)_{10} = (1000)_2$

1’s complement of 1000 = 0111

Adding 1 to it, 0111 + 1 = 1000

So, $(-8)_{10} = (1000)_2$

Please don’t get confused between $(8)_{10} = 1000$ and $(-8)_{10} = 1000$: with 4 bits in 2’s complement, we can’t represent a positive number larger than 7. So, 1000 represents -8 only.

Range of numbers represented by 2’s complement = $-2^{n-1}$ to $2^{n-1}-1$ (for an n-bit number)
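The "invert the bits, then add 1" procedure can be sketched in code. The function name `twos_complement` is hypothetical; the range check uses the 2's complement range for n bits given above.

```python
# Sketch: 2's complement encoding via 1's complement plus 1.

def twos_complement(value, bits):
    """Return the 2's complement bit string of value using `bits` bits."""
    low, high = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    if not low <= value <= high:
        raise ValueError(f"{value} does not fit in {bits} bits")
    if value >= 0:
        return format(value, f"0{bits}b")
    mask = (1 << bits) - 1
    ones = abs(value) ^ mask                 # 1's complement of the magnitude
    return format((ones + 1) & mask, f"0{bits}b")  # add 1

print(twos_complement(-8, 4))  # 1000
print(twos_complement(7, 4))   # 0111
```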

Floating point representation of numbers

32-bit representation floating point numbers IEEE standard

Normalization

Floating point numbers are usually normalized

Exponent is adjusted so that leading bit (MSB) of mantissa is 1

Since it is always 1 there is no need to store it

This is similar to scientific notation in the decimal system, where numbers are normalized to give a single digit before the decimal point, e.g. $3.123 \times 10^3$

For example, we represent 3.625 in 32 bit format.

Changing 3 in binary=11

Changing .625 in binary

.625 × 2 = 1.25, integer part 1

.25 × 2 = 0.5, integer part 0

.5 × 2 = 1.0, integer part 1

So .625 = .101 in binary

Writing in binary exponent form

$3.625 = 11.101 \times 2^0$

On normalizing

$11.101 \times 2^0 = 1.1101 \times 2^1$

On biasing exponent = 127 + 1 = 128

$(128)_{10} = (10000000)_2$

For getting significand

Digits after decimal = 1101

Expanding to 23 bit = 11010000000000000000000

Setting sign bit

As it is a positive number, sign bit = 0

Finally we arrange according to representation

Sign bit = 0, exponent = 10000000, significand = 11010000000000000000000

0 10000000 11010000000000000000000

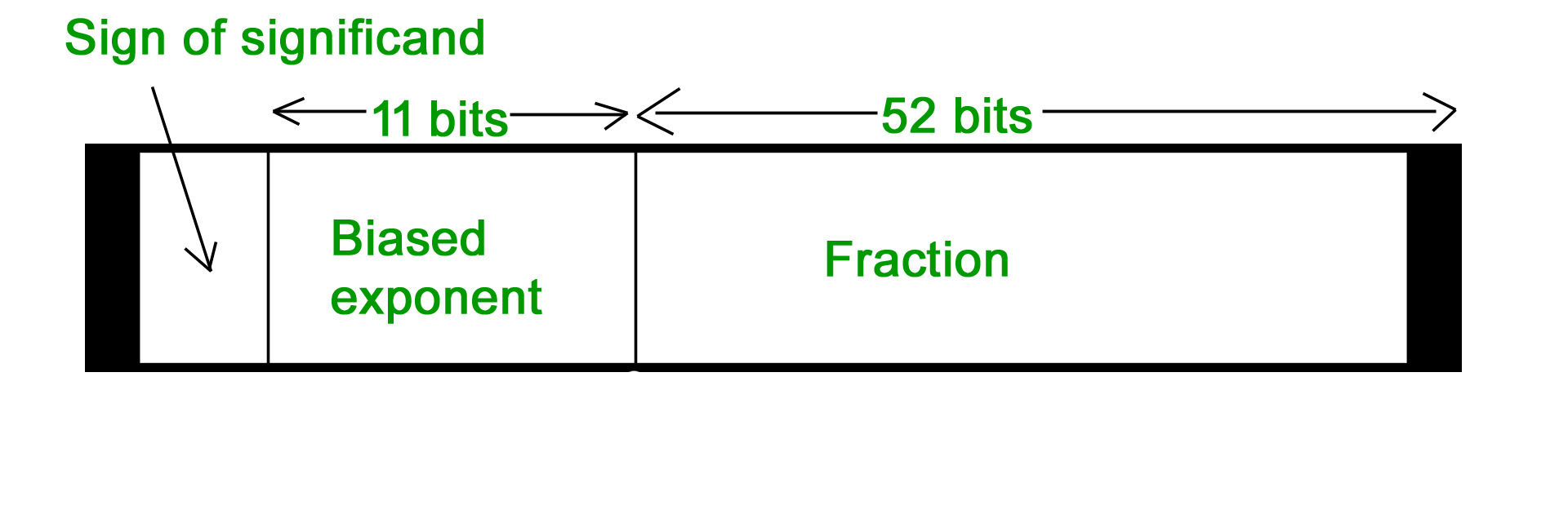
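The hand conversion above can be cross-checked with Python's standard `struct` module, which packs a float into its IEEE 754 single precision bit pattern. The helper name `float32_fields` is hypothetical; `struct.pack` and `struct.unpack` are standard library calls.

```python
# Sketch: split a number into its IEEE 754 single precision fields.
import struct

def float32_fields(x):
    """Return (sign, exponent, significand) bit strings of x as a 32-bit float."""
    # Pack as a big-endian 32-bit float, then reinterpret the bytes as an integer.
    (raw,) = struct.unpack(">I", struct.pack(">f", x))
    bits = format(raw, "032b")
    return bits[0], bits[1:9], bits[9:]

print(float32_fields(3.625))
# ('0', '10000000', '11010000000000000000000')
```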

64-bit representation floating point numbers IEEE standard

Again we follow the same procedure up to normalization. After that, we add 1023 to bias the exponent.

For example, we represent -3.625 in 64 bit format.

Changing 3 in binary = 11

Changing .625 in binary

.625 × 2 = 1.25, integer part 1

.25 × 2 = 0.5, integer part 0

.5 × 2 = 1.0, integer part 1

Writing in binary exponent form

$3.625 = 11.101 \times 2^0$

On normalizing

$11.101 \times 2^0 = 1.1101 \times 2^1$

On biasing exponent 1023 + 1 = 1024

$(1024)_{10} = (10000000000)_2$

So 11 bit exponent = 10000000000

52 bit significand = 1101 followed by 48 zeros, making a total of 52 bits

Setting sign bit = 1 (number is negative)

So, final representation

1 10000000000 1101 (followed by 48 zeros to make a total of 52 bits)
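The 64-bit result can be verified the same way with the standard `struct` module. The helper name `float64_fields` is hypothetical; the field widths (1, 11 and 52 bits) follow the notes.

```python
# Sketch: split a number into its IEEE 754 double precision fields.
import struct

def float64_fields(x):
    """Return (sign, exponent, significand) bit strings of x as a 64-bit float."""
    # Pack as a big-endian 64-bit float, then reinterpret the bytes as an integer.
    (raw,) = struct.unpack(">Q", struct.pack(">d", x))
    bits = format(raw, "064b")
    return bits[0], bits[1:12], bits[12:]

sign, exponent, significand = float64_fields(-3.625)
print(sign, exponent, significand[:8], len(significand))
# 1 10000000000 11010000 52
```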

Converting floating point into decimal

Let’s convert a FP number into decimal

1 01111100 11000000000000000000000

Floating Point Representation

To convert the floating point into decimal, we have 3 elements in a 32-bit floating point representation:

i) Sign

ii) Exponent

iii) Mantissa

Sign bit is the first bit of the binary representation. ‘1’ implies negative number and ‘0’ implies positive number.

Example: 11000001110100000000000000000000 is a negative number, because its first bit is 1.

Exponent is decided by the next 8 bits of the binary representation. The bias for the 32 bit floating point representation is 127. The bias is given by $2^{k-1} - 1$, where k is the number of bits in the exponent field.

There are 3 exponent bits in 8-bit representation and 8 exponent bits in 32-bit representation.

Thus

bias = 3 for 8 bit conversion ($2^{3-1} - 1 = 4 - 1 = 3$)

bias = 127 for 32 bit conversion ($2^{8-1} - 1 = 128 - 1 = 127$)

Example: 01000001110100000000000000000000

The exponent field is 10000011 = $(131)_{10}$

131 - 127 = 4

Hence the exponent of 2 will be 4, i.e. $2^4 = 16$.

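Mantissa is calculated from the remaining 23 bits of the binary representation. It consists of an implicit leading ‘1’ and a fractional part, in which the i-th bit after the binary point has weight $2^{-i}$.

Example:

01000001110100000000000000000000

The fraction bits are 10100000000000000000000, so the fractional part of the mantissa is given by:

1*(1/2) + 0*(1/4) + 1*(1/8) + 0*(1/16) + ... = 0.625

Thus the mantissa will be 1 + 0.625 = 1.625

Combining the sign, exponent and mantissa, the number is $+1.625 \times 2^4 = 26$.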
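The three decoding steps (sign, exponent, mantissa) can be put together in a short sketch. The function name `decode_float32` is hypothetical, and the code handles only normalized numbers; special cases such as zero, denormals, infinity and NaN are deliberately left out.

```python
# Sketch: decode a 32-bit IEEE 754 bit string (normalized numbers only).

def decode_float32(bit_string):
    """Return the decimal value of a 32-bit floating point bit string."""
    bits = bit_string.replace(" ", "")
    if len(bits) != 32:
        raise ValueError("expected exactly 32 bits")
    sign = -1 if bits[0] == "1" else 1
    exponent = int(bits[1:9], 2) - 127           # remove the bias of 127
    # The i-th fraction bit (counting from 1) has weight 1 / 2**i.
    fraction = sum(int(b) / 2 ** (i + 1) for i, b in enumerate(bits[9:]))
    mantissa = 1 + fraction                      # implicit leading 1
    return sign * mantissa * 2 ** exponent

print(decode_float32("01000001110100000000000000000000"))    # 26.0
print(decode_float32("1 01111100 11000000000000000000000"))  # -0.21875
```

The second call decodes the bit pattern shown at the start of this section: the sign bit is 1, the exponent is 124 - 127 = -3, and the mantissa is 1.75.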

Architecture of 8086

8086 has four 16 bit general purpose registers: AX, BX, CX and DX. They store intermediate values during execution. Each of these has two 8 bit parts (higher and lower).

AX register:

It holds operands and results during multiplication and division operations. It also acts as the accumulator during string operations.

BX register:

It holds the memory address (offset address) in indirect addressing modes.

CX register:

It holds count for instructions like loop, rotate, shift and string operations.

DX register:

It is used with AX to hold 32 bit values during multiplication and division.


Register Organization

Register organization is the arrangement of the registers in the processor. The processor designers decide the organization of the registers in a processor, so different processors may have different register organizations. Depending on the roles played by the registers, they can be categorized into two types: user-visible registers, and control and status registers.

What is Register?

Registers are the smallest and fastest memory units in the central processing unit (CPU). In the memory hierarchy, the registers in the processor sit a level above the cache memory and main memory. The registers used by the central processing unit are also called processor registers.

A register can hold the instruction, address location, or operands. Sometimes, the instruction has register as a part of itself.

Types of Registers

As discussed above, registers can be organized into two main categories, i.e. the User-Visible Registers and the Control and Status Registers. However, the registers in a processor cannot always be separated cleanly into these two categories.

This is because a register that is user-visible in one processor may not be user-visible in another. For the rest of our discussion on register organization, we will still use these two categories.

User-Visible Registers

1. General Purpose Register

2. Data Register

3. Address Register

4. Condition Codes

Control and Status Registers

1. Program Counter

2. Instruction Register

3. Memory Address Register

4. Memory Buffer Register

User-Visible Registers

These registers are visible to assembly or machine language programmers, who use them to minimize the memory references in instructions. They can be referenced only through machine or assembly language.

The registers that fall in this category are discussed below:

1. General Purpose Register

The general-purpose registers can hold either addresses or data, even though separate data registers and address registers may also exist. A general-purpose register can also hold intermediate results during program execution.

Some general-purpose registers may be restricted to specific functions. For example, some registers are used specifically for stack operations or for floating-point operations. A general-purpose register can also be employed for addressing functions.

2. Data Register

The term itself describes that these registers are employed to hold the data. But the programmers can’t use these registers for calculating operand address.

3. Address Register

An address register contains the address of an operand, or it can also act as a general-purpose register. An address register may be dedicated to a certain addressing mode. Let us understand this with some examples.

(a) Segment Pointer Register

A memory divided into segments requires a segment register to hold the base address of a segment. There can be multiple segment registers. For example, one segment register can hold the base address of the segment occupied by the operating system, while another holds the base address of the segment allotted to the current process.

(b) Index Register

The index register is employed for indexed addressing and its initial value is 0. Generally, it is used for traversing memory locations. After each reference, the index register is incremented or decremented by 1, depending upon the nature of the operation.

Sometimes the index register may be auto-indexed.

(c) Stack Pointer Register

The stack pointer register holds the address of the top of the stack.

4. Condition Code

Condition codes are flag bits that are part of the control register. The condition codes are set by the processor as a result of an operation, and they are read implicitly by machine instructions.

The programmers are not allowed to alter the condition codes. Generally, the condition codes are tested during conditional branch operations.

Control and Status Registers

The control and status registers hold the addresses or data that are important for controlling the processor’s operation. Most importantly, these registers are not visible to users. Below we discuss the control and status registers that are essential for the execution of an instruction.

1. Program Counter

The program counter is a processor register that holds the address of the instruction that has to be executed next. It is the processor that updates the program counter with the address of the next instruction to be fetched for execution.

2. Instruction Register

Instruction register has the instruction that is currently fetched. It helps in analyzing the opcode and operand present in the instruction.

3. Memory Address Register (MAR)

Memory address register holds the address of a memory location.

4. Memory Buffer Register (MBR)

The memory buffer register holds the data that has to be written to a memory location, or the data that has most recently been read.

The memory address registers (MAR) and memory buffer registers (MBR) are used to move the data between processor and memory.

Apart from the above registers, several processors have a register termed the Program Status Word (PSW). As the name suggests, it contains status information.

The fields included in Program Status Word (PSW):

Sign: This field has the resultant sign bit of the last arithmetic operation performed.

Zero: This field is set when the result of the operation is zero.

Carry: This field is set when an arithmetic operation results in a carry into or a borrow out of the high-order bit.

Equal: If a logical comparison results in equality, the Equal bit is set.

Overflow: This bit indicates the arithmetic overflow.

Interrupt: This bit is set to enable or disable the interrupts.

Supervisor: This bit indicates whether the processor is executing in the supervisor mode or the user mode.

So, these are the types of registers a processor has. The processor designer organizes the registers according to the requirement of the processor.

Addressing Modes

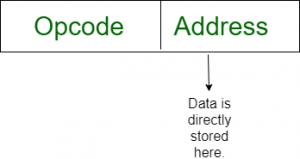

Addressing Modes – The term addressing modes refers to the way in which the operand of an instruction is specified. The addressing mode specifies a rule for interpreting or modifying the address field of the instruction before the operand is actually referenced.

Addressing modes for 8086 instructions are divided into two categories:

1) Addressing modes for data

2) Addressing modes for branch

The 8086 memory addressing modes provide flexible access to memory, allowing you to easily access variables, arrays, records, pointers, and other complex data types. The key to good assembly language programming is the proper use of memory addressing modes.

An assembly language instruction consists of two parts: an opcode and an operand.

IMPORTANT TERMS

The memory address of an operand consists of two components:

Starting address of the memory segment.

Effective address or offset: The offset is determined by adding any combination of three address elements: displacement, base and index.

Displacement: It is an 8 bit or 16 bit immediate value given in the instruction.

Base: Contents of base register, BX or BP.

Index: Content of index register SI or DI.
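The terms above can be tied together with a small sketch. It computes the offset as the sum of displacement, base and index, and then forms the address in memory by adding the starting address of the segment, which on the 8086 is the 16-bit segment register value shifted left by four bits. The function names and the sample register values are assumptions chosen for illustration.

```python
def effective_address(displacement=0, base=0, index=0):
    """Offset = displacement + base + index, wrapped to 16 bits."""
    return (displacement + base + index) & 0xFFFF


def physical_address(segment_register, offset):
    """Segment start (segment register * 16) plus offset, wrapped to 20 bits."""
    return ((segment_register << 4) + offset) & 0xFFFFF


# Hypothetical values: displacement 05H, BX = 1000H, SI = 0020H, DS = 2000H
offset = effective_address(displacement=0x05, base=0x1000, index=0x0020)
address = physical_address(0x2000, offset)
```

With these sample values the offset is 1025H and the resulting 20-bit address is 21025H.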

According to different ways of specifying an operand by 8086 microprocessor, different addressing modes are used by 8086.

Addressing modes used by 8086 microprocessor are discussed below:

Implied mode: In implied addressing the operand is specified implicitly by the instruction itself, so no address field is needed. Zero-address instructions are designed with the implied addressing mode.

Example: CLC (used to reset Carry flag to 0)

Immediate addressing mode (symbol #): In this mode the data is present in the address field of the instruction, so the data (8 bits or 16 bits long) is part of the instruction. It is designed like the one-address instruction format.

Note: A limitation of the immediate mode is that the range of constants is restricted by the size of the address field.

Example: MOV AL, 35H (move the data 35H into AL register)

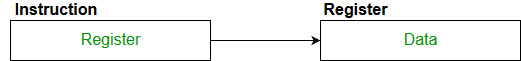

Register mode: In register addressing the operand is placed in one of the 8-bit or 16-bit general-purpose registers. The data is in the register that is specified by the instruction.

Here one register reference is required to access the data.

Example: MOV AX,CX (move the contents of CX register to AX register)

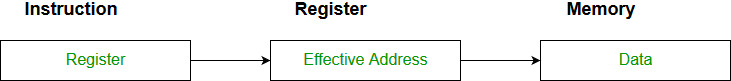

Register Indirect mode: In this mode the operand's offset is placed in one of the registers BX, BP, SI or DI, as specified in the instruction. The effective address of the data is in the base register or index register named by the instruction.

Here one register reference and one memory reference are required to access the data.

The 8086 CPUs let you access memory indirectly through a register using the register indirect addressing modes.

Example: MOV AX, [BX] (move the contents of the memory location addressed by register BX to register AX)

Auto Indexed (increment mode): The effective address of the operand is the contents of a register specified in the instruction. After the operand is accessed, the contents of this register are automatically incremented to point to the next consecutive memory location. Notation: (R1)+.

Here one register reference, one memory reference and one ALU operation are required to access the data.

Example: Add R1, (R2)+ // R1 = R1 + M[R2]; R2 = R2 + d

Useful for stepping through arrays in a loop. R2 holds the start of the array and d is the size of an element.
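The array-stepping use of the auto-increment mode can be sketched as follows. The loop body mirrors the semantics of Add R1, (R2)+ given above; the function name, the list used as memory and the element size d = 1 are assumptions made for the example.

```python
def sum_array_autoincrement(memory, start, count, d=1):
    """Accumulate `count` elements using Add R1, (R2)+ semantics."""
    r1 = 0      # R1: accumulator register
    r2 = start  # R2: address of the current array element
    for _ in range(count):
        r1 = r1 + memory[r2]  # R1 = R1 + M[R2]
        r2 = r2 + d           # R2 = R2 + d (automatic increment)
    return r1, r2


memory = [0, 0, 10, 20, 30, 0]  # hypothetical memory; array occupies addresses 2..4
total, final_r2 = sum_array_autoincrement(memory, start=2, count=3)
```

After the loop, R2 points just past the last element, which is what makes the mode convenient for loops.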

Indirect addressing mode (symbol @ or ( )): In this mode the address field of the instruction contains the address of the effective address. Here two references are required.

1st reference to get effective address.

2nd reference to access the data.

Based on where the effective address is held, the indirect mode is of two kinds:

Register Indirect: In this mode the effective address is in a register, and the name of that register is held in the address field of the instruction.

Here one register reference and one memory reference are required to access the data.

Memory Indirect: In this mode the effective address is in memory, and the memory address that holds it is kept in the address field of the instruction.

Here two memory references are required to access the data.
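The difference between the two kinds of indirection can be sketched by counting references. In this illustrative model the memory and register file are plain dictionaries, and the function names and sample addresses are assumptions made for the example.

```python
def register_indirect(registers, memory, reg_name):
    """Address field names a register that holds the effective address."""
    ea = registers[reg_name]  # one register reference
    data = memory[ea]         # one memory reference
    return data, {"register": 1, "memory": 1}


def memory_indirect(memory, address_field):
    """Address field names a memory cell that holds the effective address."""
    ea = memory[address_field]  # 1st memory reference: get the effective address
    data = memory[ea]           # 2nd memory reference: access the data
    return data, {"register": 0, "memory": 2}


memory = {100: 200, 200: 77}  # hypothetical contents: cell 100 points to cell 200
registers = {"BX": 200}

reg_value, reg_refs = register_indirect(registers, memory, "BX")
mem_value, mem_refs = memory_indirect(memory, 100)
```

Both calls reach the same operand, but the memory indirect version pays for an extra memory access.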

Indexed addressing mode: The operand's offset is the sum of the content of an index register, SI or DI, and an 8-bit or 16-bit displacement.

Example: MOV AX, [SI+05]

Based Indexed Addressing: The operand's offset is the sum of the content of a base register, BX or BP, and an index register, SI or DI.

Example: ADD AX, [BX+SI]

Based on Transfer of control, addressing modes are:

PC relative addressing mode: PC relative addressing mode is used to implement intra-segment transfer of control. In this mode the effective address is obtained by adding the displacement to the PC.

EA = PC + Address field value

PC = PC + Relative value

Base register addressing mode: Base register addressing mode is used to implement inter-segment transfer of control. In this mode the effective address is obtained by adding the base register value to the address field value.

EA = Base register + Address field value

PC = Base register + Relative value

Note:

Both PC relative and base register addressing modes are suitable for program relocation at runtime.

Base register addressing mode is well suited to writing position-independent code.
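The two formulas for transfer of control, and the relocation property mentioned in the note, can be sketched as below. The 16-bit wrap-around, the function names and the load addresses are assumptions chosen for illustration.

```python
def pc_relative_target(pc, displacement):
    """EA = PC + address field value (displacement may be negative)."""
    return (pc + displacement) & 0xFFFF


def base_register_target(base, address_field):
    """EA = base register + address field value."""
    return (base + address_field) & 0xFFFF


# The same displacement works wherever the code happens to be loaded.
target_low = pc_relative_target(0x0100, 0x0010)   # code loaded at a low address
target_high = pc_relative_target(0x4100, 0x0010)  # same code relocated by 4000H
backward = pc_relative_target(0x0100, -4)         # backward branch, e.g. a loop

# Relocating a program only requires changing the base register.
based_before = base_register_target(0x1000, 0x0020)
based_after = base_register_target(0x5000, 0x0020)
```

In both cases the instruction's address field is unchanged after relocation; only the PC or the base register differs.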

Advantages of Addressing Modes

To give programmers facilities such as pointers, counters for loop control, indexing of data and program relocation.

To reduce the number of bits in the address field of the instruction.

Micro-Operation

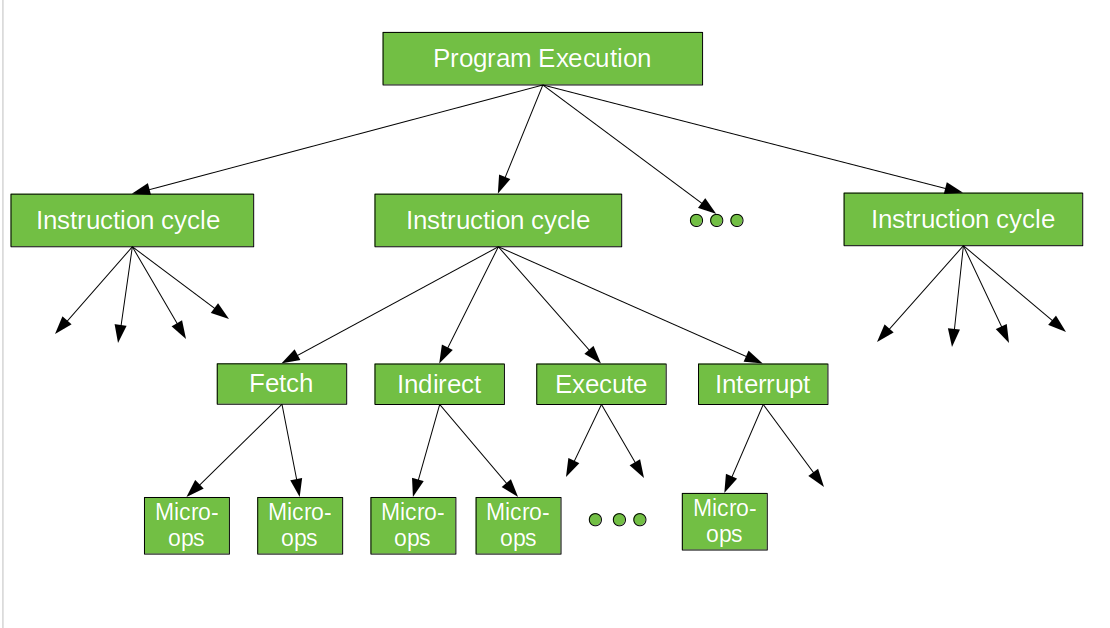

In computer central processing units, micro-operations (also known as micro-ops) are the functional, or atomic, operations of a processor. These are low-level instructions used in some designs to implement complex machine instructions. They generally perform operations on data stored in one or more registers: they transfer data between registers or between external buses of the CPU, and perform arithmetic and logical operations on registers.

In executing a program, the operation of a computer consists of a sequence of instruction cycles, with one machine instruction per cycle. Each instruction cycle is made up of a number of smaller units: the Fetch, Indirect, Execute and Interrupt cycles. Each of these cycles involves a series of steps, each of which involves the processor registers. These steps are referred to as micro-operations. The prefix micro refers to the fact that each step is very simple and accomplishes very little. The figure depicts the concept being discussed here.
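To make the idea concrete, the sketch below breaks the Fetch cycle into register-transfer steps. The particular four-step sequence is the one commonly given in textbooks and is an assumption here, since the notes do not list the steps; the function name and the dictionary used as a register file are likewise illustrative.

```python
def fetch_cycle(regs, memory):
    """Run the fetch cycle as a sequence of micro-operations and trace them."""
    trace = []
    regs["MAR"] = regs["PC"]           # address of the next instruction
    trace.append("MAR <- PC")
    regs["MBR"] = memory[regs["MAR"]]  # read the instruction word
    trace.append("MBR <- M[MAR]")
    regs["PC"] = regs["PC"] + 1        # point to the following instruction
    trace.append("PC <- PC + 1")
    regs["IR"] = regs["MBR"]           # instruction is now ready for decoding
    trace.append("IR <- MBR")
    return trace


regs = {"PC": 2, "IR": 0, "MAR": 0, "MBR": 0}
memory = [0, 0, 0x1A2B, 0]  # hypothetical memory; 1A2BH is a made-up instruction word
trace = fetch_cycle(regs, memory)
```

Each line of the trace is one micro-operation: a single, very simple register transfer.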

RISC and CISC

Reduced Instruction Set Architecture (RISC) –

The main idea behind RISC is to make the hardware simpler by using an instruction set composed of a few basic steps for loading, evaluating and storing operations. For example, a load command only loads data and a store command only stores data.

Complex Instruction Set Architecture (CISC) –

The main idea behind CISC is that a single instruction performs all the loading, evaluating and storing operations. For example, a multiplication command loads the data, evaluates the product and stores the result, hence it is complex.

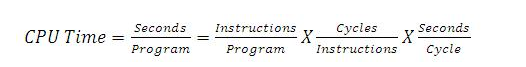

RISC: Reduce the cycles per instruction at the cost of the number of instructions per program.

CISC: The CISC approach attempts to minimize the number of instructions per program but at the cost of increase in number of cycles per instruction.
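The trade-off stated above can be expressed with the usual relation: execution time = instructions per program × cycles per instruction × clock period. The sketch below applies it to made-up numbers. The instruction counts, cycle counts and clock period are purely illustrative assumptions, not measurements of any real processor.

```python
def execution_time(instruction_count, cycles_per_instruction, clock_period_ns):
    """Execution time = instructions x cycles per instruction x clock period."""
    return instruction_count * cycles_per_instruction * clock_period_ns


# Hypothetical task: one complex instruction versus four simple ones.
risc_time = execution_time(instruction_count=4, cycles_per_instruction=1,
                           clock_period_ns=1)
cisc_time = execution_time(instruction_count=1, cycles_per_instruction=4,
                           clock_period_ns=1)
```

With these made-up figures the two times come out equal, which shows that neither approach wins automatically: RISC lowers the cycles-per-instruction factor while CISC lowers the instruction-count factor.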

Earlier, when programming was done in assembly language, a need was felt to make each instruction do more work, because programming in assembly was tedious and error-prone. This is how the CISC architecture evolved. With the rise of high-level languages, dependency on assembly reduced and the RISC architecture prevailed.

Characteristics of RISC –

Simpler instructions, hence simple instruction decoding.

An instruction fits within the size of one word.

Instruction takes a single clock cycle to get executed.

More general-purpose registers.

Simple Addressing Modes.

Fewer data types.

Pipelining can be achieved.

Characteristics of CISC –

Complex instruction, hence complex instruction decoding.

Instructions are larger than one-word size.

Instruction may take more than a single clock cycle to get executed.

Fewer general-purpose registers, as operations can be performed in memory itself.

Complex Addressing Modes.

More Data types.

| RISC | CISC |
| --- | --- |
| Focus on software | Focus on hardware |
| Uses only a hardwired control unit | Uses both hardwired and microprogrammed control units |
| Transistors are used for more registers | Transistors are used for storing complex instructions |
| Fixed-size instructions | Variable-size instructions |
| Can perform only register-to-register arithmetic operations | Can perform REG to REG, REG to MEM or MEM to MEM operations |
| Requires more registers | Requires fewer registers |
| Code size is large | Code size is small |
| An instruction executes in a single clock cycle | An instruction takes more than one clock cycle |
| An instruction fits in one word | Instructions are larger than the size of one word |

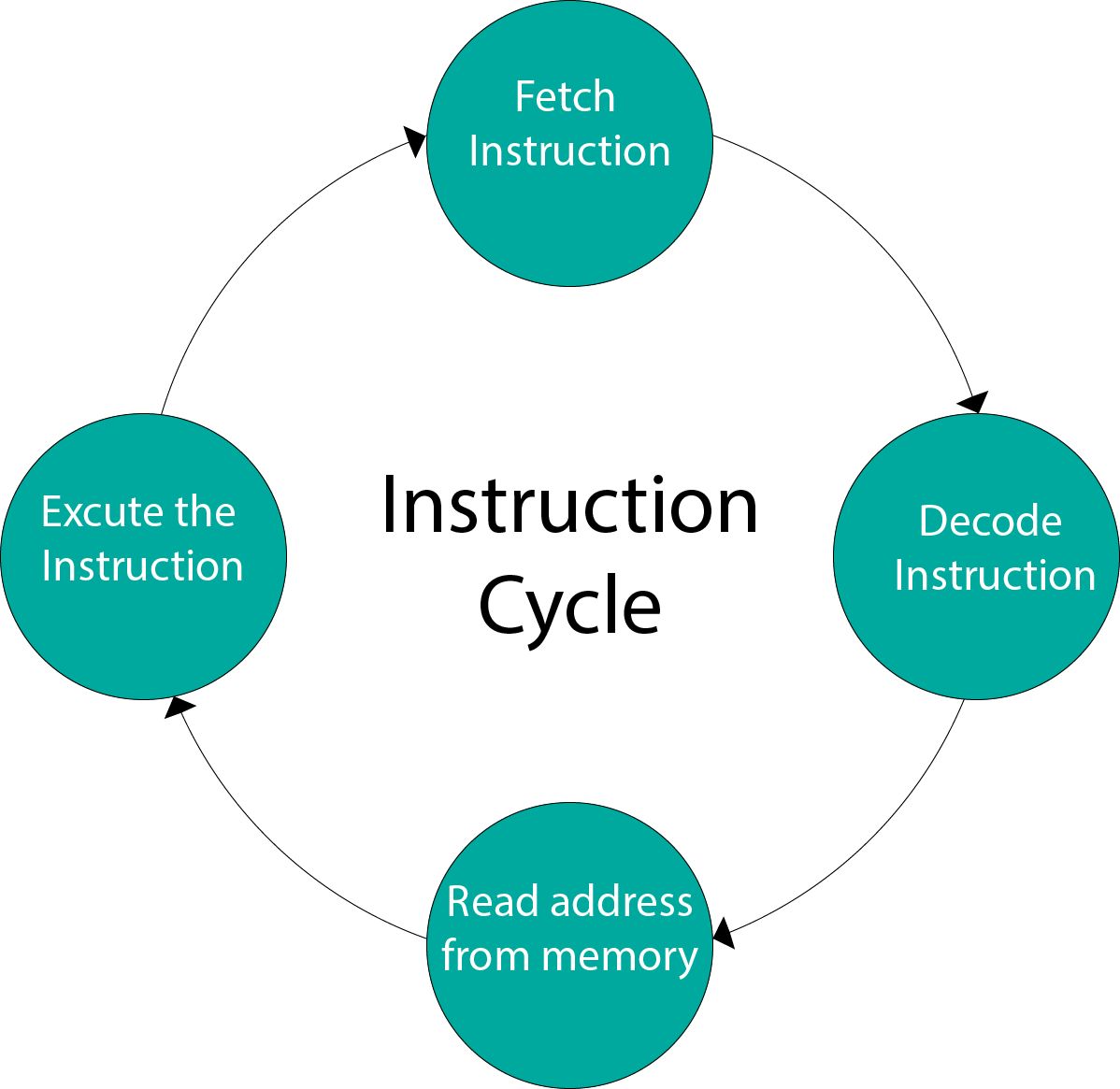

Instruction Cycle

A program residing in the memory unit of a computer consists of a sequence of instructions. These instructions are executed by the processor by going through a cycle for each instruction.

In a basic computer, each instruction cycle consists of the following phases:

Fetch instruction from memory.

Decode the instruction.

Read the effective address from memory.

Execute the instruction.
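The four phases listed above can be sketched as a loop for a toy accumulator machine. The instruction format (opcode, address, indirect flag), the four opcodes and the function name `run` are hypothetical and chosen only to show where each phase sits in the cycle.

```python
def run(program, memory):
    """Execute a toy program; each loop iteration is one instruction cycle."""
    pc = 0  # program counter
    ac = 0  # accumulator
    while True:
        instruction = program[pc]                # 1. fetch instruction from memory
        pc += 1
        opcode, address, indirect = instruction  # 2. decode the instruction
        if indirect:
            address = memory[address]            # 3. read the effective address
        if opcode == "LOAD":                     # 4. execute the instruction
            ac = memory[address]
        elif opcode == "ADD":
            ac += memory[address]
        elif opcode == "STORE":
            memory[address] = ac
        elif opcode == "HALT":
            return ac
        else:
            raise ValueError(f"unknown opcode: {opcode}")


memory = [5, 7, 0, 1]  # hypothetical data; cell 3 holds the address of cell 1
program = [
    ("LOAD", 0, False),   # AC = M[0]
    ("ADD", 3, True),     # AC = AC + M[M[3]]
    ("STORE", 2, False),  # M[2] = AC
    ("HALT", 0, False),
]
final_ac = run(program, memory)
```

Only the ADD instruction uses phase 3, because it is the only one whose address field is marked indirect.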

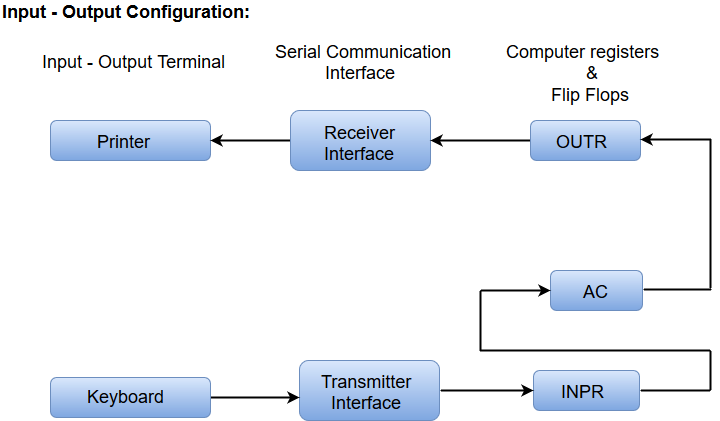

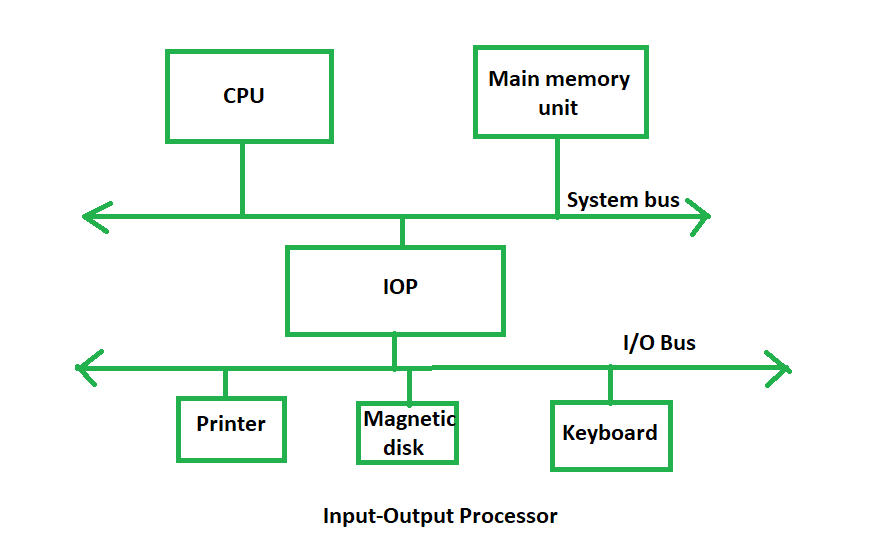

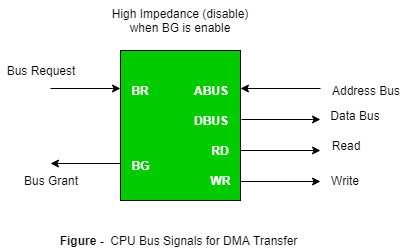

Input-Output Configuration

In computer architecture, input-output devices act as an interface between the machine and the user.

Instructions and data stored in the memory must come from some input device. The results are displayed to the user through some output device.

The following block diagram shows the input-output configuration for a basic computer.

The input-output terminals send and receive information.

Each quantity of information transferred consists of eight bits of an alphanumeric code.

The information generated through the keyboard is shifted into an input register 'INPR'.

The information for the printer is stored in the output register 'OUTR'.

Registers INPR and OUTR communicate with a communication interface serially and with the AC in parallel.

The transmitter interface receives information from the keyboard and transmits it to INPR.

The receiver interface receives information from OUTR and sends it to the printer serially.
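The serial and parallel paths described in the points above can be sketched as follows: eight bits arrive one at a time and are shifted into INPR, after which the whole byte moves to the AC in a single step. The most-significant-bit-first order and the function name are assumptions made for the example.

```python
def shift_in_serial(bits):
    """Shift eight bits into INPR one at a time (MSB first, by assumption)."""
    inpr = 0
    for bit in bits:
        inpr = ((inpr << 1) | bit) & 0xFF  # serial shift into the 8-bit register
    return inpr


key_bits = [0, 1, 0, 0, 0, 0, 0, 1]  # eight-bit code for the letter 'A' (41H)
inpr = shift_in_serial(key_bits)
ac = inpr                            # parallel transfer: all eight bits at once
```

The same idea runs in reverse for output: the AC loads OUTR in parallel, and OUTR is then sent to the printer bit by bit.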

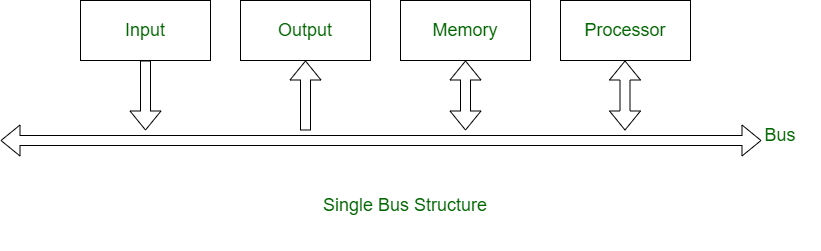

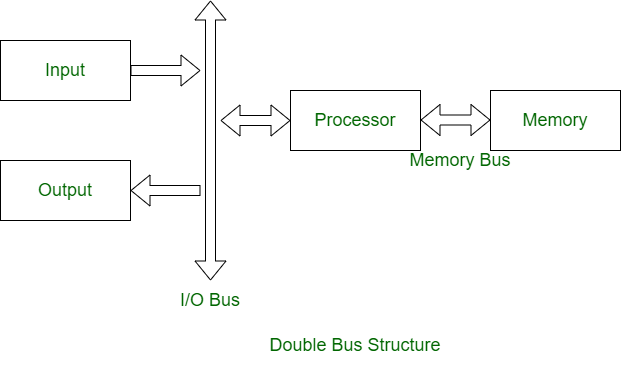

Single Bus Structure and Double Bus Structure

1. Single Bus Structure :

In a single bus structure, one common bus is used for communication between the peripherals and the microprocessor. Its disadvantage is that only one transfer can take place on the common bus at a time, so the bus becomes a bottleneck.

2. Double Bus Structure :

In a double bus structure, one bus is used to fetch instructions while the other is used to fetch the data required for execution. This overcomes the bottleneck of the single bus structure.

Differences between Single Bus and Double Bus Structure :

| Single Bus Structure | Double Bus Structure |
| --- | --- |
| One common bus is used for communication between peripherals and processor. | Two buses are used, one for communication from peripherals and the other for the processor. |
| Instructions and data are both transferred on the same bus. | Instructions and data are transferred on different buses. |
| Its performance is low. | Its performance is high. |
| Cost of single bus structure is low. | Cost of double bus structure is high. |
| Number of cycles for execution is more. | Number of cycles for execution is less. |
| Execution of a process is slow. | Execution of a process is fast. |
| Number of registers associated is less. | Number of registers associated is more. |
| A single operand can be read from the bus at a time. | Two operands can be read at a time. |

Three-Bus Organization

In a three-bus organization, two buses may be used as source buses while the third is used as the destination. The source buses move data out of registers (out-bus), and the destination bus may move data into a register (in-bus). Each of the two out-buses is connected to an ALU input point. The output of the ALU is connected directly to the in-bus. As can be expected, the more buses we have, the more data we can move within a single clock cycle. However, increasing the number of buses will also increase the complexity of the hardware. Figure (8.3) shows an example of a three-bus datapath.

Branch Instruction

A branch is an instruction in a computer program that can cause a computer to begin executing a different instruction sequence and thus deviate from its default behaviour of executing instructions in order. Branch may also refer to the act of switching execution to a different instruction sequence as a result of executing a branch instruction. Branch instructions are used to implement control flow in program loops and conditionals (i.e., executing a particular sequence of instructions only if certain conditions are satisfied).