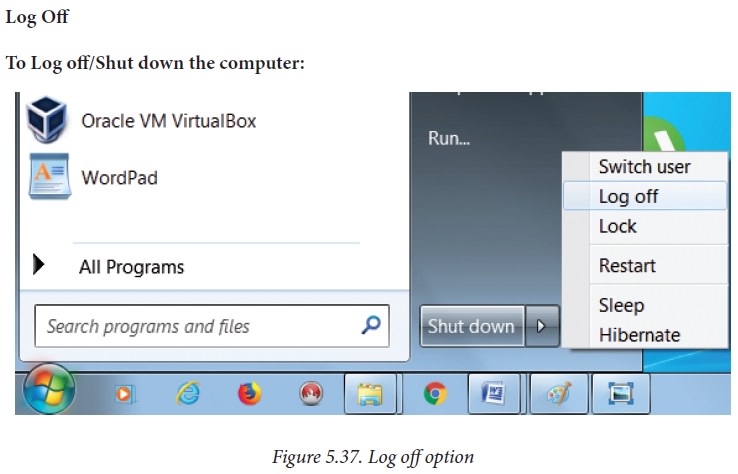

BCA 3RD SEM OS NOTES 2023-24

UNIT 1

Time Sharing Operating System

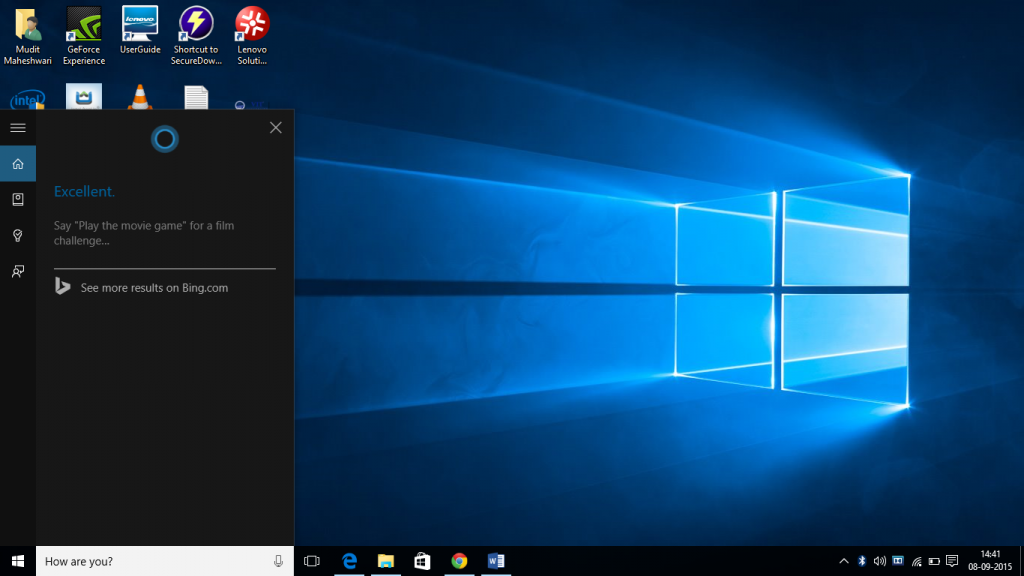

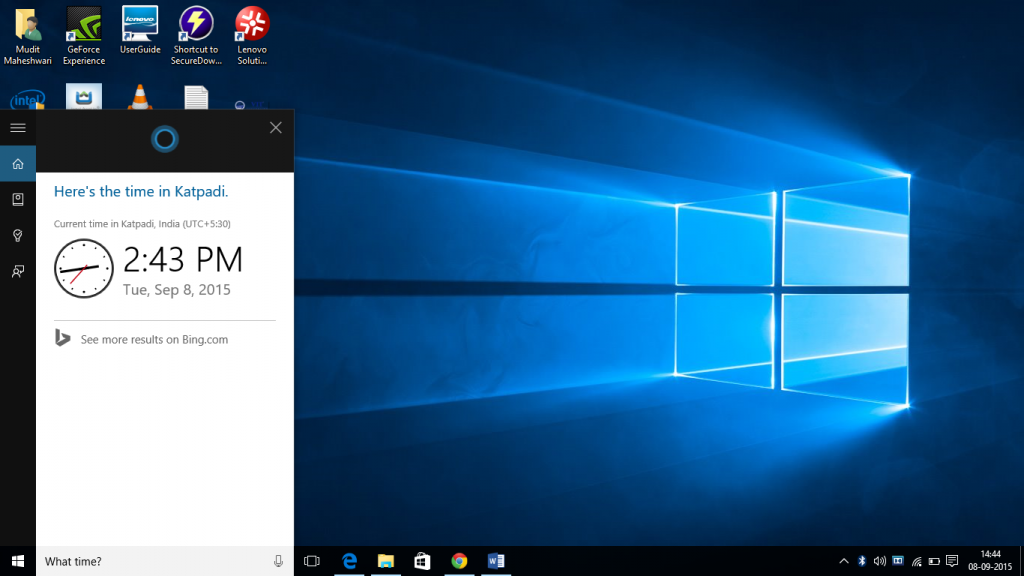

Multiprogrammed batch systems provided an environment in which the various system resources were used effectively, but they did not provide for user interaction with the computer system. Time sharing is a logical extension of multiprogramming. The CPU executes multiple jobs by switching among them, and the switches occur so frequently that users can interact with each program while it is running.

A time-shared operating system allows multiple users to share a computer simultaneously. Each action or command in a time-shared system tends to be short, so only a little CPU time is needed for each user. As the system switches rapidly from one user to the next, each user is given the impression that the entire computer system is dedicated to his or her use, even though it is being shared among many users.


A time-shared operating system uses CPU scheduling and multiprogramming to provide each user with a small portion of a shared computer. Each user has at least one separate program in memory. A program that is loaded into memory and executing typically runs for only a short period before it either finishes or needs to perform I/O. The short period during which a user gets the attention of the CPU is known as a time slice, time slot, or quantum. It is typically of the order of 10 to 100 milliseconds.

Time-shared operating systems are more complex than multiprogrammed operating systems. In both, multiple jobs must be kept in memory simultaneously, so the system must provide memory management and protection. To achieve a reasonable response time, jobs may have to be swapped between main memory and the disk, which now serves as a backing store for main memory. A common method of achieving this goal is virtual memory, a technique that allows the execution of a job that may not be completely in memory.
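The effect of the time slice can be illustrated with a small simulation. The following is only a minimal sketch of round-robin time slicing: the user names, the CPU demands, and the 10 ms quantum are hypothetical values chosen for illustration, and context-switch overhead and I/O waits are ignored.

```python
from collections import deque

def round_robin(cpu_demand_ms, quantum_ms):
    """Give each user at most one quantum per turn until all work is done.

    Returns (timeline, finish_times), where timeline is a list of
    (user, time_run_ms) slices in the order they were executed.
    """
    ready = deque(cpu_demand_ms.items())  # ready queue of (user, remaining ms)
    clock = 0
    timeline = []
    finish_times = {}
    while ready:
        user, remaining = ready.popleft()   # this user becomes active
        run = min(quantum_ms, remaining)    # run until the quantum expires or the work ends
        clock += run
        timeline.append((user, run))
        if remaining > run:
            ready.append((user, remaining - run))  # back to the end of the ready queue
        else:
            finish_times[user] = clock
    return timeline, finish_times

# Hypothetical workload: three users with different CPU demands (in ms).
timeline, finish_times = round_robin({"user1": 30, "user2": 10, "user3": 25}, quantum_ms=10)
print(timeline)
print(finish_times)
```

Because no user holds the CPU for longer than one quantum, every user gets served within a short interval, which is what produces the impression of a dedicated machine.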

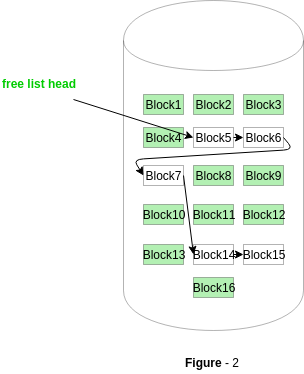

In the above figure, user 5 is in the active state, users 1, 2, 3, and 4 are in the waiting state, and user 6 is in the ready state.

1. Active State –

The user’s program is under the control of the CPU. Only one program can be in this state at a time.

2. Ready State –

The user’s program is ready to execute but is waiting for its turn to get the CPU. More than one user can be in the ready state at a time.

3. Waiting State –

The user’s program is waiting for some input/output operation. More than one user can be in a waiting state at a time.

Requirements of Time Sharing Operating System :

1. An alarm clock mechanism to send an interrupt signal to the CPU after every time slice.

2. A memory protection mechanism to prevent one job’s instructions and data from interfering with other jobs.

Advantages :

1. Each task gets an equal opportunity.

2. Fewer chances of duplication of software.

3. CPU idle time can be reduced.

Disadvantages :

1. Reliability problem.

2. Care must be taken of the security and integrity of user programs and data.

3. Data communication problem.

What is Parallel Processing ?

The term ‘parallel processing’ covers a large class of techniques that provide simultaneous data-processing operations for the purpose of increasing the computational speed of a computer system. A parallel processing system is capable of concurrent data processing to achieve faster execution times.

As an example, the next instruction can be read from memory while the current instruction is being executed in the ALU. The system can have two or more ALUs and be able to execute two or more instructions at the same time. In addition, two or more processors may be used to increase the processing capacity of the computer. The amount of hardware increases with parallel processing, and with it the cost of the system. However, technological development has reduced hardware costs to the point where parallel processing methods are economically feasible.


Parallel processing can be viewed at various levels of complexity. At the lowest level, we distinguish between parallel and serial operations by the type of registers used. Shift registers operate serially, one bit at a time, while parallel registers operate on all the bits of the word simultaneously. At higher levels of complexity, parallel processing is achieved by having multiple functional units that perform separate or similar operations simultaneously. Parallel processing is established by distributing the data among these functional units.

Types of Operating Systems

An operating system performs all the basic tasks, such as managing files, processes, and memory. It therefore acts as the manager of all the resources, i.e. a resource manager, and serves as an interface between the user and the machine.

Types of Operating Systems: Some widely used operating systems are as follows-


1. Batch Operating System –

In this type of operating system, the user does not interact with the computer directly. An operator takes similar jobs that have the same requirements and groups them into batches. It is the responsibility of the operator to sort jobs with similar needs.

Advantages of Batch Operating System:

· Although it is generally very difficult to guess the time required for a job to complete, the processors of a batch system know how long a job will take while it is in the queue

· Multiple users can share the batch systems

· The idle time of a batch system is very low

· It is easy to manage large work repeatedly in batch systems

Disadvantages of Batch Operating System:

· The computer operators must be well acquainted with batch systems

· Batch systems are hard to debug

· It is sometimes costly

· The other jobs will have to wait for an unknown time if any job fails

Examples of Batch based Operating System: Payroll System, Bank Statements, etc.

2. Time-Sharing Operating Systems –

Each task is given some time to execute so that all the tasks work smoothly. Each user gets a share of CPU time, as they all use a single system. These systems are also known as multitasking systems. The tasks can come from a single user or from different users. The time that each task gets to execute is called the quantum. After this time interval is over, the OS switches to the next task.

Advantages of Time-Sharing OS:

· Each task gets an equal opportunity

· Fewer chances of duplication of software

· CPU idle time can be reduced

Disadvantages of Time-Sharing OS:

· Reliability problem

· Care must be taken of the security and integrity of user programs and data

· Data communication problem

Examples of Time-Sharing OSs are: Multics, Unix, etc.

3. Distributed Operating System –

This type of operating system is a recent advancement in the world of computer technology and is being widely accepted all over the world at a great pace. Various autonomous, interconnected computers communicate with each other over a shared communication network. Each independent system has its own memory unit and CPU. These are referred to as loosely coupled systems or distributed systems. The processors in these systems differ in size and function. The major benefit of working with this type of operating system is that a user can always access files or software that are not actually present on his or her own system but on some other system connected to the network, i.e. remote access is enabled among the devices connected to that network.

Advantages of Distributed Operating System:

· Failure of one system will not affect communication among the others, as all systems are independent of each other

· Electronic mail increases the data exchange speed

· Since resources are shared, computation is fast and durable

· Load on host computer reduces

· These systems are easily scalable as many systems can be easily added to the network

· Delay in data processing reduces

Disadvantages of Distributed Operating System:

· Failure of the main network will stop the entire communication

· The languages used to establish distributed systems are not yet well defined

· These types of systems are not readily available, as they are very expensive. In addition, the underlying software is highly complex and not yet well understood

Examples of Distributed Operating System are- LOCUS, etc.

4. Network Operating System –

These systems run on a server and provide the capability to manage data, users, groups, security, applications, and other networking functions. They allow shared access to files, printers, security, applications, and other networking functions over a small private network. One more important aspect of network operating systems is that all users are well aware of the underlying configuration, of all other users within the network, of their individual connections, and so on. That is why these computers are popularly known as tightly coupled systems.

Advantages of Network Operating System:

· Highly stable centralized servers

· Security concerns are handled through servers

· New technologies and hardware upgrades are easily integrated into the system

· Server access is possible remotely from different locations and types of systems

Disadvantages of Network Operating System:

· Servers are costly

· User has to depend on a central location for most operations

· Maintenance and updates are required regularly

Examples of Network Operating System are: Microsoft Windows Server 2003, Microsoft Windows Server 2008, UNIX, Linux, Mac OS X, Novell NetWare, and BSD, etc.

5. Real-Time Operating System –

These types of OSs serve real-time systems. The time interval required to process and respond to inputs is very small. This time interval is called response time.

Real-time systems are used when time requirements are very strict, as in missile systems, air traffic control systems, robots, etc.

There are two types of real-time operating systems:

· Hard Real-Time Systems:

These OSs are meant for applications where time constraints are very strict and even the shortest possible delay is not acceptable. These systems are built for life-saving equipment such as automatic parachutes or airbags, which must be readily available in case of an accident. Virtual memory is rarely found in these systems.

· Soft Real-Time Systems:

These OSs are for applications where the time constraint is less strict.

Advantages of RTOS:

· Maximum Consumption: Maximum utilization of devices and system, thus more output from all the resources

· Task Shifting: The time needed to switch between tasks in these systems is very small. For example, older systems take about 10 microseconds to switch from one task to another, while the latest systems take about 3 microseconds.

· Focus on Application: The system focuses on running applications and gives less importance to applications that are in the queue.

· Real-time operating system in the embedded system: Since the programs are small, an RTOS can also be used in embedded systems, such as in transport and others.

· Error Free: These types of systems are designed to be error-free.

· Memory Allocation: Memory allocation is best managed in these types of systems.

Disadvantages of RTOS:

· Limited Tasks: Very few tasks run at the same time, and the system concentrates on a few applications in order to avoid errors.

· Use heavy system resources: The system resources required are sometimes demanding, and they are expensive as well.

· Complex Algorithms: The algorithms are very complex and difficult for the designer to write.

· Device driver and interrupt signals: An RTOS needs specific device drivers and interrupt signals so that it can respond to interrupts as early as possible.

· Thread Priority: It is not good to set thread priority, as these systems rarely switch tasks.

Examples of applications of Real-Time Operating Systems are: scientific experiments, medical imaging systems, industrial control systems, weapon systems, robots, air traffic control systems, etc.

Memory Organisation in Computer Architecture

Memory is organized as a collection of cells, each of which is identified by a unique number called its address. Each cell recognizes control signals such as “read” and “write”, which the CPU generates when it wants to read from or write to an address. Because a program resides in memory, its instructions must be transferred from memory to the CPU whenever the CPU executes it. To access an instruction, the CPU generates a memory request.

Memory Management in Operating System

The term Memory can be defined as a collection of data in a specific format. It is used to store instructions and processed data. The memory comprises a large array or group of words or bytes, each with its own location. The primary motive of a computer system is to execute programs. These programs, along with the information they access, should be in the main memory during execution. The CPU fetches instructions from memory according to the value of the program counter.

To achieve a degree of multiprogramming and proper utilization of memory, memory management is important. Many memory management methods exist, reflecting various approaches, and the effectiveness of each algorithm depends on the situation.

What is Main Memory:

The main memory is central to the operation of a modern computer. Main memory is a large array of words or bytes, ranging in size from hundreds of thousands to billions. It is a repository of rapidly available information shared by the CPU and I/O devices, and it is the place where programs and information are kept while the processor is actively using them. Main memory is closely associated with the processor, so moving instructions and information into and out of the processor is extremely fast. Main memory is also known as RAM (Random Access Memory). It is volatile memory: RAM loses its data when a power interruption occurs.

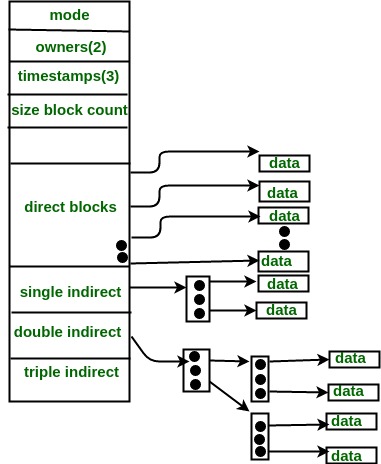

Figure 1: Memory hierarchy

What is Memory Management :

In a multiprogramming computer, the operating system resides in a part of memory and the rest is used by multiple processes. The task of subdividing the memory among different processes is called memory management. Memory management is a method in the operating system to manage operations between main memory and disk during process execution. The main aim of memory management is to achieve efficient utilization of memory.

Why Memory Management is required:

To allocate and de-allocate memory before and after process execution.

To keep track of the memory space used by processes.

To minimize fragmentation issues.

To ensure proper utilization of main memory.

To maintain data integrity while a process is executing.

We now discuss the concepts of logical address space and physical address space:

Logical and Physical Address Space:

Logical Address space: An address generated by the CPU is known as a “Logical Address”. It is also known as a Virtual address. The logical address space can be defined as the size of the process. A logical address can change.

Physical Address space: An address seen by the memory unit (i.e. the one loaded into the memory address register of the memory) is commonly known as a “Physical Address”. A physical address is also known as a Real address. The set of all physical addresses corresponding to the logical addresses is known as the physical address space. The run-time mapping from virtual to physical addresses is done by a hardware device called the Memory Management Unit (MMU), which computes the physical address. The physical address always remains constant.

Static and Dynamic Loading:

Loading a process into main memory is done by a loader. There are two different types of loading:

Static loading: The entire program is loaded into memory at a fixed address. It requires more memory space.

Dynamic loading: Ordinarily, the entire program and all data of a process must be in physical memory for the process to execute, so the size of a process is limited to the size of physical memory. To obtain better memory utilization, dynamic loading is used. In dynamic loading, a routine is not loaded until it is called. All routines reside on disk in a relocatable load format. One advantage of dynamic loading is that an unused routine is never loaded. This type of loading is useful when large amounts of code are needed to handle cases that occur infrequently.

Static and Dynamic linking:

To perform a linking task a linker is used. A linker is a program that takes one or more object files generated by a compiler and combines them into a single executable file.

Static linking: In static linking, the linker combines all necessary program modules into a single executable program. So there is no runtime dependency. Some operating systems support only static linking, in which system language libraries are treated like any other object module.

Dynamic linking: The basic concept of dynamic linking is similar to that of dynamic loading. In dynamic linking, a “stub” is included for each appropriate library routine reference. A stub is a small piece of code. When the stub is executed, it checks whether the needed routine is already in memory. If it is not, the program loads the routine into memory.

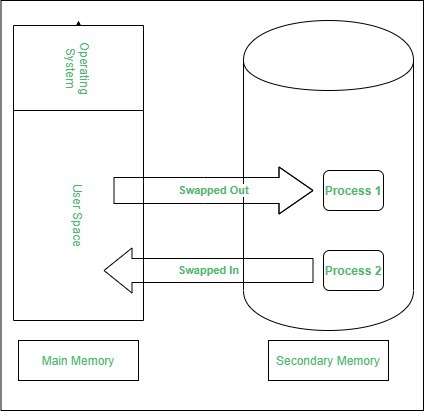

Swapping :

A process must reside in memory in order to be executed. Swapping is the act of temporarily moving a process out of main memory, which is fast compared to secondary memory, into secondary memory. Swapping allows more processes to be run than can fit into memory at one time. The major part of swap time is transfer time, and the total transfer time is directly proportional to the amount of memory swapped. Swapping is also known as roll out, roll in, because if a higher-priority process arrives and wants service, the memory manager can swap out a lower-priority process and then load and execute the higher-priority process. When the higher-priority process finishes, the lower-priority process is swapped back into memory and continues execution.
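The proportionality between transfer time and the amount of memory swapped can be checked with a quick calculation. This is a rough sketch: the process size, the transfer rate, and the optional latency term are assumed numbers, not measurements.

```python
def swap_time_ms(process_size_mb, transfer_rate_mb_per_s, latency_ms=0.0):
    """Time to move one process between main memory and the backing store."""
    return latency_ms + (process_size_mb / transfer_rate_mb_per_s) * 1000.0

# Hypothetical figures: a 100 MB process and a disk that transfers 50 MB per second.
one_way = swap_time_ms(100, 50)
print(one_way)       # time to swap the process out
print(2 * one_way)   # swapping one process out and another of equal size in
```

Doubling the process size doubles the transfer time, which is why systems try to swap only what is actually in use.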

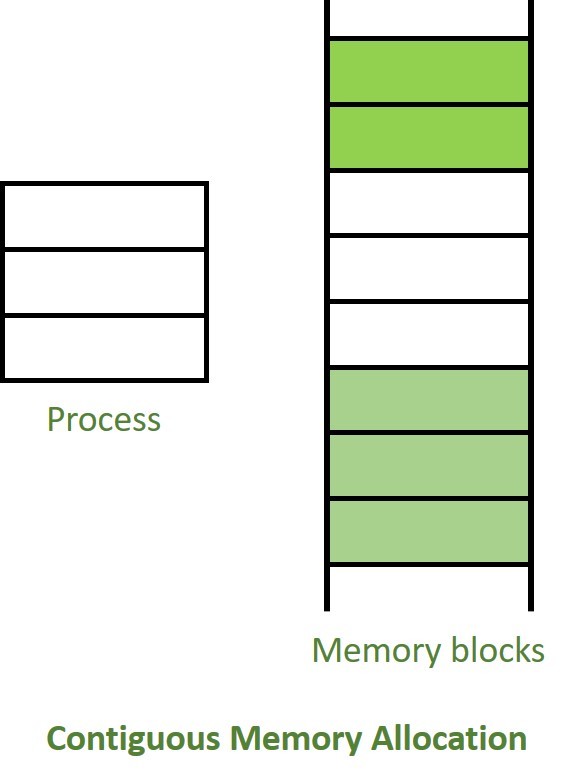

Contiguous Memory Allocation :

The main memory must accommodate both the operating system and the various user processes. Therefore, the allocation of memory is an important task of the operating system. Memory is usually divided into two partitions: one for the resident operating system and one for the user processes. We normally need several user processes to reside in memory simultaneously, so we need to consider how to allocate available memory to the processes that are in the input queue waiting to be brought into memory. In contiguous memory allocation, each process is contained in a single contiguous section of memory.

Memory allocation:

To obtain proper memory utilization, memory must be allocated in an efficient manner. One of the simplest methods for allocating memory is to divide memory into several fixed-sized partitions, each of which contains exactly one process. Thus, the degree of multiprogramming is bound by the number of partitions.

Multiple partition allocation: In this method, a process is selected from the input queue and loaded into a free partition. When the process terminates, the partition becomes available for other processes.

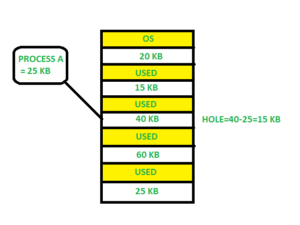

Variable partition allocation: In this method, the operating system maintains a table that indicates which parts of memory are available and which are occupied by processes. Initially, all memory is available for user processes and is considered one large block of available memory. A block of available memory is known as a “hole”. When a process arrives and needs memory, we search for a hole that is large enough to store it. If we find one, we allocate only as much memory as the process needs and keep the rest available to satisfy future requests. This leads to the dynamic storage allocation problem, which concerns how to satisfy a request of size n from a list of free holes. There are some solutions to this problem:

First fit:-

In the first fit, the first free hole that is large enough to satisfy the requirement of the process is allocated.

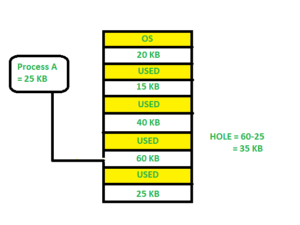

Here, in this diagram, the 40 KB memory block is the first available free hole that can store process A (size 25 KB), because the first two blocks do not have sufficient memory space.

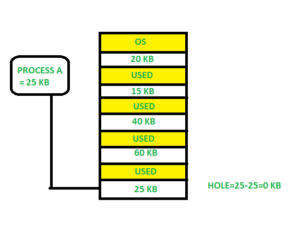

Best fit:-

In the best fit, the smallest hole that is big enough for the process is allocated. For this, we must search the entire list, unless the list is ordered by size.

Here in this example, we first traverse the complete list and find that the last hole, of 25 KB, is the most suitable hole for process A (size 25 KB).

In this method, memory utilization is the highest compared with the other memory allocation techniques.

Worst fit:-

In the worst fit, the largest available hole is allocated to the process. This method produces the largest leftover hole.

Here in this example, process A (size 25 KB) is allocated to the largest available memory block, which is 60 KB. Inefficient memory utilization is a major issue in the worst fit.
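The three strategies can be compared side by side with a short sketch. The hole list below is an assumed layout chosen to be consistent with the examples above (two holes that are too small, then 40 KB, 60 KB, and a final 25 KB hole); the exact sizes in the figures may differ.

```python
def first_fit(holes, size):
    """Index of the first hole that is large enough, or None."""
    for index, hole in enumerate(holes):
        if hole >= size:
            return index
    return None

def best_fit(holes, size):
    """Index of the smallest hole that is large enough, or None."""
    candidates = [(hole, index) for index, hole in enumerate(holes) if hole >= size]
    return min(candidates)[1] if candidates else None

def worst_fit(holes, size):
    """Index of the largest hole that is large enough, or None."""
    candidates = [(hole, index) for index, hole in enumerate(holes) if hole >= size]
    return max(candidates)[1] if candidates else None

holes_kb = [10, 20, 40, 60, 25]   # assumed free holes, in memory order
process_a_kb = 25

for strategy in (first_fit, best_fit, worst_fit):
    index = strategy(holes_kb, process_a_kb)
    leftover = holes_kb[index] - process_a_kb
    print(strategy.__name__, "-> hole of", holes_kb[index], "KB, leftover", leftover, "KB")
```

First fit stops at the 40 KB hole, best fit scans the whole list and picks the 25 KB hole with nothing left over, and worst fit picks the 60 KB hole and leaves the largest remainder.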

Fragmentation:

Fragmentation occurs when processes are loaded into memory and removed after execution, leaving behind small free holes. These holes cannot be assigned to new processes because they are not combined, or because individually they do not fulfill the memory requirement of the process. To achieve a high degree of multiprogramming, we must reduce this waste of memory, i.e. the fragmentation problem. There are two types of fragmentation in operating systems:

Internal fragmentation:

Internal fragmentation occurs when the memory block allocated to a process is larger than the requested size. The unused space left over inside the block creates the internal fragmentation problem.

Example: Suppose fixed partitioning is used for memory allocation, with blocks of size 3MB, 6MB, and 7MB in memory. Now a new process p4 of size 2MB arrives and requests a block of memory. It gets the 3MB block, but the remaining 1MB is wasted and cannot be allocated to other processes. This is called internal fragmentation.

External fragmentation:

In external fragmentation, enough free memory exists in total to satisfy a request, but we cannot assign it to the process because the free blocks are not contiguous.

Example: Suppose (continuing the above example) three processes p1, p2, and p3 arrive with sizes 2MB, 4MB, and 7MB respectively. They are allocated memory blocks of size 3MB, 6MB, and 7MB respectively. After allocation, 1MB is left over from the block given to p1 and 2MB from the block given to p2. Suppose a new process p4 arrives and demands a 3MB block of memory. That much memory is free in total, but we cannot assign it because the free space is not contiguous. This is called external fragmentation.
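The arithmetic of this example can be reproduced in a few lines. The sketch below takes the block and process sizes from the example; the function name `fragmentation_report` is a hypothetical helper, and the free pieces are treated as separate, non-adjacent regions, as the example assumes.

```python
def fragmentation_report(free_pieces_mb, request_mb):
    """Compare a request against the total free memory and the largest single piece."""
    total_free = sum(free_pieces_mb)
    largest_piece = max(free_pieces_mb, default=0)
    return {
        "total_free_mb": total_free,
        "largest_piece_mb": largest_piece,
        "enough_in_total": total_free >= request_mb,
        "fits_contiguously": largest_piece >= request_mb,
    }

blocks_mb = [3, 6, 7]      # memory blocks from the example
processes_mb = [2, 4, 7]   # p1, p2, p3

# Space left over in each block after its process is placed.
leftovers_mb = [block - proc for block, proc in zip(blocks_mb, processes_mb)]
print(leftovers_mb)

# p4 asks for 3 MB: enough memory is free in total, but no single piece is big enough.
print(fragmentation_report(leftovers_mb, request_mb=3))
```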

Both the first-fit and best-fit strategies for memory allocation suffer from external fragmentation. To overcome the external fragmentation problem, compaction is used. In the compaction technique, all free memory space is combined into one large block, so that this space can be used effectively by other processes.

Another possible solution to external fragmentation is to allow the logical address space of a process to be noncontiguous, thus permitting the process to be allocated physical memory wherever it is available.

Paging:

Paging is a memory management scheme that eliminates the need for contiguous allocation of physical memory. This scheme permits the physical address space of a process to be non-contiguous.

Logical Address or Virtual Address (represented in bits): An address generated by the CPU

Logical Address Space or Virtual Address Space (represented in words or bytes): The set of all logical addresses generated by a program

Physical Address (represented in bits): An address actually available on a memory unit

Physical Address Space (represented in words or bytes): The set of all physical addresses corresponding to the logical addresses

Example:

If Logical Address = 31 bits, then Logical Address Space = $2^{31}$ words = 2 G words (1 G = $2^{30}$)

If Logical Address Space = 128 M words = $2^{7} \times 2^{20}$ words, then Logical Address = $\log_2 2^{27}$ = 27 bits

If Physical Address = 22 bits, then Physical Address Space = $2^{22}$ words = 4 M words (1 M = $2^{20}$)

If Physical Address Space = 16 M words = $2^{4} \times 2^{20}$ words, then Physical Address = $\log_2 2^{24}$ = 24 bits
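These conversions between address width and address space size are easy to verify programmatically. The following sketch uses hypothetical helper names and assumes the address space size is an exact power of two, as in the examples.

```python
K, M, G = 2**10, 2**20, 2**30

def space_size(address_bits):
    """Number of addressable words for a given address width."""
    return 2 ** address_bits

def bits_needed(space_words):
    """Address width for a space whose size is an exact power of two."""
    bits = space_words.bit_length() - 1
    if 2 ** bits != space_words:
        raise ValueError("address space size must be a power of two")
    return bits

print(space_size(31) // G, "G words")   # 31-bit logical address
print(bits_needed(128 * M), "bits")     # 128 M words of logical address space
print(space_size(22) // M, "M words")   # 22-bit physical address
print(bits_needed(16 * M), "bits")      # 16 M words of physical address space
```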

The mapping from virtual to physical address is done by the memory management unit (MMU) which is a hardware device and this mapping is known as the paging technique.

The Physical Address Space is conceptually divided into several fixed-size blocks, called frames.

The Logical Address Space is also split into fixed-size blocks, called pages.

Page Size = Frame Size

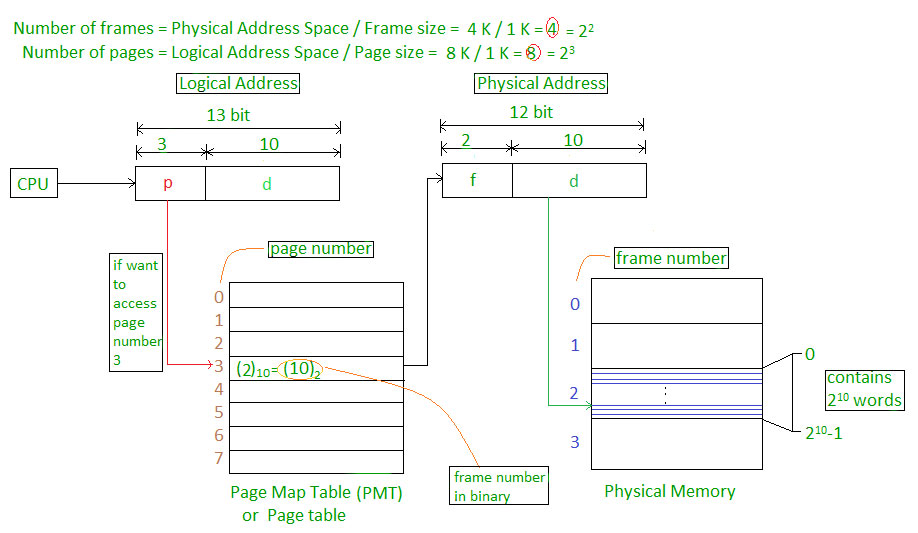

Let us consider an example:

Physical Address = 12 bits, then Physical Address Space = 4 K words

Logical Address = 13 bits, then Logical Address Space = 8 K words

Page size = frame size = 1 K words (assumption)

The address generated by the CPU is divided into

Page number (p): The number of bits required to represent the pages in the Logical Address Space.

Page offset (d): The number of bits required to represent a particular word in a page, i.e. the word number within a page. It is determined by the page size.

Physical Address is divided into

Frame number (f): The number of bits required to represent the frames in the Physical Address Space.

Frame offset (d): The number of bits required to represent a particular word in a frame, i.e. the word number within a frame. It is determined by the frame size.
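For the example above (13-bit logical address, 12-bit physical address, 1 K-word pages), the offset d takes 10 bits, the page number p takes 3 bits, and the frame number f takes 2 bits. The translation can be sketched as follows; the page table contents are hypothetical, and pages that have no entry are treated as not being in memory.

```python
PAGE_SIZE = 1024    # 1 K words per page and per frame
OFFSET_BITS = 10    # log2(PAGE_SIZE)

# Hypothetical page table: page number -> frame number (4 frames, numbered 0..3).
page_table = {0: 2, 1: 0, 5: 3}

def translate(logical_address):
    """Split a logical address into (p, d) and rebuild it as (f, d)."""
    p = logical_address >> OFFSET_BITS        # high-order bits: page number
    d = logical_address & (PAGE_SIZE - 1)     # low-order bits: offset within the page
    if p not in page_table:
        raise LookupError(f"page {p} is not in memory")
    f = page_table[p]
    return (f << OFFSET_BITS) | d             # the offset is carried over unchanged

print(translate(100))           # page 0, offset 100 -> frame 2
print(translate(5 * 1024 + 7))  # page 5, offset 7   -> frame 3
```

Only the page number is replaced by a frame number; the offset is the same in the logical and the physical address, which is why page size and frame size must be equal.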

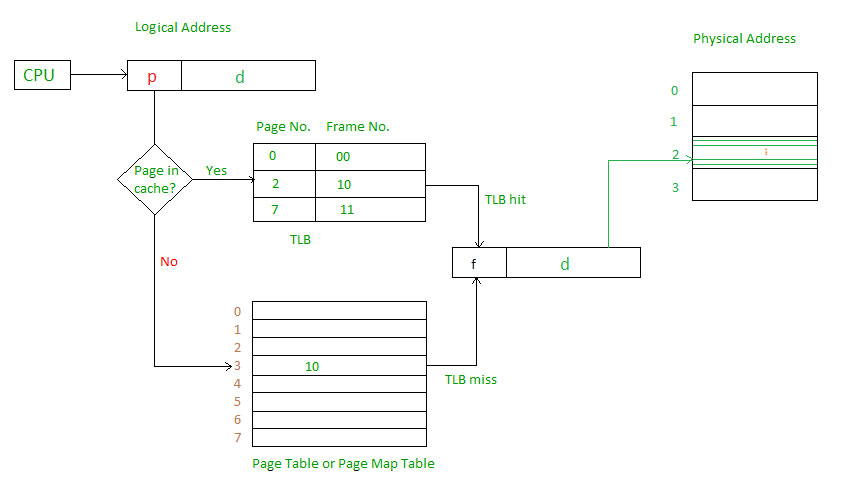

The hardware implementation of the page table can be done using dedicated registers, but this is satisfactory only if the page table is small. If the page table contains a large number of entries, we can use a TLB (Translation Look-aside Buffer), a special, small, fast look-up hardware cache.

The TLB is an associative, high-speed memory.

Each entry in TLB consists of two parts: a tag and a value.

When this associative memory is presented with an item, the item is compared with all tags simultaneously. If the item is found, the corresponding value is returned.

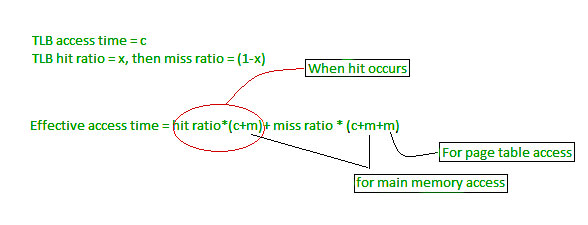

Main memory access time = m

If the page table is kept in main memory,

Effective access time = m (to access the page table entry) + m (to access the required word in memory) = 2m
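To see how a TLB improves on 2m, one commonly used textbook model adds a TLB hit ratio h and a TLB lookup time c. Both symbols and all the numbers below are assumptions introduced for this sketch and do not come from the notes: on a hit the cost is c + m, on a miss it is c + 2m.

```python
def eat_without_tlb(m):
    """Page table in main memory: one access for the entry, one for the word."""
    return 2 * m

def eat_with_tlb(m, c, h):
    """Weighted average of the TLB-hit cost (c + m) and the TLB-miss cost (c + 2m)."""
    return h * (c + m) + (1 - h) * (c + 2 * m)

m_ns = 100   # assumed main memory access time
c_ns = 20    # assumed TLB lookup time
h = 0.8      # assumed TLB hit ratio

print(eat_without_tlb(m_ns))
print(eat_with_tlb(m_ns, c_ns, h))
```

With these assumed values the effective access time drops from 200 ns to roughly 140 ns, and it approaches c + m as the hit ratio approaches 1.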

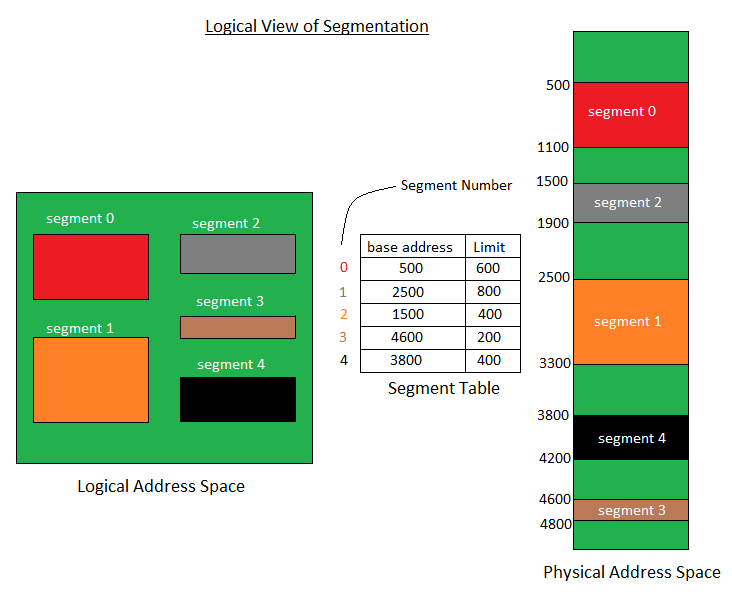

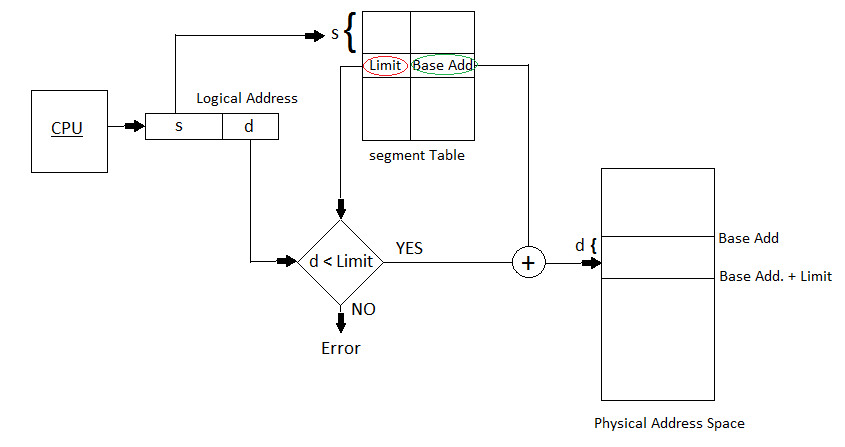

Segmentation in Operating System

A process is divided into segments. The chunks that a program is divided into, which are not necessarily all of the same size, are called segments. Segmentation gives the user’s view of the process, which paging does not. Here the user’s view is mapped to physical memory.

There are two types of segmentation:

Virtual memory segmentation –

Each process is divided into a number of segments, not all of which are resident at any one point in time.

Simple segmentation –

Each process is divided into a number of segments, all of which are loaded into memory at run time, though not necessarily contiguously.

There is no simple relationship between logical addresses and physical addresses in segmentation. A table stores the information about all such segments and is called Segment Table.

Segment Table – It maps a two-dimensional logical address into a one-dimensional physical address. Each table entry has:

Base Address: It contains the starting physical address where the segments reside in memory.

Limit: It specifies the length of the segment.

Translation of Two dimensional Logical Address to one dimensional Physical Address.

The address generated by the CPU is divided into:

Segment number (s): Number of bits required to represent the segment.

Segment offset (d): Number of bits required to represent an offset within the segment, which is bounded by the size of the segment.
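The translation from a (segment number, offset) pair to a physical address using the base and limit fields can be sketched as follows. The segment table values are hypothetical, and raising `ValueError` stands in for the trap that the hardware would generate on an illegal offset.

```python
# Hypothetical segment table: segment number -> (base address, limit).
segment_table = {
    0: (1400, 1000),
    1: (6300, 400),
    2: (4300, 400),
}

def translate_segment(s, d):
    """Map the two-dimensional address (s, d) to a one-dimensional physical address."""
    if s not in segment_table:
        raise LookupError(f"no such segment: {s}")
    base, limit = segment_table[s]
    if not 0 <= d < limit:
        # The offset must lie inside the segment; otherwise the access is illegal.
        raise ValueError(f"offset {d} is outside segment {s} (limit {limit})")
    return base + d

print(translate_segment(2, 53))    # base 4300 + offset 53
print(translate_segment(0, 999))   # last legal word of segment 0
```

Because each segment has its own base and limit, segments of different sizes can be placed anywhere in memory, and every access is checked against the segment length.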

Advantages of Segmentation –

No Internal fragmentation.

Segment Table consumes less space in comparison to Page table in paging.

Disadvantage of Segmentation –

As processes are loaded into and removed from memory, the free memory space is broken into little pieces, causing external fragmentation.

Virtual Memory in Operating System

Virtual Memory is a storage allocation scheme in which secondary memory can be addressed as though it were part of the main memory. The addresses a program may use to reference memory are distinguished from the addresses the memory system uses to identify physical storage sites, and program-generated addresses are translated automatically to the corresponding machine addresses.

The size of virtual storage is limited by the addressing scheme of the computer system and by the amount of secondary memory available, not by the actual number of main storage locations.

It is a technique that is implemented using both hardware and software. It maps memory addresses used by a program, called virtual addresses, into physical addresses in computer memory.

All memory references within a process are logical addresses that are dynamically translated into physical addresses at run time. This means that a process can be swapped in and out of the main memory such that it occupies different places in the main memory at different times during the course of execution.

A process may be broken into a number of pieces, and these pieces need not be located contiguously in main memory during execution. The combination of dynamic run-time address translation and the use of a page or segment table permits this.

If these characteristics are present then, it is not necessary that all the pages or segments are present in the main memory during execution. This means that the required pages need to be loaded into memory whenever required. Virtual memory is implemented using Demand Paging or Demand Segmentation.

Demand Paging :

The process of loading the page into memory on demand (whenever page fault occurs) is known as demand paging.

The process includes the following steps :

1. If the CPU tries to refer to a page that is currently not available in main memory, it generates an interrupt indicating a memory access fault.

2. The OS puts the interrupted process in a blocked state. For execution to proceed, the OS must bring the required page into memory.

3. The OS searches for the required page in the logical address space, which is held on secondary storage.

4. The required page is brought from the logical address space into the physical address space. Page replacement algorithms are used to decide which page in the physical address space to replace.

5. The page table is updated accordingly.

6. A signal is sent to the CPU to continue program execution, and the process is placed back into the ready state.

Hence whenever a page fault occurs these steps are followed by the operating system and the required page is brought into memory.
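The steps above can be sketched as a small simulation that counts page faults. The notes do not name a particular replacement algorithm, so FIFO (replace the page that has been resident longest) is assumed here purely for illustration; the reference string and frame count are also made up.

```python
from collections import deque

def count_page_faults(reference_string, num_frames):
    """Load pages on demand, using FIFO replacement when no frame is free."""
    resident = deque()   # pages currently in memory, oldest first
    faults = 0
    for page in reference_string:
        if page in resident:
            continue                 # page is already in memory: no fault
        faults += 1                  # page fault: the OS must bring the page in
        if len(resident) == num_frames:
            resident.popleft()       # no free frame: replace the oldest page
        resident.append(page)        # load the page and record it as resident
    return faults

references = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]   # hypothetical page references
print(count_page_faults(references, num_frames=3))
```

Each fault in this count corresponds to one pass through the steps above, so the fault count is a rough measure of how much time is spent servicing faults.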

Advantages :

More processes may be maintained in the main memory: Because we are going to load only some of the pages of any particular process, there is room for more processes. This leads to more efficient utilization of the processor because it is more likely that at least one of the more numerous processes will be in the ready state at any particular time.

A process may be larger than all of the main memory: One of the most fundamental restrictions in programming is lifted. A process larger than the main memory can be executed because of demand paging. The OS itself loads pages of a process in the main memory as required.

It allows greater multiprogramming levels by using less of the available (primary) memory for each process.

Page Fault Service Time :

The time taken to service the page fault is called page fault service time. The page fault service time includes the time taken to perform all the above six steps.

Let the main memory access time be m, the page fault service time be s, and the page fault rate be p.

Then, Effective memory access time = (p × s) + (1 − p) × m
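The formula can be checked with a small calculation. The sketch below is only illustrative: the function name and the sample values (m = 200 ns, s = 8 ms) are assumptions, not figures from these notes.

```python
def effective_access_time(p, s, m):
    """Effective memory access time for page fault rate p,
    page fault service time s and main memory access time m
    (s and m must use the same time unit)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("page fault rate must be between 0 and 1")
    return p * s + (1 - p) * m


# Assumed sample values: m = 200 ns, s = 8 ms = 8,000,000 ns.
M_NS = 200
S_NS = 8_000_000
for rate in (0.0, 0.001, 0.01):
    print(rate, effective_access_time(rate, S_NS, M_NS))
```

Even a small page fault rate dominates the result, because s is several orders of magnitude larger than m.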

Thrashing :

At any given time, only a few pages of any process are in the main memory, and therefore more processes can be maintained in memory. Furthermore, time is saved because unused pages are not swapped in and out of memory. However, the OS must be clever about how it manages this scheme. In the steady state, practically all of the main memory will be occupied with process pages, so that the processor and OS have direct access to as many processes as possible. Thus, when the OS brings one page in, it must throw another out. If it throws out a page just before it is used, then it will have to get that page again almost immediately. Too much of this leads to a condition called Thrashing: the system spends most of its time swapping pages rather than executing instructions. So a good page replacement algorithm is required.

In the given diagram, as the degree of multiprogramming increases up to a certain point (lambda), CPU utilization is very high and system resources are fully utilized. If the degree of multiprogramming is increased beyond that point, CPU utilization falls drastically: the system spends most of its time on page replacement, and the time taken to complete each process increases. This situation is called thrashing.

Causes of Thrashing :

High degree of multiprogramming : If the number of processes keeps on increasing in the memory then the number of frames allocated to each process will be decreased. So, fewer frames will be available for each process. Due to this, a page fault will occur more frequently and more CPU time will be wasted in just swapping in and out of pages and the utilization will keep on decreasing.

For example:

Let free frames = 400

Case 1: Number of processes = 100

Then, each process will get 4 frames.

Case 2: Number of processes = 400

Each process will get 1 frame.

Case 2 is a condition of thrashing: as the number of processes increases, the number of frames per process decreases. Hence CPU time is consumed just swapping pages.

Lack of Frames: If a process has fewer frames, then fewer pages of that process can reside in memory, and hence more frequent swapping in and out is required. This may lead to thrashing. Hence a sufficient number of frames must be allocated to each process in order to prevent thrashing.

Page Replacement Algorithms in Operating Systems

In an operating system that uses paging for memory management, a page replacement algorithm is needed to decide which page to replace when a new page comes in.

Page Fault – A page fault happens when a running program accesses a memory page that is mapped into the virtual address space, but not loaded in physical memory.

Since actual physical memory is much smaller than virtual memory, page faults happen. In case of page fault, Operating System might have to replace one of the existing pages with the newly needed page. Different page replacement algorithms suggest different ways to decide which page to replace. The target for all algorithms is to reduce the number of page faults.

Page Replacement Algorithms :

1. First In First Out (FIFO) –

This is the simplest page replacement algorithm. The operating system keeps track of all pages in memory in a queue, with the oldest page at the front of the queue. When a page needs to be replaced, the page at the front of the queue is selected for removal.

Example-1: Consider the page reference string 1, 3, 0, 3, 5, 6, 3 with 3 page frames. Find the number of page faults.

Initially all slots are empty, so when 1, 3, 0 arrive they are allocated to the empty slots -> 3 Page Faults.

When 3 comes, it is already in memory -> 0 Page Faults.

Then 5 comes. It is not in memory, so it replaces the oldest page, i.e. 1 -> 1 Page Fault.

6 comes. It is also not in memory, so it replaces the oldest page, i.e. 3 -> 1 Page Fault.

Finally, when 3 comes it is not in memory, so it replaces 0 -> 1 Page Fault.

Total: 6 page faults.

Belady’s anomaly – Belady’s anomaly shows that it is possible to have more page faults when the number of page frames is increased while using the First In First Out (FIFO) page replacement algorithm. For example, with the reference string 3, 2, 1, 0, 3, 2, 4, 3, 2, 1, 0, 4 and 3 slots we get 9 page faults in total, but if we increase the slots to 4, we get 10 page faults.
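A short simulation can confirm both the FIFO example (using the reference string that ends with the final reference to page 3) and the Belady's anomaly figures. This is a minimal sketch; the function name is an assumption made for illustration.

```python
from collections import deque


def fifo_page_faults(reference_string, frame_count):
    """Count page faults under FIFO replacement."""
    queue = deque()      # resident pages, oldest at the left
    resident = set()     # same pages, for fast membership tests
    faults = 0
    for page in reference_string:
        if page in resident:
            continue                     # hit: FIFO order is unchanged
        faults += 1
        if len(queue) == frame_count:    # memory full: evict the oldest page
            resident.remove(queue.popleft())
        queue.append(page)
        resident.add(page)
    return faults


print(fifo_page_faults([1, 3, 0, 3, 5, 6, 3], 3))        # 6

belady = [3, 2, 1, 0, 3, 2, 4, 3, 2, 1, 0, 4]
print(fifo_page_faults(belady, 3))                       # 9
print(fifo_page_faults(belady, 4))                       # 10
```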

2. Optimal Page replacement –

In this algorithm, the page replaced is the one that will not be used for the longest duration of time in the future.

Example-2: Consider the page reference string 7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2 with 4 page frames. Find the number of page faults.

Initially all slots are empty, so 7, 0, 1, 2 are allocated to the empty slots -> 4 Page Faults.

0 is already there -> 0 Page Faults.

When 3 comes, it takes the place of 7, because 7 is not used for the longest duration of time in the future -> 1 Page Fault.

0 is already there -> 0 Page Faults.

4 takes the place of 1 -> 1 Page Fault.

For the rest of the page reference string -> 0 Page Faults, because the pages are already in memory.

Total: 6 page faults.

Optimal page replacement is perfect, but not possible in practice as the operating system cannot know future requests. The use of Optimal Page replacement is to set up a benchmark so that other replacement algorithms can be analyzed against it.
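Although it cannot be used online, the optimal policy is easy to simulate when the whole reference string is known in advance. The sketch below is one simple way to do it (the function name is an assumption); it reproduces the count for Example-2.

```python
def optimal_page_faults(reference_string, frame_count):
    """Count page faults when the victim is always the resident page
    whose next use lies furthest in the future (or never occurs)."""
    frames = []
    faults = 0
    for index, page in enumerate(reference_string):
        if page in frames:
            continue
        faults += 1
        if len(frames) < frame_count:
            frames.append(page)
            continue
        future = reference_string[index + 1:]

        def next_use(resident):
            # Distance to the next reference; infinity if never used again.
            return future.index(resident) if resident in future else float("inf")

        victim = max(frames, key=next_use)
        frames[frames.index(victim)] = page
    return faults


print(optimal_page_faults([7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2], 4))   # 6
```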

3. Least Recently Used –

In this algorithm, the page replaced is the one that was least recently used.

Example-3: Consider the page reference string 7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2 with 4 page frames. Find the number of page faults.

Initially all slots are empty, so 7, 0, 1, 2 are allocated to the empty slots -> 4 Page Faults.

0 is already there -> 0 Page Faults.

When 3 comes, it takes the place of 7, because 7 is the least recently used page -> 1 Page Fault.

0 is already in memory -> 0 Page Faults.

4 takes the place of 1, which is now the least recently used page -> 1 Page Fault.

For the rest of the page reference string -> 0 Page Faults, because the pages are already in memory.

Total: 6 page faults.
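The LRU policy can be simulated by keeping resident pages ordered by how recently they were used. This is a minimal sketch using Python's `OrderedDict`; the function name is an assumption, and real systems usually approximate LRU in hardware rather than track exact order.

```python
from collections import OrderedDict


def lru_page_faults(reference_string, frame_count):
    """Count page faults under Least Recently Used replacement."""
    frames = OrderedDict()   # least recently used page comes first
    faults = 0
    for page in reference_string:
        if page in frames:
            frames.move_to_end(page)      # hit: mark as most recently used
            continue
        faults += 1
        if len(frames) == frame_count:
            frames.popitem(last=False)    # evict the least recently used page
        frames[page] = True
    return faults


print(lru_page_faults([7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2], 4))   # 6
```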

Allocation of frames in Operating System

An important aspect of operating systems, virtual memory is implemented using demand paging. Demand paging necessitates the development of a page-replacement algorithm and a frame allocation algorithm. Frame allocation algorithms are used if you have multiple processes; it helps decide how many frames to allocate to each process.

There are various constraints to the strategies for the allocation of frames:

You cannot allocate more than the total number of available frames.

At least a minimum number of frames should be allocated to each process. This constraint is supported by two reasons. First, as fewer frames are allocated, the page fault ratio increases, which degrades the performance of the process. Second, there should be enough frames to hold all the different pages that any single instruction can reference.

Frame allocation algorithms –

The two algorithms commonly used to allocate frames to a process are:

Equal allocation: In a system with x frames and y processes, each process gets equal number of frames, i.e. x/y. For instance, if the system has 48 frames and 9 processes, each process will get 5 frames. The three frames which are not allocated to any process can be used as a free-frame buffer pool.

Disadvantage: In systems with processes of varying sizes, it does not make much sense to give each process equal frames. Allocation of a large number of frames to a small process will eventually lead to the wastage of a large number of allocated unused frames.

Proportional allocation: Frames are allocated to each process according to the process size.

For a process pi of size si, the number of allocated frames is ai = (si/S)*m, where S is the sum of the sizes of all the processes and m is the number of frames in the system. For instance, in a system with 62 frames, if there is a process of 10KB and another process of 127KB, then the first process will be allocated (10/137)*62 = 4 frames and the other process will get (127/137)*62 = 57 frames.

Advantage: All the processes share the available frames according to their needs, rather than equally.
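Both allocation schemes reduce to integer arithmetic. The sketch below reproduces the two worked examples above; the function names are assumptions, and fractional frames are simply rounded down.

```python
def equal_allocation(total_frames, process_count):
    """Return (frames per process, frames left over for the free pool)."""
    per_process = total_frames // process_count
    leftover = total_frames - per_process * process_count
    return per_process, leftover


def proportional_allocation(total_frames, sizes):
    """Return a_i = floor((s_i / S) * m) for each process size s_i."""
    total_size = sum(sizes)
    return [size * total_frames // total_size for size in sizes]


print(equal_allocation(48, 9))                  # (5, 3)
print(proportional_allocation(62, [10, 127]))   # [4, 57]
```

Because of the rounding, proportional allocation can also leave a few frames unassigned (one frame in the 62-frame example).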

Global vs Local Allocation –

The number of frames allocated to a process can also dynamically change depending on whether you have used global replacement or local replacement for replacing pages in case of a page fault.

Local replacement: When a process needs a page which is not in the memory, it can bring in the new page and allocate it a frame from its own set of allocated frames only.

Advantage: The pages in memory for a particular process and its page fault ratio are affected by the paging behavior of only that process.

Disadvantage: A low priority process may hinder a high priority process by not making its frames available to the high priority process.

Global replacement: When a process needs a page which is not in the memory, it can bring in the new page and allocate it a frame from the set of all frames, even if that frame is currently allocated to some other process; that is, one process can take a frame from another.

Advantage: Does not hinder the performance of processes and hence results in greater system throughput.

Disadvantage: The page fault ratio of a process cannot be controlled solely by the process itself. The pages in memory for a process depend on the paging behavior of other processes as well.

UNIT 2

Process Concept

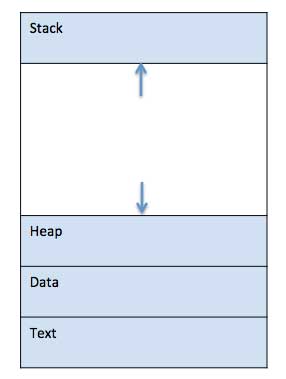

A process is basically a program in execution. The execution of a process must progress in a sequential fashion.

A process is defined as an entity which represents the basic unit of work to be implemented in the system.

To put it in simple terms, we write our computer programs in a text file and when we execute this program, it becomes a process which performs all the tasks mentioned in the program.

When a program is loaded into the memory and it becomes a process, it can be divided into four sections ─ stack, heap, text and data. The following image shows a simplified layout of a process inside main memory −

| S.N. | Component | Description |
|------|-----------|-------------|
| 1 | Stack | The process stack contains temporary data such as method/function parameters, return addresses and local variables. |
| 2 | Heap | This is memory dynamically allocated to a process during its run time. |
| 3 | Text | This contains the compiled program code. The current activity of the process is represented by the value of the Program Counter and the contents of the processor's registers. |
| 4 | Data | This section contains the global and static variables. |

Process Scheduling

Definition

The process scheduling is the activity of the process manager that handles the removal of the running process from the CPU and the selection of another process on the basis of a particular strategy.

Process scheduling is an essential part of multiprogramming operating systems. Such operating systems allow more than one process to be loaded into executable memory at a time, and the loaded processes share the CPU using time multiplexing.

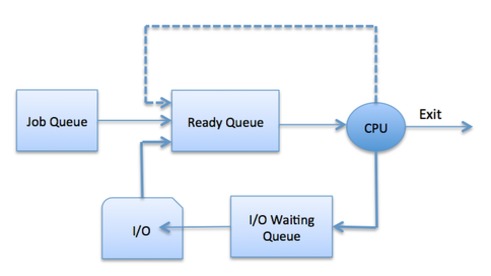

Process Scheduling Queues

The OS maintains all PCBs in Process Scheduling Queues. The OS maintains a separate queue for each of the process states and PCBs of all processes in the same execution state are placed in the same queue. When the state of a process is changed, its PCB is unlinked from its current queue and moved to its new state queue.

The Operating System maintains the following important process scheduling queues −

Job queue − This queue keeps all the processes in the system.

Ready queue − This queue keeps a set of all processes residing in main memory, ready and waiting to execute. A new process is always put in this queue.

Device queues − The processes which are blocked due to unavailability of an I/O device constitute this queue.

The OS can use different policies to manage each queue (FIFO, Round Robin, Priority, etc.). The OS scheduler determines how to move processes between the ready and run queues which can only have one entry per processor core on the system; in the above diagram, it has been merged with the CPU.

Two-State Process Model

Two-state process model refers to running and non-running states which are described below −

| S.N. | State | Description |
|------|-------|-------------|
| 1 | Running | The process that is currently being executed on the CPU. |
| 2 | Not Running | A newly created process enters the system in this state. Processes that are not running are kept in a queue, waiting for their turn to execute. Each entry in the queue is a pointer to a particular process. The queue is implemented using a linked list. The dispatcher is used as follows: when a process is interrupted, it is transferred to the waiting queue; if the process has completed or aborted, it is discarded. In either case, the dispatcher then selects a process from the queue to execute. |

Schedulers

Schedulers are special system software which handle process scheduling in various ways. Their main task is to select the jobs to be submitted into the system and to decide which process to run. Schedulers are of three types −

Long-Term Scheduler

Short-Term Scheduler

Medium-Term Scheduler

Long Term Scheduler

It is also called a job scheduler. A long-term scheduler determines which programs are admitted to the system for processing. It selects processes from the job queue and loads them into memory, where they become available for CPU scheduling.

The primary objective of the job scheduler is to provide a balanced mix of jobs, such as I/O bound and processor bound. It also controls the degree of multiprogramming. If the degree of multiprogramming is stable, then the average rate of process creation must be equal to the average departure rate of processes leaving the system.

On some systems, the long-term scheduler may be absent or minimal. Time-sharing operating systems have no long-term scheduler. The long-term scheduler is used when a process changes state from new to ready.

Short Term Scheduler

It is also called the CPU scheduler. Its main objective is to increase system performance in accordance with the chosen set of criteria. It carries out the change of a process from the ready state to the running state. The CPU scheduler selects one process from among the processes that are ready to execute and allocates the CPU to it.

Short-term schedulers, also known as dispatchers, make the decision of which process to execute next. Short-term schedulers are faster than long-term schedulers.

Medium Term Scheduler

Medium-term scheduling is a part of swapping. It removes processes from memory and so reduces the degree of multiprogramming. The medium-term scheduler is in charge of handling the swapped-out processes.

A running process may become suspended if it makes an I/O request. A suspended process cannot make any progress towards completion. In this condition, to remove the process from memory and make space for other processes, the suspended process is moved to secondary storage. This is called swapping, and the process is said to be swapped out or rolled out. Swapping may be necessary to improve the process mix.

Comparison among Schedulers

| S.N. | Long-Term Scheduler | Short-Term Scheduler | Medium-Term Scheduler |
|------|---------------------|----------------------|-----------------------|
| 1 | It is a job scheduler. | It is a CPU scheduler. | It is a process swapping scheduler. |
| 2 | Speed is lesser than the short-term scheduler. | Speed is the fastest of the three. | Speed is in between the short-term and long-term schedulers. |
| 3 | It controls the degree of multiprogramming. | It provides lesser control over the degree of multiprogramming. | It reduces the degree of multiprogramming. |
| 4 | It is almost absent or minimal in time sharing systems. | It is also minimal in time sharing systems. | It is a part of time sharing systems. |
| 5 | It selects processes from the pool and loads them into memory for execution. | It selects those processes which are ready to execute. | It can re-introduce a process into memory so that its execution can be continued. |

Scheduling algorithms

A Process Scheduler schedules different processes to be assigned to the CPU based on particular scheduling algorithms. There are six popular process scheduling algorithms which we are going to discuss in this chapter −

First-Come, First-Served (FCFS) Scheduling

Shortest-Job-Next (SJN) Scheduling

Priority Scheduling

Shortest Remaining Time

Round Robin(RR) Scheduling

Multiple-Level Queues Scheduling

These algorithms are either non-preemptive or preemptive. Non-preemptive algorithms are designed so that once a process enters the running state, it cannot be preempted until it completes its allotted time, whereas the preemptive scheduling is based on priority where a scheduler may preempt a low priority running process anytime when a high priority process enters into a ready state.

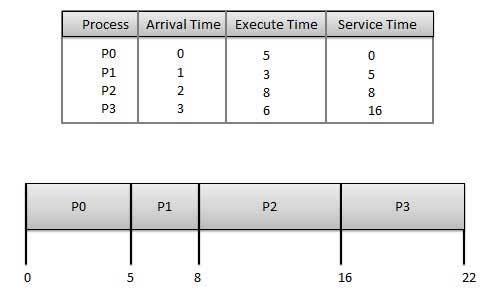

First Come First Serve (FCFS)

Jobs are executed on first come, first serve basis.

It is a non-preemptive scheduling algorithm.

Easy to understand and implement.

Its implementation is based on FIFO queue.

Poor in performance as average wait time is high.

Wait time of each process is as follows −

| Process | Wait Time : Service Time - Arrival Time |
|---------|------------------------------------------|
| P0 | 0 - 0 = 0 |
| P1 | 5 - 1 = 4 |
| P2 | 8 - 2 = 6 |
| P3 | 16 - 3 = 13 |

Average Wait Time: (0+4+6+13) / 4 = 5.75
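The wait times above can be reproduced with a few lines of code. This sketch assumes the same four processes that appear in the Shortest Job Next table (arrival times 0, 1, 2, 3 and execution times 5, 3, 8, 6); the function name and tuple layout are assumptions made for illustration.

```python
def fcfs_waiting_times(processes):
    """processes: list of (name, arrival_time, execution_time).
    Returns {name: waiting_time} under First Come First Serve."""
    clock = 0
    waits = {}
    for name, arrival, burst in sorted(processes, key=lambda p: p[1]):
        start = max(clock, arrival)     # service time of this process
        waits[name] = start - arrival   # wait = service time - arrival time
        clock = start + burst
    return waits


jobs = [("P0", 0, 5), ("P1", 1, 3), ("P2", 2, 8), ("P3", 3, 6)]
waits = fcfs_waiting_times(jobs)
print(waits)                              # {'P0': 0, 'P1': 4, 'P2': 6, 'P3': 13}
print(sum(waits.values()) / len(waits))   # 5.75
```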

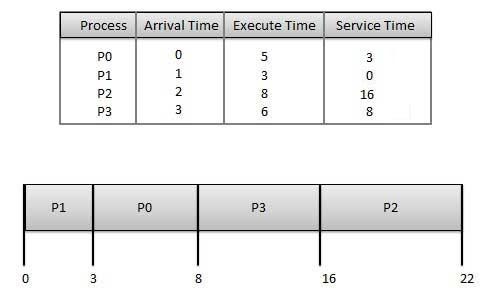

Shortest Job Next (SJN)

This is also known as shortest job first, or SJF.

This is a non-preemptive scheduling algorithm.

Best approach to minimize waiting time.

Easy to implement in Batch systems where required CPU time is known in advance.

Impossible to implement in interactive systems where required CPU time is not known.

The processor should know in advance how much time the process will take.

Given: Table of processes, and their Arrival time, Execution time

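| Process | Arrival Time | Execution Time | Service Time |
|---------|--------------|----------------|--------------|
| P0 | 0 | 5 | 0 |
| P1 | 1 | 3 | 5 |
| P2 | 2 | 8 | 14 |
| P3 | 3 | 6 | 8 |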

Waiting time of each process is as follows −

| Process | Waiting Time |
|---------|--------------|
| P0 | 0 - 0 = 0 |
| P1 | 5 - 1 = 4 |
| P2 | 14 - 2 = 12 |
| P3 | 8 - 3 = 5 |

Average Wait Time: (0 + 4 + 12 + 5)/4 = 21 / 4 = 5.25
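A minimal sketch of non-preemptive Shortest Job Next, using the process table above. The function name is an assumption; ties between equally short jobs are broken by arrival time, which is also an assumption since the notes do not specify it.

```python
def sjn_waiting_times(processes):
    """processes: list of (name, arrival_time, execution_time).
    Returns {name: waiting_time} under non-preemptive SJN."""
    pending = sorted(processes, key=lambda p: p[1])
    clock = 0
    waits = {}
    while pending:
        ready = [p for p in pending if p[1] <= clock]
        if not ready:                 # CPU idle until the next arrival
            clock = pending[0][1]
            continue
        job = min(ready, key=lambda p: (p[2], p[1]))   # shortest job first
        pending.remove(job)
        waits[job[0]] = clock - job[1]
        clock += job[2]               # run the job to completion
    return waits


jobs = [("P0", 0, 5), ("P1", 1, 3), ("P2", 2, 8), ("P3", 3, 6)]
waits = sjn_waiting_times(jobs)
print(waits)                              # {'P0': 0, 'P1': 4, 'P3': 5, 'P2': 12}
print(sum(waits.values()) / len(waits))   # 5.25
```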

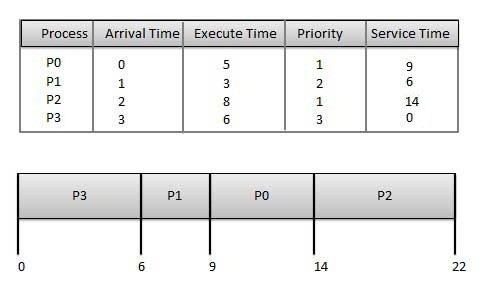

Priority Based Scheduling

Priority scheduling is a non-preemptive algorithm and one of the most common scheduling algorithms in batch systems.

Each process is assigned a priority. Process with highest priority is to be executed first and so on.

Processes with same priority are executed on first come first served basis.

Priority can be decided based on memory requirements, time requirements or any other resource requirement.

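Given: Table of processes, and their Arrival time, Execution time, and priority. Here 1 is considered the lowest priority.

| Process | Arrival Time | Execution Time | Priority | Service Time |
|---------|--------------|----------------|----------|--------------|
| P0 | 0 | 5 | 1 | 0 |
| P1 | 1 | 3 | 2 | 11 |
| P2 | 2 | 8 | 1 | 14 |
| P3 | 3 | 6 | 3 | 5 |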

Waiting time of each process is as follows −

| Process | Waiting Time |
|---------|--------------|
| P0 | 0 - 0 = 0 |
| P1 | 11 - 1 = 10 |
| P2 | 14 - 2 = 12 |
| P3 | 5 - 3 = 2 |

Average Wait Time: (0 + 10 + 12 + 2)/4 = 24 / 4 = 6
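The same table can be worked through in code. This is a sketch of non-preemptive priority scheduling in which a larger number means a higher priority (1 is the lowest, as stated above) and equal priorities are served first come, first served. The function name and tuple layout are assumptions.

```python
def priority_waiting_times(processes):
    """processes: list of (name, arrival_time, execution_time, priority).
    Returns {name: waiting_time}; larger priority value runs first."""
    pending = sorted(processes, key=lambda p: p[1])
    clock = 0
    waits = {}
    while pending:
        ready = [p for p in pending if p[1] <= clock]
        if not ready:                 # CPU idle until the next arrival
            clock = pending[0][1]
            continue
        # Highest priority first; ties go to the earliest arrival.
        job = max(ready, key=lambda p: (p[3], -p[1]))
        pending.remove(job)
        waits[job[0]] = clock - job[1]
        clock += job[2]
    return waits


jobs = [("P0", 0, 5, 1), ("P1", 1, 3, 2), ("P2", 2, 8, 1), ("P3", 3, 6, 3)]
waits = priority_waiting_times(jobs)
print(waits)                              # {'P0': 0, 'P3': 2, 'P1': 10, 'P2': 12}
print(sum(waits.values()) / len(waits))   # 6.0
```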

Shortest Remaining Time

Shortest remaining time (SRT) is the preemptive version of the SJN algorithm.

The processor is allocated to the job closest to completion but it can be preempted by a newer ready job with shorter time to completion.

Impossible to implement in interactive systems where required CPU time is not known.

It is often used in batch environments where short jobs need to be given preference.

Round Robin Scheduling

Round Robin is a preemptive process scheduling algorithm.

Each process is given a fixed time to execute, called a quantum.

Once a process has executed for its quantum, it is preempted and another process executes for its quantum.

Context switching is used to save states of preempted processes.

Wait time of each process is as follows −

| Process | Wait Time : Service Time - Arrival Time |
|---------|------------------------------------------|
| P0 | (0 - 0) + (12 - 3) = 9 |
| P1 | (3 - 1) = 2 |
| P2 | (6 - 2) + (14 - 9) + (20 - 17) = 12 |
| P3 | (9 - 3) + (17 - 12) = 11 |

Average Wait Time: (9+2+12+11) / 4 = 8.5
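The wait times above are consistent with a quantum of 3 time units applied to the same four processes used in the earlier examples; both of these are assumptions inferred from the figures, since the text does not state them. Under those assumptions, a minimal simulation looks like this (waiting time is computed as finish time minus arrival time minus execution time):

```python
from collections import deque


def round_robin_waiting_times(processes, quantum):
    """processes: list of (name, arrival_time, execution_time).
    Returns {name: waiting_time} under Round Robin."""
    arrivals = deque(sorted(processes, key=lambda p: p[1]))
    remaining = {name: burst for name, _, burst in processes}
    ready = deque()
    finish = {}
    clock = 0
    while arrivals or ready:
        if not ready:                              # idle until next arrival
            clock = max(clock, arrivals[0][1])
        while arrivals and arrivals[0][1] <= clock:
            ready.append(arrivals.popleft()[0])
        name = ready.popleft()
        run = min(quantum, remaining[name])
        clock += run
        remaining[name] -= run
        # Processes that arrived during this slice queue up first.
        while arrivals and arrivals[0][1] <= clock:
            ready.append(arrivals.popleft()[0])
        if remaining[name] > 0:
            ready.append(name)                     # preempted: back of queue
        else:
            finish[name] = clock
    return {name: finish[name] - arrival - burst
            for name, arrival, burst in processes}


jobs = [("P0", 0, 5), ("P1", 1, 3), ("P2", 2, 8), ("P3", 3, 6)]
waits = round_robin_waiting_times(jobs, quantum=3)
print(waits)                              # {'P0': 9, 'P1': 2, 'P2': 12, 'P3': 11}
print(sum(waits.values()) / len(waits))   # 8.5
```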

Multiple-Level Queues Scheduling

Multiple-level queues are not an independent scheduling algorithm. They make use of other existing algorithms to group and schedule jobs with common characteristics.

Multiple queues are maintained for processes with common characteristics.

Each queue can have its own scheduling algorithms.

Priorities are assigned to each queue.

For example, CPU-bound jobs can be scheduled in one queue and all I/O-bound jobs in another queue. The Process Scheduler then alternately selects jobs from each queue and assigns them to the CPU based on the algorithm assigned to the queue.

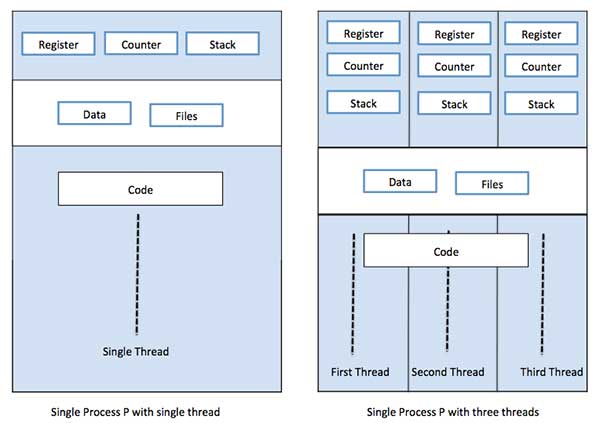

Multi-Threading

What is Thread?

A thread is a flow of execution through the process code, with its own program counter that keeps track of which instruction to execute next, system registers which hold its current working variables, and a stack which contains the execution history.

A thread shares some information with its peer threads, such as the code segment, the data segment and open files. When one thread alters a shared memory item, all other threads see the change.

A thread is also called a lightweight process. Threads provide a way to improve application performance through parallelism. Threads represent a software approach to improving operating system performance by reducing overhead; in other respects a thread is equivalent to a classical process.

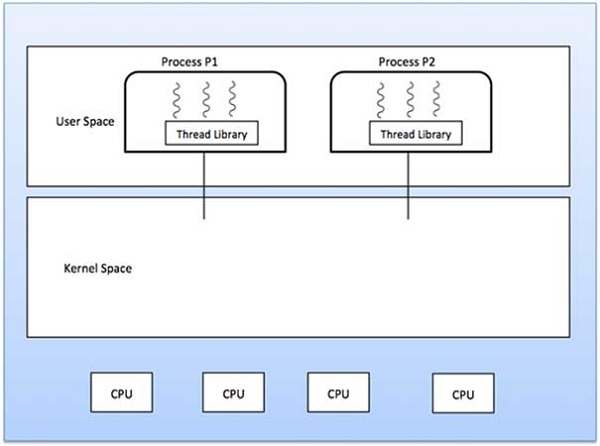

Each thread belongs to exactly one process and no thread can exist outside a process. Each thread represents a separate flow of control. Threads have been successfully used in implementing network servers and web servers. They also provide a suitable foundation for parallel execution of applications on shared memory multiprocessors. The following figure shows the working of a single-threaded and a multithreaded process.

Difference between Process and Thread

| S.N. | Process | Thread |
|------|---------|--------|
| 1 | Process is heavy weight or resource intensive. | Thread is light weight, taking fewer resources than a process. |
| 2 | Process switching needs interaction with the operating system. | Thread switching does not need to interact with the operating system. |
| 3 | In multiple processing environments, each process executes the same code but has its own memory and file resources. | All threads can share the same set of open files and child processes. |
| 4 | If a single-threaded process is blocked, it can do no further work until it is unblocked. | While one thread is blocked and waiting, a second thread in the same task can run. |
| 5 | Multiple processes without using threads use more resources. | Multiple threaded processes use fewer resources. |
| 6 | In multiple processes, each process operates independently of the others. | One thread can read, write or change another thread's data. |

Advantages of Thread

Threads minimize the context switching time.

Use of threads provides concurrency within a process.

Efficient communication.

It is more economical to create and context switch threads.

Threads allow utilization of multiprocessor architectures to a greater scale and efficiency.

Types of Thread

Threads are implemented in following two ways −

User Level Threads − User managed threads.

Kernel Level Threads − Operating System managed threads acting on kernel, an operating system core.

User Level Threads

In this case, the kernel is not aware of the existence of threads; thread management is done by a thread library. The thread library contains code for creating and destroying threads, for passing messages and data between threads, for scheduling thread execution and for saving and restoring thread contexts. The application starts with a single thread.

Advantages

Thread switching does not require Kernel mode privileges.

User level thread can run on any operating system.

Scheduling can be application specific in the user level thread.

User level threads are fast to create and manage.

Disadvantages

In a typical operating system, most system calls are blocking, so when one user level thread makes a blocking call the entire process is blocked.

Multithreaded application cannot take advantage of multiprocessing.

Process Synchronization

On the basis of synchronization, processes are categorized as one of the following two types:

Independent Process : Execution of one process does not affect the execution of other processes.

Cooperative Process : Execution of one process affects the execution of other processes.

The process synchronization problem arises in the case of cooperative processes, because resources are shared among cooperative processes.

Race Condition

When more than one process executes the same code or accesses the same memory or shared variable, there is a possibility that the output or the value of the shared variable is wrong. The processes are, in effect, racing each other to have their result take effect, and this condition is known as a race condition. When several processes access and manipulate the same data concurrently, the outcome depends on the particular order in which the accesses take place.

A race condition is a situation that may occur inside a critical section. This happens when the result of multiple thread execution in the critical section differs according to the order in which the threads execute.

Race conditions in critical sections can be avoided if the critical section is treated as an atomic instruction. Also, proper thread synchronization using locks or atomic variables can prevent race conditions.
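As an illustration of the lock-based approach, the sketch below lets several threads increment a shared counter. The increment is a read-modify-write sequence, so it is placed inside a critical section guarded by a lock. The names `counter`, `deposit` and `run` are assumptions made for this example.

```python
import threading

counter = 0
counter_lock = threading.Lock()


def deposit(times):
    """Increment the shared counter `times` times."""
    global counter
    for _ in range(times):
        with counter_lock:     # critical section: one thread at a time
            counter += 1       # read-modify-write on shared data


def run(thread_count=4, times=10_000):
    """Start the worker threads, wait for them, return the final count."""
    global counter
    counter = 0
    threads = [threading.Thread(target=deposit, args=(times,))
               for _ in range(thread_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter


print(run())   # 40000
```

Without the lock, two threads could read the same old value and one update could be lost; with it, the result is always thread_count × times.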

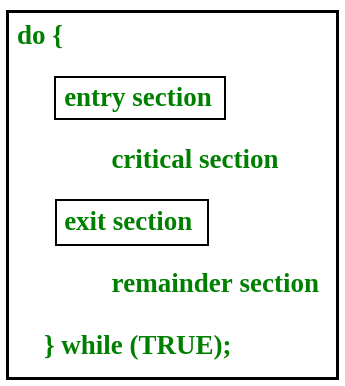

Critical Section Problem

Critical section is a code segment that can be accessed by only one process at a time. Critical section contains shared variables which need to be synchronized to maintain consistency of data variables.

In the entry section, the process requests for entry in the Critical Section.

Any solution to the critical section problem must satisfy three requirements:

Mutual Exclusion : If a process is executing in its critical section, then no other process is allowed to execute in the critical section.

Progress : If no process is executing in the critical section and other processes are waiting outside the critical section, then only those processes that are not executing in their remainder section can participate in deciding which will enter in the critical section next, and the selection can not be postponed indefinitely.

Bounded Waiting : A bound must exist on the number of times that other processes are allowed to enter their critical sections after a process has made a request to enter its critical section and before that request is granted.

Critical Section in Synchronization

Critical Section:

When more than one process accesses the same code segment, that segment is known as a critical section. A critical section contains shared variables or resources which need to be synchronized to maintain the consistency of data variables.

In simple terms, a critical section is a group of instructions/statements or a region of code that needs to be executed atomically, such as code accessing a resource (file, input or output port, global data, etc.).

In concurrent programming, if one thread tries to change the value of shared data at the same time as another thread tries to read the value (i.e. a data race across threads), the result is unpredictable.

Access to such shared variables (shared memory, shared files, shared ports, etc.) needs to be synchronized. A few programming languages have built-in support for synchronization.

Semaphores in Process Synchronization

The semaphore was proposed by Dijkstra in 1965. It is a very significant technique for managing concurrent processes by using a simple integer value, which is known as a semaphore. A semaphore is simply an integer variable that is shared between threads. This variable is used to solve the critical section problem and to achieve process synchronization in a multiprocessing environment.

Semaphores are of two types:

Binary Semaphore –

This is also known as mutex lock. It can have only two values – 0 and 1. Its value is initialized to 1. It is used to implement the solution of critical section problems with multiple processes.

Counting Semaphore –

Its value can range over an unrestricted domain. It is used to control access to a resource that has multiple instances.

First, look at two operations that can be used to access and change the value of the semaphore variable.
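The two operations are conventionally called P (wait) and V (signal). The following is a minimal busy-waiting sketch, not a production implementation: the class name is an assumption, and a `threading.Lock` stands in for the hardware support that makes the test-and-decrement step atomic.

```python
import threading


class BusyWaitSemaphore:
    """Semaphore whose P operation spins until the value is positive."""

    def __init__(self, value=1):
        self._value = value
        self._guard = threading.Lock()   # makes each check-and-update atomic

    def P(self):
        """wait(): loop until the value is positive, then decrement it."""
        while True:
            with self._guard:
                if self._value > 0:
                    self._value -= 1
                    return
            # value was 0: go round again (this loop is the busy waiting)

    def V(self):
        """signal(): increment the value, letting one waiter proceed."""
        with self._guard:
            self._value += 1


def demo(thread_count=4, increments=200):
    """Protect a shared total with a binary semaphore."""
    sem = BusyWaitSemaphore(1)
    total = [0]

    def worker():
        for _ in range(increments):
            sem.P()              # entry section
            total[0] += 1        # critical section
            sem.V()              # exit section

    threads = [threading.Thread(target=worker) for _ in range(thread_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return total[0]


print(demo())   # 800
```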

Problem in this implementation of semaphore :

The main problem with semaphores is that they require busy waiting. If a process is in the critical section, then other processes trying to enter the critical section must wait until the critical section is no longer occupied by any process.

Whenever a process waits, it continuously checks the semaphore value (the loop while (s==0); in the P operation) and wastes CPU cycles.

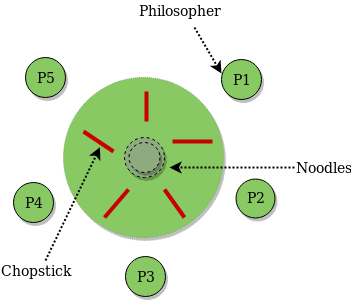

Dining Philosopher Problem Using Semaphores

The Dining Philosopher Problem – The Dining Philosopher Problem states that K philosophers are seated around a circular table with one chopstick between each pair of philosophers. A philosopher may eat if he can pick up the two chopsticks adjacent to him. Each chopstick may be picked up by either of its adjacent philosophers, but not by both at the same time.

Semaphore Solution to Dining Philosopher –

Each philosopher is represented by the following pseudocode:

    process P[i]
        while true do
        {   THINK;
            PICKUP(CHOPSTICK[i], CHOPSTICK[(i+1) mod 5]);
            EAT;
            PUTDOWN(CHOPSTICK[i], CHOPSTICK[(i+1) mod 5])
        }

There are three states of the philosopher: THINKING, HUNGRY, and EATING. Here there are two semaphores: Mutex and a semaphore array for the philosophers. Mutex is used such that no two philosophers may access the pickup or putdown at the same time. The array is used to control the behavior of each philosopher. But, semaphores can result in deadlock due to programming errors.
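The scheme just described (three states, one mutex, one semaphore per philosopher) can be sketched as follows. This is an illustrative version that runs each philosopher for a fixed number of rounds so that it terminates; the names `try_to_eat`, `may_eat` and `dine` are assumptions.

```python
import threading

N = 5
THINKING, HUNGRY, EATING = 0, 1, 2

state = [THINKING] * N
mutex = threading.Lock()                                # guards `state`
may_eat = [threading.Semaphore(0) for _ in range(N)]    # one per philosopher
meals = [0] * N


def left(i):
    return (i - 1) % N


def right(i):
    return (i + 1) % N


def try_to_eat(i):
    """Let philosopher i eat if hungry and neither neighbour is eating.
    Must be called with `mutex` held."""
    if (state[i] == HUNGRY and state[left(i)] != EATING
            and state[right(i)] != EATING):
        state[i] = EATING
        may_eat[i].release()


def pickup(i):
    with mutex:
        state[i] = HUNGRY
        try_to_eat(i)
    may_eat[i].acquire()      # blocks until both chopsticks are available


def putdown(i):
    with mutex:
        state[i] = THINKING
        try_to_eat(left(i))   # a neighbour may now be able to eat
        try_to_eat(right(i))


def philosopher(i, rounds):
    for _ in range(rounds):
        pickup(i)             # THINK happens before this call
        meals[i] += 1         # EAT
        putdown(i)


def dine(rounds=3):
    meals[:] = [0] * N
    threads = [threading.Thread(target=philosopher, args=(i, rounds))
               for i in range(N)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return list(meals)


print(dine())   # [3, 3, 3, 3, 3]
```

Because a philosopher only starts eating when both neighbours are not eating, no one ever holds a single chopstick while waiting for the other, which is what avoids deadlock in this variant.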

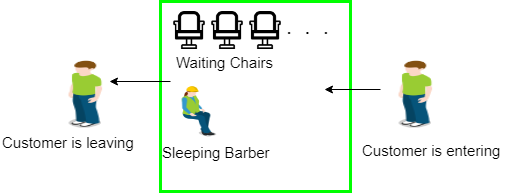

Sleeping Barber problem in Process Synchronization

Problem : The analogy is based upon a hypothetical barber shop with one barber. The shop has one barber, one barber chair, and n chairs in which waiting customers, if there are any, can sit.

If there is no customer, then the barber sleeps in his own chair.

When a customer arrives, he has to wake up the barber.

If there are many customers and the barber is cutting a customer’s hair, then the remaining customers either wait if there are empty chairs in the waiting room or they leave if no chairs are empty.

Solution : The solution to this problem uses three semaphores. The first is for the customers and counts the number of customers present in the waiting room (the customer in the barber chair is not included because he is not waiting). The second, for the barber, takes the value 0 or 1 and indicates whether the barber is idle or working. The third, a mutex, provides the mutual exclusion required while the shared state is updated. In the solution, a record is kept of the number of customers waiting in the waiting room; if the number of waiting customers equals the number of chairs in the waiting room, an arriving customer leaves the barbershop.
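The three-semaphore solution can be sketched as below. This is a simplified illustration: the names (`customers`, `barber_ready`, `simulate`) and the choice of 3 waiting chairs are assumptions, the haircut itself takes no time, and the barber runs as a daemon thread so that the program can exit while he sleeps.

```python
import threading

CHAIRS = 3                                # waiting-room chairs (assumed value)
customers = threading.Semaphore(0)        # counts waiting customers
barber_ready = threading.Semaphore(0)     # barber signals the next customer
mutex = threading.Lock()                  # protects the counters below
waiting = 0
served = 0
turned_away = 0


def barber():
    global waiting
    while True:
        customers.acquire()               # sleep until a customer arrives
        with mutex:
            waiting -= 1                  # take one customer from the room
        barber_ready.release()            # call the customer to the chair


def customer():
    global waiting, served, turned_away
    with mutex:
        if waiting == CHAIRS:             # no free chair: leave the shop
            turned_away += 1
            return
        waiting += 1
    customers.release()                   # wake the barber if he is asleep
    barber_ready.acquire()                # wait to be called
    with mutex:
        served += 1                       # haircut done


def simulate(customer_count):
    global waiting, served, turned_away
    waiting = served = turned_away = 0
    threading.Thread(target=barber, daemon=True).start()
    threads = [threading.Thread(target=customer)
               for _ in range(customer_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return served, turned_away


done, left_shop = simulate(10)
print(done + left_shop)   # 10: every customer was either served or left
```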

Introduction of Deadlock in Operating System

Deadlock is a situation where a set of processes are blocked because each process is holding a resource and waiting for another resource acquired by some other process.

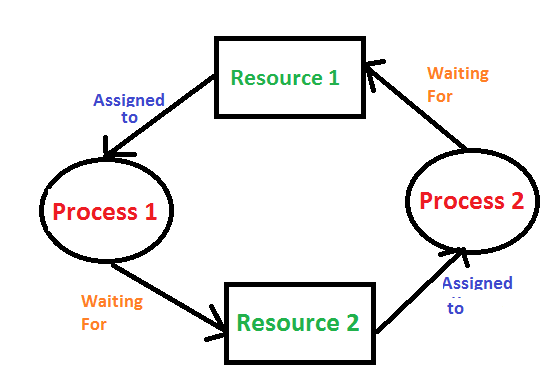

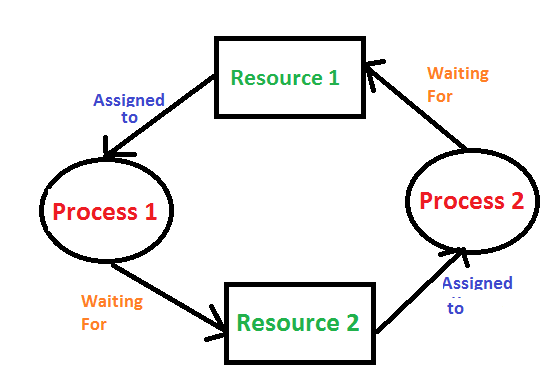

Consider an example when two trains are coming toward each other on the same track and there is only one track, none of the trains can move once they are in front of each other. A similar situation occurs in operating systems when there are two or more processes that hold some resources and wait for resources held by other(s). For example, in the below diagram, Process 1 is holding Resource 1 and waiting for resource 2 which is acquired by process 2, and process 2 is waiting for resource 1.

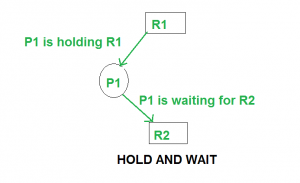

Deadlock can arise if the following four conditions hold simultaneously (Necessary Conditions)

Mutual Exclusion: At least one resource is non-shareable (only one process can use it at a time).

Hold and Wait: A process is holding at least one resource and waiting for additional resources held by other processes.

No Preemption: A resource cannot be taken from a process unless the process releases the resource.

Circular Wait: A set of processes are waiting for each other in circular form, each waiting for a resource held by the next process in the chain.

Methods for handling deadlock

There are three ways to handle deadlock

1) Deadlock prevention or avoidance: The idea is to not let the system enter a deadlock state.

Looking at each category individually, prevention is done by negating one of the above-mentioned necessary conditions for deadlock.

Avoidance looks ahead. With the avoidance strategy, we have to make an assumption: all information about the resources that a process will need must be known before the process executes. Banker’s algorithm (devised by Dijkstra) is used to avoid deadlock.

2) Deadlock detection and recovery: Let deadlock occur, detect it, and then recover, for example by preempting resources.

3) Ignore the problem altogether: If deadlock is very rare, then let it happen and reboot the system. This is the approach that both Windows and UNIX take.

Deadlock Detection Algorithm

If a system does not employ either a deadlock prevention or a deadlock avoidance algorithm, then a deadlock situation may occur. In this case, the system must:

Apply an algorithm to examine the state of the system to determine whether deadlock has occurred.

Apply an algorithm to recover from the deadlock.

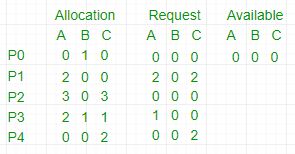

Deadlock Avoidance Algorithm / Banker’s Algorithm:

The algorithm employs several time-varying data structures:

Available –

A vector of length m indicates the number of available resources of each type.

Allocation –

An n*m matrix defines the number of resources of each type currently allocated to each process. Columns represent resource types and rows represent processes.

Request –

An n*m matrix indicates the current request of each process. If request[i][j] equals k then process Pi is requesting k more instances of resource type Rj.

The deadlock detection algorithm follows the same pattern as the safety algorithm of Banker’s algorithm, but it checks the current Request of each process rather than its maximum remaining need.

The algorithm for finding out whether a system is in a deadlocked state can be described as follows:

Steps of Algorithm:

1. Let Work and Finish be vectors of length m and n respectively. Initialize Work = Available. For i = 0, 1, …, n-1, if Allocation[i] = 0, then Finish[i] = true; otherwise, Finish[i] = false.

2. Find an index i such that both

a) Finish[i] == false

b) Request[i] <= Work

If no such i exists, go to step 4.

3. Work = Work + Allocation[i]

Finish[i] = true

Go to step 2.

4. If Finish[i] == false for some i, 0 <= i < n, then the system is in a deadlocked state. Moreover, if Finish[i] == false, then process Pi is deadlocked.

For example, consider five processes P0 to P4 and three resource types, with Available = [0, 0, 0].

Initially, Work = [0, 0, 0] &

Finish = [false, false, false, false, false]

i=0 is selected as both Finish[0] = false and [0, 0, 0]<=[0, 0, 0].

Work =[0, 0, 0]+[0, 1, 0] =>[0, 1, 0] &

Finish = [true, false, false, false, false].

i=2 is selected as both Finish[2] = false and [0, 0, 0]<=[0, 1, 0].

Work =[0, 1, 0]+[3, 0, 3] =>[3, 1, 3] &

Finish = [true, false, true, false, false].

i=1 is selected as both Finish[1] = false and [2, 0, 2]<=[3, 1, 3].

Work =[3, 1, 3]+[2, 0, 0] =>[5, 1, 3] &

Finish = [true, true, true, false, false].

i=3 is selected as both Finish[3] = false and [1, 0, 0]<=[5, 1, 3].

Work =[5, 1, 3]+[2, 1, 1] =>[7, 2, 4] &

Finish = [true, true, true, true, false].

i=4 is selected as both Finish[4] = false and [0, 0, 2]<=[7, 2, 4].

Work =[7, 2, 4]+[0, 0, 2] =>[7, 2, 6] &

Finish = [true, true, true, true, true].

Since Finish is a vector of all true it means there is no deadlock in this example.
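The detection steps can be sketched in Python as follows. The function name detect_deadlock is hypothetical, and the Allocation and Request rows are read off the worked trace above (each Request[i] from the comparison line, each Allocation[i] from the vector added to Work). The sketch marks a process as finished up front only when it holds no resources, which is the textbook initialization and an assumption here. It scans processes in index order, so it may finish them in a different order than the trace while reaching the same final Work.

```python
def detect_deadlock(available, allocation, request):
    """Return (deadlocked process indices, final Work vector)."""
    n = len(allocation)
    work = list(available)
    # A process that holds nothing cannot be part of a deadlock.
    finish = [all(a == 0 for a in allocation[i]) for i in range(n)]

    progress = True
    while progress:
        progress = False
        for i in range(n):
            # Step 2: unfinished process whose request fits in Work.
            if not finish[i] and all(r <= w for r, w in zip(request[i], work)):
                # Step 3: assume it completes and returns its allocation.
                work = [w + a for w, a in zip(work, allocation[i])]
                finish[i] = True
                progress = True

    # Step 4: any process still unfinished is deadlocked.
    return [i for i in range(n) if not finish[i]], work

available = [0, 0, 0]
allocation = [[0, 1, 0], [2, 0, 0], [3, 0, 3], [2, 1, 1], [0, 0, 2]]
request = [[0, 0, 0], [2, 0, 2], [0, 0, 0], [1, 0, 0], [0, 0, 2]]

deadlocked, final_work = detect_deadlock(available, allocation, request)
print(deadlocked, final_work)  # [] [7, 2, 6]
```

If P2 additionally requested one instance of the third resource type (Request[2] = [0, 0, 1]), only P0 could finish and the function would report P1 to P4 as deadlocked.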

Deadlock Detection And Recovery

Deadlock Detection :

1. If resources have a single instance –

In this case, deadlock can be detected by running an algorithm that checks for a cycle in the Resource Allocation Graph. When every resource has a single instance, the presence of a cycle in the graph is both a necessary and a sufficient condition for deadlock.

In the above diagram, resource 1 and resource 2 have single instances. There is a cycle R1 → P1 → R2 → P2 → R1, so deadlock is confirmed.

2. If there are multiple instances of resources –

A cycle is a necessary but not a sufficient condition for deadlock. In this case, the system may or may not be in deadlock, depending on the situation.
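For the single-instance case, cycle detection in the Resource Allocation Graph can be sketched with a depth-first search. The dictionary representation and the function name has_cycle are assumptions for illustration. An edge R → P means resource R is held by process P, and an edge P → R means process P is waiting for R.

```python
def has_cycle(graph):
    """Return True if the directed graph (dict: node -> successors) has a cycle."""
    WHITE, GRAY, BLACK = 0, 1, 2   # unvisited, on current path, fully explored
    color = {node: WHITE for node in graph}

    def visit(node):
        color[node] = GRAY
        for nxt in graph.get(node, []):
            state = color.get(nxt, WHITE)
            if state == GRAY:      # back edge to the current path: cycle
                return True
            if state == WHITE and visit(nxt):
                return True
        color[node] = BLACK
        return False

    return any(color[node] == WHITE and visit(node) for node in graph)

# The two-process example: P1 holds R1 and waits for R2,
# P2 holds R2 and waits for R1.
rag = {"R1": ["P1"], "P1": ["R2"], "R2": ["P2"], "P2": ["R1"]}
print(has_cycle(rag))  # True
```

With multiple instances per resource, a True result from this check would only indicate a possible deadlock.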

Deadlock Recovery :

A traditional operating system such as Windows doesn’t deal with deadlock recovery as it is a time and space-consuming process. Real-time operating systems use Deadlock recovery.

Killing the process –

There are two options: kill all the processes involved in the deadlock at once, or kill them one by one. In the second case, check for deadlock again after killing each process and repeat until the system recovers from deadlock. Killing processes helps the system break the circular wait condition.

Resource Preemption –

Resources are preempted from the processes involved in the deadlock, and the preempted resources are allocated to other processes so that the system can recover from deadlock. This approach can lead to starvation if the same process is repeatedly chosen as the victim.

Deadlock Prevention And Avoidance

Deadlock Characteristics

As discussed earlier, deadlock has the following characteristics.

Mutual Exclusion

Hold and Wait

No preemption

Circular wait

Deadlock Prevention

We can prevent Deadlock by eliminating any of the above four conditions.

Eliminate Mutual Exclusion

It is not possible to eliminate mutual exclusion, because some resources, such as the tape drive and printer, are inherently non-shareable.

Eliminate Hold and wait

One approach is to allocate all required resources to the process before the start of its execution. This eliminates the hold and wait condition, but it leads to low device utilization. For example, if a process requires a printer only at a later time and the printer is allocated before the start of its execution, the printer remains blocked until the process has completed its execution.

Another approach is to let the process make a new request for resources only after releasing its current set of resources. This solution may lead to starvation.

Eliminate No Preemption

Preempt resources from a process when those resources are required by other, higher-priority processes.

Eliminate Circular Wait

Each resource is assigned a unique number. A process can request resources only in increasing (or only in decreasing) order of numbering.

For example, with increasing order, if process P1 has been allocated resource R5, a later request by P1 for R4 or R3 (numbered lower than R5) will not be granted; only requests for resources numbered higher than R5 will be granted.
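The ordering rule can be sketched as a small checker. The class OrderedAllocator and its methods are hypothetical names introduced for this illustration. The sketch only enforces the increasing-order rule per process; it deliberately ignores whether the resource is currently free.

```python
class OrderedAllocator:
    """Grant a request only if it respects increasing resource numbering."""

    def __init__(self):
        self.held = {}  # process name -> set of resource numbers it holds

    def request(self, process, resource):
        held = self.held.setdefault(process, set())
        # Reject any resource numbered at or below the highest one held.
        if held and resource <= max(held):
            return False
        held.add(resource)
        return True

    def release(self, process, resource):
        self.held.get(process, set()).discard(resource)

alloc = OrderedAllocator()
print(alloc.request("P1", 5))  # True: P1 holds nothing yet
print(alloc.request("P1", 4))  # False: R4 is numbered lower than R5
print(alloc.request("P1", 7))  # True: R7 is numbered higher than R5
```

Because every process climbs the numbering in the same direction, no chain of processes can wait on each other in a circle.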

Deadlock Avoidance

Deadlock avoidance can be done with Banker’s Algorithm.

Banker’s Algorithm

Banker’s Algorithm is a resource allocation and deadlock avoidance algorithm that tests every request made by processes for resources. It checks for a safe state: if the system remains in a safe state after granting the request, it allows the request; if not, it does not allow the request made by the process.

Inputs to Banker’s Algorithm:

Maximum need of resources for each process.

Resources currently allocated to each process.

Resources currently free (available) in the system.

The request will only be granted under the below conditions:

If the request made by the process is less than or equal to the remaining need (maximum need minus current allocation) of that process.

If the request made by the process is less than or equal to the freely available resources in the system.

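Example: Total resources in system:

| A | B | C | D |
|---|---|---|---|
| 6 | 5 | 7 | 6 |

Available system resources are:

| A | B | C | D |
|---|---|---|---|
| 3 | 1 | 1 | 2 |

Processes (currently allocated resources):

| Process | A | B | C | D |
|---|---|---|---|---|
| P1 | 1 | 2 | 2 | 1 |
| P2 | 1 | 0 | 3 | 3 |
| P3 | 1 | 2 | 1 | 0 |

Processes (maximum resources):

| Process | A | B | C | D |
|---|---|---|---|---|
| P1 | 3 | 3 | 2 | 2 |
| P2 | 1 | 2 | 3 | 4 |
| P3 | 1 | 3 | 5 | 0 |

Need = maximum resources - currently allocated resources.

Processes (need resources):

| Process | A | B | C | D |
|---|---|---|---|---|
| P1 | 2 | 1 | 0 | 1 |
| P2 | 0 | 2 | 0 | 1 |
| P3 | 0 | 1 | 4 | 0 |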
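Using the numbers from this example, the safe-state check and the request test can be sketched as below. The function names find_safe_sequence and can_grant are hypothetical, and the two sample requests are made up purely to illustrate one granted and one refused case.

```python
def find_safe_sequence(available, allocation, maximum):
    """Return a safe order of process names, or None if the state is unsafe."""
    names = list(allocation)
    need = {p: [m - a for m, a in zip(maximum[p], allocation[p])] for p in names}
    work = list(available)
    sequence = []
    while len(sequence) < len(names):
        for p in names:
            if p not in sequence and all(n <= w for n, w in zip(need[p], work)):
                # p can run to completion and then returns its allocation.
                work = [w + a for w, a in zip(work, allocation[p])]
                sequence.append(p)
                break
        else:
            return None  # no remaining process can finish: unsafe
    return sequence

def can_grant(process, request, available, allocation, maximum):
    """Grant only if request <= need, request <= available, and the result is safe."""
    need = [m - a for m, a in zip(maximum[process], allocation[process])]
    if any(r > n for r, n in zip(request, need)):
        return False
    if any(r > a for r, a in zip(request, available)):
        return False
    # Pretend to grant the request, then run the safety check.
    new_available = [a - r for a, r in zip(available, request)]
    new_allocation = dict(allocation)
    new_allocation[process] = [a + r for a, r in zip(allocation[process], request)]
    return find_safe_sequence(new_available, new_allocation, maximum) is not None

available = [3, 1, 1, 2]
allocation = {"P1": [1, 2, 2, 1], "P2": [1, 0, 3, 3], "P3": [1, 2, 1, 0]}
maximum = {"P1": [3, 3, 2, 2], "P2": [1, 2, 3, 4], "P3": [1, 3, 5, 0]}

print(find_safe_sequence(available, allocation, maximum))  # ['P1', 'P2', 'P3']
print(can_grant("P1", [1, 0, 0, 0], available, allocation, maximum))  # True
print(can_grant("P3", [0, 1, 0, 0], available, allocation, maximum))  # False
```

The second request is within P3's need and within the available resources, yet it is refused: granting it would leave no free instance of B, and every process still needs at least one more resource than would remain, so no process could finish.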

Note: Deadlock prevention is more strict than Deadlock Avoidance.

UNIT 3

Device Management

Device management in an operating system means controlling the Input/Output devices like disk, microphone, keyboard, printer, magnetic tape, USB ports, camcorder, scanner, and other accessories, as well as supporting units like control channels. A process may require various resources, including main memory, file access, access to disk drives, and others. If resources are available, they can be allocated, and control returned to the CPU. Otherwise, the process has to be postponed until adequate resources become available. The system has multiple devices, and in order to handle these physical or virtual devices, the operating system requires a separate program, known as a device driver, to communicate with each device’s controller. The operating system also determines whether the requested device is available.

I/O devices may be divided into three categories:

Block Device

Character Device

Network Device

Block Device

It stores data in fixed-size blocks, each with its unique address.

For example, disks.

Character Device

It transmits or accepts a stream of characters, none of which can be addressed individually. For instance, keyboards, printers, etc.

Network Device

It is used for transmitting data packets.

Functions of the device management in the operating system

The operating system (OS) handles communication with the devices via their drivers. The OS component gives a uniform interface for accessing devices with various physical features. There are various functions of device management in the operating system. Some of them are as follows:

It keeps track of data, status, location, usage, etc. The term file system is used for the group of facilities that does this.

It enforces the pre-determined policies and decides which process receives the device when and for how long.

It improves the performance of specific devices.

It monitors the status of every device, including printers, storage drives, and other devices.

It allocates and effectively deallocates the device. Deallocation happens at two levels: first, when an I/O command has been issued and the device is temporarily freed; second, when the job is completed and the device is permanently released.

Types of devices

There are three types of peripheral devices in an operating system: dedicated, shared, and virtual. These are as follows:

1. Dedicated Device

In device management, some devices are allocated or assigned to only one task at a time until that job releases them. Devices such as plotters, printers, tape drives, and other similar devices necessitate such an allocation mechanism because it would be inconvenient if multiple users shared them simultaneously. The disadvantage of such devices is the inefficiency caused by allocating the device to a single user for the whole duration of task execution, even if the device is not used 100% of the time.

2. Shared Devices

These devices can be assigned to several processes. For example, a disk or other direct access storage device (DASD) can be shared by multiple processes simultaneously by interleaving their requests. The Device Manager carefully controls the interleaving, and pre-determined policies must resolve all conflicts.

3. Virtual Devices

Virtual devices are a hybrid of the two: they are dedicated devices that have been transformed into shared devices. For example, a printer can be transformed into a shareable device by using a spooling program that redirects all print requests to a disk. A print job is not sent directly to the printer; instead, it is routed to the disk until it is fully prepared with all of the required sequences and formatting, at which point it is transmitted to the printer. This approach can transform a single printer into numerous virtual printers, improving performance and ease of use.
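The spooling idea can be illustrated with a short sketch. The class PrintSpooler and its methods are hypothetical, and an in-memory queue stands in for the spool area on disk.

```python
from collections import deque

class PrintSpooler:
    """Queue print jobs so that many processes can share one printer."""

    def __init__(self):
        self.spool = deque()  # stands in for the spool area on disk
        self.printed = []     # what the single physical printer has output

    def submit(self, process, document):
        # A process "prints" by adding its job to the spool and returns at once.
        self.spool.append((process, document))

    def run_printer(self):
        # The printer takes complete jobs from the spool one at a time.
        while self.spool:
            process, document = self.spool.popleft()
            self.printed.append(f"{process}: {document}")

spooler = PrintSpooler()
spooler.submit("P1", "report")
spooler.submit("P2", "invoice")
spooler.submit("P1", "summary")
spooler.run_printer()
print(spooler.printed)  # ['P1: report', 'P2: invoice', 'P1: summary']
```

Each process sees what looks like its own printer, while the physical device handles one complete job at a time in submission order.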

Features of Device Management

Various features of device management in the operating system are as follows:

The OS interacts with the device controllers via the device drivers while allocating the device to the multiple processes executing on the system.

Device drivers can also be thought of as system software programs that bridge processes and device controllers.

The device management function's other key job is to implement the API.

Device drivers are software programs that allow an operating system to control the operation of numerous devices effectively.

The device controller used in device management operations mainly contains three registers: command, status, and data.

Buffering in Operating System

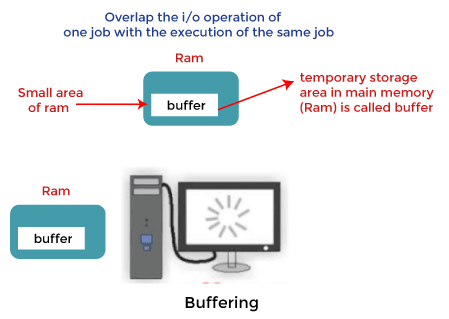

The buffer is an area in the main memory used to store or hold the data temporarily.

In other words, buffer temporarily stores data transmitted from one place to another